Applications

Science of interoperability

To address the complex connectedness of Earth phenomena, we need to study interoperability as a discipline in its own right

Over the past 100 years the world’s total population has quadrupled, from 1.6 billion to 6.6 billion. (Footnote: Population Bulletin, “World Population Highlights: Key Findings From PRB’s 2007 World Population Data Sheet” http://www.prb.org/Articles/2007/623WorldPop.aspx) In many parts of the world we see growing poverty. We now face increasingly worrisome resource constraints and environmental difficulties, and many informed observers question the planet’s ability to sustain its current human population, despite our technological achievements. (Footnote: “Human Carrying Capacity of Earth”, Gigi Richard, Institute for Lifecycle Environmental Assessment, ILEA Leaf, Winter, 2002 issue. http://www.ilea.org/leaf/richard2002.html)

Managing a “soft” leveling off of population growth and resource consumption will require, among other things, that we make the best possible use of information technologies, and particularly geospatial technologies. Viewed separately, these technologies are advancing rapidly and are being used in a growing number of ways. But harnessing them together to meet the challenges of the 21st century will require a new kind of knowledge, new policies, new institutional commitments, and an imaginative reassessment of how we use them.

The Open Geospatial Consortium, Inc. (OGC), founded in 1994, has established a global, commercially driven consensus process for developing geoprocessing interoperability standards. The OGC Interoperability Institute (OGCii), founded in 2006 by OGC directors, promotes research in the area of “interoperability science” to address 1) the need for new knowledge related to the convergence of geospatial technology with related enabling technologies, a convergence enabled by geospatial interoperability, and 2) the need for coordinated research community input into the OGC standards process.

Surging need for interoperability

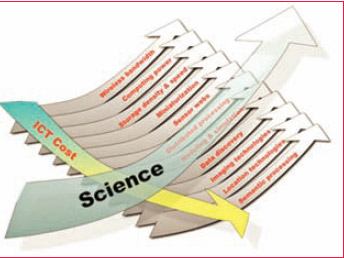

Individually, geospatial technologies and related supporting information technologies are advancing rapidly, as shown in figure 1.

The problem we face does not concern any inadequacy in the basic information technology infrastructure for geospatial interoperability. We can expect adequate progress in all the IT domains we identify as essential. The problem is rather that we still have difficulty using the various kinds of data and online processing services available to support an integrated scientific methodology. Our wealth of data and the unifying power of interoperable information technologies actually serve to illuminate the traditional scientific and institutional barriers to constructing the complex models and analyses needed to represent and address many of today’s most complex scientific and societal challenges.

Interoperability enables resource sharing

Resolving humanity’s resource and environmental difficulties requires that we learn more about the often complex relationships among a wide range of Earth features and phenomena. To do this, scientists must, among other things, be able to find and use each other’s data and online processing services and integrate these into complex models and analyses. Such sharing matters not only for cross-disciplinary studies, but also for longitudinal studies, independent verification of results, and collaboration on the collection of expensive shared data sets and the development of shared Web services. Such sharing is enabled to a large degree by the OpenGIS® Implementation Specifications developed by the OGC in cooperation with other standards organizations. These specifications provide detailed engineering descriptions of open interfaces, encodings and online services that enable diverse systems to operate together in a “loosely coupled” client-server environment.

To enable resource sharing, the first step is simply to make data and services available on the Web through open interfaces. The next step is to carefully describe the data and services in machine-readable and human-readable metadata that conform to ISO standards. By means of this metadata, the data and services can be registered in online catalogs that make them easy to discover, evaluate and access. Fortunately, great progress has been made in the area of metadata and catalog standards. Products are available that make it relatively easy to develop “application schemas” based on ISO standard metadata schemas, and catalog products are available that implement the OGC’s OpenGIS Catalog Services – Web Implementation Specification. In many cases, these are sufficient to make data, online services and encodings easy for humans and software to discover, evaluate and use over the Web.
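As a rough illustration of the discovery step, the sketch below builds a keyword search request against a catalog that implements the Catalog Services for the Web (CSW) interface, using its key-value-pair GET encoding. It is a minimal sketch under stated assumptions, not a reference client: the endpoint `https://catalog.example.org/csw` and the helper name `build_getrecords_url` are hypothetical, CSW version 2.0.2 is assumed, and a real catalog may require different parameters or an XML POST request. The code only constructs the URL and does not contact any server.

```python
from urllib.parse import urlencode


def build_getrecords_url(endpoint: str, keyword: str, max_records: int = 10) -> str:
    """Build a CSW GetRecords URL that searches catalog records by keyword.

    `endpoint` is a hypothetical catalog address supplied by the caller.
    The parameter set assumes CSW 2.0.2 with a CQL text constraint.
    """
    params = {
        "service": "CSW",
        "version": "2.0.2",  # assumed version; check the catalog's capabilities
        "request": "GetRecords",
        "typeNames": "csw:Record",
        "resultType": "results",
        "elementSetName": "brief",  # ask for short summary records
        "constraintLanguage": "CQL_TEXT",
        "constraint_language_version": "1.1.0",
        # Full-text match on any metadata field that contains the keyword.
        "constraint": f"AnyText LIKE '%{keyword}%'",
        "maxRecords": str(max_records),
    }
    # urlencode percent-escapes the constraint so the URL stays valid.
    return f"{endpoint}?{urlencode(params)}"


if __name__ == "__main__":
    print(build_getrecords_url("https://catalog.example.org/csw", "precipitation"))
```

A client would send this request, read the returned metadata records to evaluate fitness for use, and then follow the access links in those records to the data or service itself.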

Addressing complexity

We simply cannot avoid complexity, or the growth of complexity, as we contemplate and attempt interoperability. We must accept the need for diverse conceptual abstractions, classification schemes, data models, processing approaches and recording methods. We must also accept the compounding of complexity when multiple datasets embodying this diversity are used together. Standards are necessary in managing data complexity, but they may not be sufficient. Complexity is as dangerous as it is unavoidable. Like physicists employing mathematical theories in their attempts to describe the cosmic and sub-atomic worlds, geoscientists building computer models of Earth phenomena depend precariously on the accuracy of their data and the validity of their assumptions. The artifacts of modeling can sometimes be mistaken for observations of reality. Other tools that derive much of their utility from computers, such as finite element analysis and Fourier transformation, underwent rigorous community review as they became common tools in the science and engineering toolbox. We unavoidably delve deeper into abstractions as we explore complex relationships between environmental factors, and we need ways of determining whether our inferences and conclusions are valid. Awareness of the need for validation and of the hazards of abstraction compels us to study interoperability.

To address the complex connectedness of Earth phenomena, we need to study interoperability as a discipline in its own right. We need to look for basic principles and practices that can direct our efforts to converge different application domains and to converge geomatics with computer modeling, semantics, high performance computing and other technologies.

Consider, for example, climatology. Knowledge about climate change is of the utmost importance, and climatology is a multidisciplinary field. Geospatial data includes temporal and spatial information about atmospheric gas and particulate distributions and circulations, terrestrial radiation and absorption patterns, weather regimes, bolide impact studies, plant distributions, industrial plant distributions, and data developed by hydrologists, paleoclimatologists, sedimentologists, bathymetrists, oceanographers, demographers and others. Climatology is heavily dependent on computer models, and the computer models need to assimilate many of these diverse kinds of data. Each kind of data has peculiar characteristics and limitations. In many cases, the limitations need to be quantified in statistical parameters within the models. Understanding geomatics and geoprocessing interoperability in the contexts of modeling, semantics, high performance computing and other technologies is clearly of great importance in this context.

Furthermore, getting and using Earth science data involves other considerations besides accuracy and validation. The semantic facilities for interdisciplinary sharing of diverse spatial resources, and the digital rights facilities for such sharing, for example, must smoothly transcend the technical boundaries of Web resources such as search tools, sensor webs, grid computing and location services. And all of these must be harnessed in making huge volumes of data and services accessible to modeling and simulation tools. Together, these challenges demand that we embark on a concerted global effort to study the interoperability-enabled convergence of modeling, semantics, high performance computing and other technologies that together might support the fullest possible use of geospatial data and services in the rapidly evolving ICT environment.

OGCii

Commercially derived interoperability requirements have been the main drivers shaping the evolution in the OGC of the global standards that are used by both practitioners and researchers. These requirements are a great boon to researchers, but they may produce standards that meet only a subset of the interoperability requirements of studies of complex Earth phenomena. Standards developers must, by definition, seek “common denominator” approaches that are “as simple as possible” for good practical reasons. However, this goal frequently runs counter to the need to have standards that are “as complex as necessary” to maintain rigor in scientific specialties.

The OGC Interoperability Institute seeks to coalesce interest groups who have a stake in these issues, with the intention of focusing the research community’s interoperability requirements for insertion into the commercially driven standards process and also focusing attention on the need to make geospatial interoperability the subject of concerted study.
