GREENPATROL: A Galileo Enhanced Solution for Pest Detection and Control in Greenhouses with Autonomous Service Robots

Introduction

The Horizon 2020 (H2020) GREENPATROL project has developed an autonomous robotic solution that has the ability to navigate inside greenhouses while performing early pest detection and control tasks. This paper describes the main developments within the project, including precise positioning and navigation within the light indoor environment of a greenhouse, perception with visual sensing for online pest detection, and strategies for manipulation and motion planning based on pest monitoring feedback.

Motivation for GREENPATROL

With issues such as population growth, climate change, resource shortages and increased competition, a key challenge today for agriculture is to produce more with less. Greenhouses can protect crops from adverse weather conditions, allowing year-round production and consistency of environment in order to increase yields, whilst modern crop management approaches can significantly reduce water usage. However, in a closed environment such as a greenhouse, any infection can spread rapidly without timely pest detection and treatment, which will negatively affect production.

To prevent this, crop inspection to identify pests needs to be executed in a rigorous manner so that the necessary treatment can be applied as early as possible in order to minimise damage. Manual inspection methods were traditionally used, but these are very labour intensive and inefficient, and hence less practical as the area covered by greenhouses increases. As an alternative, automatic inspection using computer vision has become more common. This approach is well suited to carrying out repetitive tasks and can also improve the accuracy of the detection, therefore improving productivity and reducing the use of pesticides.

The main objective of the GREENPATROL project was to design and develop an innovative robotic solution for Integrated Pest Management (IPM) in greenhouse crops, with the ability to navigate inside greenhouses whilst performing pest detection, treatment and control tasks in an autonomous way. Several automatic IPM tools and techniques are already available ([1], [2], [3]), although none of them uses a robotic platform as part of the detection system. The main advantage of a mobile robotic platform is that it can cover a large greenhouse (or multiple greenhouses) with just a few cameras, because the robot moves between locations and inspects plants in different sections of the greenhouse. Also, unlike systems that use fixed guide rails, the robot platform does not require specific fixed infrastructure and can even cope with changes to the greenhouse layout, making it far more flexible and easier to deploy.

Overview of solution

The GREENPATROL solution consists of the following main elements:

• A robot platform to carry the equipment required for inspection and treatment, and with the ability to travel throughout the greenhouse, including performing precise manoeuvres in tight spaces,

• A localization and navigation system to localize the robot platform within the greenhouse and navigate to the required destination for performing a task, whilst avoiding obstacles,

• A pest detection system, to automatically identify pests (at egg, larva and/or adult stage) that are infecting plants in the greenhouse,

• A control and management function for planning the inspection and treatment regimes, and to interface with the farmer to report on findings and action taken and to take on board updated instructions and plans.

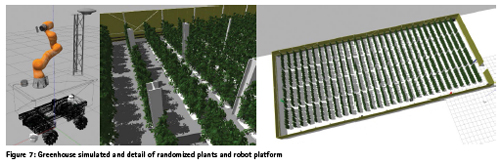

For the GREENPATROL prototype, the robot platform used is a Segway® Flex OMNI. This platform was chosen because it has true holonomic motion, with the ability to manoeuvre in tight spaces. The platform also includes wheel encoders and an Inertial Measurement Unit (IMU), the data from which are combined to obtain an improved odometry estimate.

Nevertheless, it is noted that for commercial deployment in different types of greenhouse a different platform, smaller and lighter than the prototype version, will be used.

Positioning and navigation

One of the key differentiators for the GREENPATROL solution is its ability to autonomously navigate between – and within – greenhouses so that it can execute its planned tasks in different locations. This makes the solution very flexible, but is a challenging undertaking in the greenhouse environment.

As described in [4], the problem of autonomous navigation of mobile robots is divided into three main areas: localization, mapping, and path planning. Localization is the process by which the robot can determine its location and pose with respect to its environment. On the other hand, mapping combines the robot location and pose, and observations of its surroundings, into a consistent model of the local environment. Finally, path planning uses knowledge of the robot location and the map in order to calculate the best route through the environment so it can reach the required location(s) to complete its tasks.
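The path planning step can be illustrated with a minimal sketch. It assumes the map is a small 2D occupancy grid and uses a plain breadth-first search; the function name `plan_path`, the toy map and the choice of search algorithm are illustrative assumptions and are not taken from the GREENPATROL implementation.

```python
from collections import deque


def plan_path(grid, start, goal):
    """Breadth-first search on a 4-connected occupancy grid.

    grid[r][c] == 1 marks an occupied cell, 0 a free cell.
    Returns the list of cells from start to goal, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    parents = {start: None}          # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through the parents to rebuild the route.
            path = []
            while cell is not None:
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in parents:
                parents[(nr, nc)] = cell
                queue.append((nr, nc))
    return None


# Hypothetical toy map: a block of plants (1) surrounded by corridors (0).
toy_map = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
route = plan_path(toy_map, (0, 0), (3, 3))
```

A real planner would work on a much finer grid and take the robot footprint and safety distances into account; the sketch only shows how a known location and a map together yield a route.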

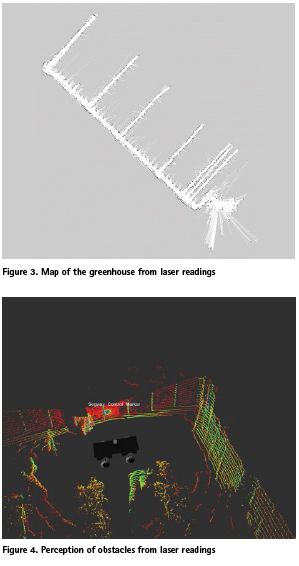

The key to this is the generation of a realistic map of the environment. To build a realistic map, the robot must know its position and orientation (pose) with respect to its local surroundings at all locations. However, to localize itself within the environment, the robot needs an accurate map. This apparently circular problem is known as Simultaneous Localization And Mapping (SLAM) [5]. Popular SLAM implementations use laser range information to directly estimate the 3D locations of the imaged points, and combine this with odometry from the robot and inertial systems to localize the platform within the map.

However, localization from odometry and inertial sensors can be subject to rapid error growth if not constrained, and even with accurate localization it is very difficult to maintain a realistic and up-to-date map against which a vision-based system could perform efficient matching. This is because the greenhouse environment is not static and will change over time as plants grow, the light intensity in the greenhouse changes, and the greenhouse layout is modified. There are even transient obstacles (such as people and other equipment) that need to be avoided.

For these reasons, the GREENPATROL solution has an absolute localization function that provides real-time absolute position and heading information in a global reference frame. Having the absolute position and orientation means that the robot platform always knows where it is in relation to the greenhouse (inside or outside, and whereabouts in the greenhouse). The navigation part can then plan a path through the map from the known location to the required destination, and the relative localization (with laser measurements) can be used to avoid obstacles and to take over positioning in cases where the absolute localization performance is degraded. The absolute localization information is also logged for geo-referencing, to record where actions have taken place (e.g. locations of plants where pests have been detected, locations of plants where treatment has been applied, etc.).

Absolute Localization in the Greenhouse

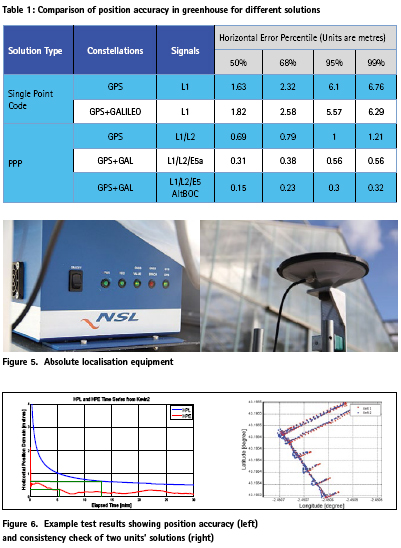

Greenhouses are challenging environments for precise GNSS positioning and navigation, with their metal-reinforced structures of glass or polycarbonate likely to cause multipath and signal blockages. To overcome these issues, the GREENPATROL solution uses a number of novel techniques that allow precise positioning (to 30 cm horizontal accuracy) within the greenhouse.

To enable precise positioning without reliance on local infrastructure, the absolute localization utilizes Precise Point Positioning (PPP) algorithms. A high quality GNSS receiver is used to provide multi-constellation, multi-frequency GNSS observation and navigation data, and these are processed along with real-time precise orbit and clock corrections [7] using bespoke algorithms developed by GMV NSL in order to obtain a precise solution. To allow for the fact that there may be additional noise and multipath in the greenhouse, various data quality checks are employed to identify and remove degraded data. However, the biggest improvement to positioning in this difficult environment comes from the use of the Galileo E5 AltBOC signal.

The Galileo E5 signal uses a class of offset-carrier modulations known as Constant-Envelope AltBOC. The signal has a sub-carrier frequency of 15×1.023 MHz and a code chipping rate of 10×1.023 MHz, and is therefore denoted AltBOC(15,10). With this, the E5 signal offers improved code tracking performance, with jitter of less than 5 cm even at a signal strength of 35 dB-Hz [8]. Due to the code chipping rate and higher signal bandwidth, AltBOC(15,10) also helps to eliminate long-range multipath effects on code phase measurements [9].
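The AltBOC(15,10) figures can be worked through numerically. The short sketch below is a worked calculation only; the E5 centre frequency and the GPS L1 C/A chipping rate used for comparison are taken from the public signal specifications rather than from this article, and all variable names are illustrative.

```python
F0_MHZ = 1.023                   # base frequency unit in the AltBOC(m, n) notation
C_M_PER_S = 299_792_458.0        # speed of light in vacuum

subcarrier_mhz = 15 * F0_MHZ     # sub-carrier frequency, about 15.345 MHz
chip_rate_mhz = 10 * F0_MHZ      # code chipping rate, about 10.23 Mchip/s

# Length of one code chip in metres: a faster code gives a shorter chip.
e5_chip_length_m = C_M_PER_S / (chip_rate_mhz * 1e6)

# For comparison (assumption from the GPS specification): L1 C/A uses 1.023 Mchip/s.
ca_chip_length_m = C_M_PER_S / (1 * F0_MHZ * 1e6)

# Assumption from the Galileo specification: E5 centre frequency in MHz.
E5_CENTRE_MHZ = 1191.795
e5a_mhz = E5_CENTRE_MHZ - subcarrier_mhz   # lower side band (E5a)
e5b_mhz = E5_CENTRE_MHZ + subcarrier_mhz   # upper side band (E5b)

print(f"E5 chip length: {e5_chip_length_m:.1f} m, C/A chip length: {ca_chip_length_m:.0f} m")
print(f"E5a: {e5a_mhz:.2f} MHz, E5b: {e5b_mhz:.2f} MHz")
```

The chip on E5 is ten times shorter than on GPS L1 C/A, which is one way to see why reflections with long extra path lengths disturb the E5 code measurements less.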

As an early activity within the project, the performance of PPP at a static location in the greenhouse with different constellations and signals was assessed. The following table shows position accuracy (after convergence) for different solutions.

The results for standard single point code positioning are shown for comparison. It can be seen that the performance is worse than would be expected in open sky conditions, due to the increased level of multipath and signal blockages. Multi-constellation GPS+Galileo L1E1 performance is better than GPS alone due to the increased number of satellites, meaning that geometry is improved and identification and elimination of degraded measurements is more successful.

PPP performance (using GMV NSL software) is also shown. As expected, the position accuracy is better than standard single point positioning, due to the enhanced orbit and clock messages and the use of carrier phase measurements, which suffer less from multipath. Nevertheless, the high code multipath and frequent cycle slips lead to degraded performance compared to what would be expected in open sky conditions – for GPS only (L1/L2) the 95% horizontal accuracy is 1 m. The inclusion of additional satellites with Galileo E1/E5a does help to improve the solution, but by far the best performance is achieved when using E5 AltBOC for Galileo. This appears to be due to a combination of lower multipath and improved signal tracking (fewer cycle slips).
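For readers unfamiliar with the 95% horizontal accuracy figure, the sketch below shows one common way to compute it from a series of east and north position errors at a static point. The nearest-rank percentile and the function name `horizontal_error_95` are assumptions for illustration; the article does not state how the project computed its statistics.

```python
import math


def horizontal_error_95(east_errors_m, north_errors_m):
    """95th-percentile horizontal error using the nearest-rank method."""
    radial = sorted(math.hypot(e, n) for e, n in zip(east_errors_m, north_errors_m))
    if not radial:
        raise ValueError("no samples")
    # Nearest rank: smallest 1-based index covering at least 95% of the samples.
    rank = (95 * len(radial) + 99) // 100
    return radial[rank - 1]
```

For example, with 20 epochs the function returns the 19th smallest horizontal error.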

In addition to the E5 AltBOC signal, the GREENPATROL solution uses measurements from other sensors to enhance the GNSS PPP solution. Odometer measurements from the robot platform and IMU data are integrated into the position engine, which allows better mitigation of faulty measurements and continuous provision of position and heading information even if GNSS measurements are lost completely. Many different tests have been performed in open sky and greenhouse conditions to understand and assess the performance of the solution. Overall, it has been seen that the real-time position accuracy of the absolute localization solution can achieve 30 cm (95%) and the heading accuracy 5 degrees (95%) in all conditions. This satisfies the system requirements for autonomous navigation and for geo-referencing the different actions taken by the robot.
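The way odometer and heading information can carry the position through a GNSS outage is illustrated by the dead-reckoning step below. This is a minimal 2D sketch under simple assumptions (flat floor, heading measured clockwise from north, travelled distance and heading change supplied per step); it is not the project's position engine, and the function name is hypothetical.

```python
import math


def dead_reckon(east_m, north_m, heading_deg, distance_m, heading_change_deg):
    """Propagate a 2D pose by one odometer/IMU step.

    heading_deg is measured clockwise from north.
    distance_m would come from the wheel encoders and heading_change_deg
    from the IMU (both are assumptions of this sketch).
    """
    heading_deg = (heading_deg + heading_change_deg) % 360.0
    heading_rad = math.radians(heading_deg)
    east_m += distance_m * math.sin(heading_rad)
    north_m += distance_m * math.cos(heading_rad)
    return east_m, north_m, heading_deg


# Start at the last GNSS fix, drive 1 m north, then turn right and drive 2 m east.
pose = dead_reckon(0.0, 0.0, 0.0, 1.0, 0.0)
pose = dead_reckon(*pose, 2.0, 90.0)
```

Because every step adds a little error, dead reckoning alone drifts over time, which is why it complements the GNSS solution rather than replacing it.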

Relative localization and navigation

The generation and use of maps inside greenhouses poses certain problems that do not occur in other types of environment. On the one hand the structure is highly symmetrical, but on the other hand the environment is very irregular due to the presence of the plants. In addition, the environment changes considerably over time as the plants grow, and different growth stages bring large changes in leaf density. The combination of high symmetry and high irregularity makes it very difficult to detect distinguishing features (or recognizable references), which are key in a map-based relative localization system. All this is aggravated by the size of the greenhouse: map generators tend to warp long straight paths, along which previously known distinguishing features are lost from view.

The fact that Absolute Localization feeds into the position solution of Relative Localization mitigates the problems discussed above, as it can make up for the absence of recognizable references by increasing the reliability of the positions taken as input. That is why the algorithms used both for map generation and for localization have been configured so that absolute position-related inputs are given more confidence than those from the sensors that perceive the environment.
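The idea of giving one input more confidence than another can be illustrated with an inverse-variance weighted mean of two independent estimates of the same coordinate. This is a generic textbook sketch, not the configuration used in GREENPATROL; the function name and the example standard deviations are assumptions.

```python
def fuse_estimates(abs_value, abs_sigma, rel_value, rel_sigma):
    """Inverse-variance weighted mean of two independent estimates.

    A smaller sigma means more confidence and therefore a larger weight.
    Returns the fused value and its standard deviation.
    """
    w_abs = 1.0 / abs_sigma ** 2
    w_rel = 1.0 / rel_sigma ** 2
    fused = (w_abs * abs_value + w_rel * rel_value) / (w_abs + w_rel)
    fused_sigma = (1.0 / (w_abs + w_rel)) ** 0.5
    return fused, fused_sigma


# Hypothetical numbers: absolute estimate trusted three times more (in sigma terms).
value, sigma = fuse_estimates(10.0, 0.1, 11.0, 0.3)
```

With these numbers the absolute estimate receives nine times the weight of the relative one, so the fused value stays close to it.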

The Relative Localization, besides transforming absolute coordinates to a relative reference frame, tries to improve the position and orientation solution obtained by the Absolute Localization. Tests in the greenhouse have shown that this solution is good enough that the position cannot be improved, although the orientation can sometimes be improved by up to 30% [10]. In any case, the Relative Localization acts as a safeguard in cases of loss of GNSS signal, changing its configuration to adapt to the circumstances.
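The transformation from absolute coordinates to a local frame can be sketched as follows. The example assumes a local east/north frame anchored at a chosen origin and uses a simple spherical, small-area approximation, which is adequate over the few hundred metres of a greenhouse; the project's actual transformation (and any rotation to align with the map axes) is not described in the article.

```python
import math

EARTH_RADIUS_M = 6_378_137.0  # WGS-84 semi-major axis, used here as a sphere radius


def to_local_frame(lat_deg, lon_deg, origin_lat_deg, origin_lon_deg):
    """Approximate east/north offsets in metres of a point from a local origin."""
    d_lat = math.radians(lat_deg - origin_lat_deg)
    d_lon = math.radians(lon_deg - origin_lon_deg)
    north_m = EARTH_RADIUS_M * d_lat
    # Meridians converge towards the poles, so scale longitude by cos(latitude).
    east_m = EARTH_RADIUS_M * d_lon * math.cos(math.radians(origin_lat_deg))
    return east_m, north_m
```

At this scale, a change of 0.00001 degrees in latitude corresponds to roughly 1.1 m on the ground.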

Navigation is also affected by the previously discussed issue of plant irregularities. The combination of potentially narrow spaces, irregular elements and the need to maintain certain safety distances can lead to situations of blockage in navigation when the same configurations as in wider spaces are applied.

To solve this, an adaptive navigation configuration has been adopted, which adjusts the safety distances, the dynamics of the robot’s movement and the behaviour in the face of unforeseen obstacles according to the free space perceived around the platform at each moment. With this, a navigation approach has been achieved that adapts to a changing environment, is fluid in open spaces and remains viable in narrow spaces.
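A minimal sketch of such an adaptive configuration is given below. It assumes the perceived free space is summarised as a single corridor width; the `NavConfig` fields, the robot width and every threshold are hypothetical placeholders rather than values from the project.

```python
from dataclasses import dataclass


@dataclass
class NavConfig:
    safety_distance_m: float
    max_speed_m_s: float


def adapt_config(free_width_m, robot_width_m=0.8):
    """Choose a navigation profile from the free width perceived around the robot.

    All thresholds and values are illustrative placeholders.
    """
    margin_m = (free_width_m - robot_width_m) / 2.0  # clearance on each side
    if margin_m <= 0.0:
        raise ValueError("corridor is narrower than the robot")
    if margin_m < 0.3:
        # Narrow corridor: accept a smaller safety distance and move slowly.
        return NavConfig(safety_distance_m=margin_m * 0.5, max_speed_m_s=0.2)
    # Open space: keep a larger safety distance and allow a higher speed.
    return NavConfig(safety_distance_m=0.3, max_speed_m_s=0.8)
```

Applying the open-space safety distance in a narrow corridor would leave no admissible path, which is the blockage situation described above; scaling the distance with the available margin avoids it.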

Navigation missions are determined by a high-level planner integrated with the IPM system that generates alternate inspection and treatment missions. Each of these missions is composed of multiple navigation objectives that carry an associated task to be performed at the destination.
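The mission structure described here can be represented with a few simple data types. The class and field names below are hypothetical and only illustrate the relationship between a mission, its navigation objectives and the task attached to each objective.

```python
from dataclasses import dataclass, field
from enum import Enum


class Task(Enum):
    INSPECT = "inspect"
    TREAT = "treat"


@dataclass
class NavigationObjective:
    east_m: float
    north_m: float
    heading_deg: float
    task: Task          # task to perform on arrival at the destination


@dataclass
class Mission:
    name: str
    objectives: list = field(default_factory=list)


def build_mission(name, task, waypoints):
    """Create a mission in which every waypoint carries the same task."""
    objectives = [NavigationObjective(e, n, h, task) for e, n, h in waypoints]
    return Mission(name, objectives)


inspection = build_mission("row-3-inspection", Task.INSPECT,
                           [(2.0, 10.0, 90.0), (4.0, 10.0, 90.0)])
```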

Before testing in the greenhouse, a great effort was made to create a representative simulation scenario for verifying the navigation algorithms, integrated with the rest of the subsystems. Several simulated greenhouses with realistic dimensions and numbers of plants were generated, the largest of which covers 1,500 m² and contains 1,920 plants. To reflect the expected differences between plants, the same 3D plant model was used throughout, with different perceptions of it obtained by applying small randomized variations in position, orientation and size. In this scenario, and simulating different plant growth stages, 36 different missions were carried out involving more than 4,000 navigation targets, and all were achieved without incident.
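The randomized placement can be sketched as follows. The row layout, spacings and variation ranges are assumptions chosen only so that the total matches the 1,920 plants mentioned above; the simulator and the actual ranges used in the project are not described in the article.

```python
import random


def place_plants(n_rows, plants_per_row, row_spacing_m, plant_spacing_m, seed=0):
    """Lay out plants on a regular grid with small random variations.

    Every plant would reuse the same 3D model; only pose and scale differ.
    """
    rng = random.Random(seed)   # seeded so that a scenario can be reproduced
    plants = []
    for row in range(n_rows):
        for index in range(plants_per_row):
            plants.append({
                "x_m": index * plant_spacing_m + rng.uniform(-0.05, 0.05),
                "y_m": row * row_spacing_m + rng.uniform(-0.05, 0.05),
                "yaw_deg": rng.uniform(0.0, 360.0),
                "scale": rng.uniform(0.9, 1.1),
            })
    return plants


# Hypothetical layout: 24 rows of 80 plants gives 1,920 plants.
plants = place_plants(24, 80, row_spacing_m=1.5, plant_spacing_m=0.5, seed=1)
```

Different growth stages could be simulated by shifting the `scale` range, which is again an assumption of this sketch.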

Pest detection

As well as being able to navigate through the greenhouse, another key aspect of the GREENPATROL solution is the ability to autonomously perform the tasks that are assigned to it. The core of this is to automatically perform pest detection within the greenhouse.

There are two aspects to the pest inspection. The first is the automatic, and accurate, identification of pests. The second is the strategy for how to best monitor the greenhouse in order to detect and monitor any infestation that occurs.

For the automatic identification of pests, the GREENPATROL system uses cameras to take images of plants, and machine learning and deep learning techniques to process the images and automatically identify pests. As the plants grow over time, it is important that the system is able to adapt to this change. The cameras are therefore mounted on a robotic arm that allows them to be raised and lowered (to cope with plants of different heights) and twisted to different orientations, so that both the top side and the underside of leaves can be photographed on both sides of the robot platform.

Within GREENPATROL, extensive work has been done on training and testing alternative machine learning and deep learning algorithms in order to determine the best approach to use for real-time detection. An extensive database of images of healthy and infected plants was populated through cultivating plants in lab conditions, as well as setting up an automatic system in a commercial greenhouse to record images in the same environmental conditions (including light levels) as would be expected for the final system.

The images are pre-processed to check for image quality, enhance features and add illumination to very dark areas, and then each image is viewed manually and pests (including eggs, larvae and adult pests) are tagged. This large dataset then provides the information to be able to develop and train the models, and to test the performance.

After investigation, a deep learning model was chosen for full implementation. This is because the deep learning models were more robust than classical computer vision or machine learning approaches with respect to lighting conditions, which is important for real scenarios where the light quality in the greenhouse will vary. In final testing of this model, almost 7,000 images were used for validation: pests were detected with a 91% success rate and correctly identified (i.e. by type of pest) with an 89% success rate, which fulfils the requirements set for the system. A more detailed description of the learning strategies for pest detection and identification can be found in [6].
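The two success rates can be computed from a labelled validation set along the following lines. The record format and, in particular, the choice of denominator (all images that truly contain a pest) are assumptions of this sketch; the article does not define how the published 91% and 89% figures were calculated.

```python
def detection_rates(records):
    """Compute detection and identification rates.

    records: iterable of (true_label, predicted_label) pairs, where None
    means "no pest". Both rates are relative to the images that truly
    contain a pest (an assumption of this sketch).
    """
    with_pest = [(t, p) for t, p in records if t is not None]
    if not with_pest:
        raise ValueError("no images with pests in the validation set")
    detected = [(t, p) for t, p in with_pest if p is not None]
    identified = [(t, p) for t, p in detected if t == p]
    n = len(with_pest)
    return len(detected) / n, len(identified) / n


sample = [
    ("whitefly", "whitefly"),       # detected and correctly identified
    ("whitefly", "tuta_absoluta"),  # detected but wrong pest type
    ("tuta_absoluta", None),        # missed
    ("tuta_absoluta", "tuta_absoluta"),
    (None, None),                   # healthy leaf, ignored by both rates
]
detect_rate, identify_rate = detection_rates(sample)
```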

In terms of the strategy for crop inspection to best detect and monitor infestation, there are several approaches that can be used. On the one hand it can be considered that every plant could be checked every day for signs of pests. However, this would be very time consuming and very inefficient – even for an automated solution. Alternatives include regular inspection of samples throughout the whole greenhouse, or focused inspection of particular areas that are particularly susceptible to infestation. The approach also needs to be adaptable to respond to events, for example inspection may be stepped up in areas adjacent to a section where pests have been detected to identify any spread, or there may be a return to areas where treatment has been applied to assess the effectiveness. In any case, the best strategy for a particular greenhouse will depend on things such as the size of the greenhouse, type of crop, etc. and so the GREENPATROL solution is configurable by the operator to choose the most appropriate strategy to use.
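One of the event-driven rules mentioned above, stepping up inspection around a section where pests have been found, can be sketched as follows. The sketch assumes the greenhouse is divided into a rectangular grid of sections; the function name and the choice of 4-connected neighbours are illustrative assumptions.

```python
def sections_to_recheck(detections, n_rows, n_cols):
    """Return infested sections plus their 4-connected neighbours, sorted.

    detections: iterable of (row, col) sections where pests were found.
    """
    targets = set()
    for r, c in detections:
        for nr, nc in ((r, c), (r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < n_rows and 0 <= nc < n_cols:
                targets.add((nr, nc))
    return sorted(targets)


# A detection in the corner section of a 3 x 3 greenhouse grid.
next_targets = sections_to_recheck({(0, 0)}, 3, 3)
```

The resulting list could then be handed to the mission planner as the next inspection targets, with the operator free to select a different strategy.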

System testing

To complete the project, the GREENPATROL system was demonstrated in the greenhouse. Unfortunately, restrictions imposed during the COVID pandemic affected the test campaign, but two sets of tests were nevertheless performed in the greenhouse in August 2020. One set of tests considered the localization and navigation capability, and a second set checked the pest detection and treatment capability. In the localization and navigation tests, the absolute localization system (using PPP+DR and Galileo E5 AltBOC) was used in combination with the laser measurements and local map data to navigate through the greenhouse. Various routes were planned to test that the robot could navigate autonomously through the greenhouse, whilst avoiding obstacles and the plants themselves.

From these tests it was determined that the localization and navigation function was successful. The robot platform autonomously performed basic navigation manoeuvres – travelling along the main corridor and turning into side corridors – and there were no collisions with infrastructure, or plants. Additional unexpected static obstacles were detected and avoided.

Other validation tests considered the manipulation capabilities of the robot arm, the leaf detection capabilities, the pest detection and identification capabilities, and the spraying capabilities. Various tests for inspecting and treating plants were performed using plants on both the right and left sides of the robot. The onboard cameras were used to acquire pictures of the leaves on the plants, and the deep learning pest detection and identification model was tested.

During the tests, the robot successfully executed simple pest inspection and treatment plans. Although there were challenges with the illumination conditions and occlusion by leaves close to the camera, and most of the pictures obtained showed healthy plants, some Tuta absoluta and Whitefly insects were nevertheless correctly identified.

Summary and conclusions

Overall, the GREENPATROL project has demonstrated the viability of a robotic solution for autonomous detection and treatment of pests within greenhouses.

• The project has developed and demonstrated a Galileo-based GNSS positioning solution with the required accuracy in the greenhouse environment.

• The solution has demonstrated autonomous navigation in the greenhouse, enabled by the absolute localization solution.

• The pest detection and classification ability has been developed and validated.

• A new IPM strategy for scouting in the greenhouse has been developed.

Financial support

The GREENPATROL project (http://GREENPATROL-robot.eu) has received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement No 776324.

Bibliography

[1] Ueka, Y. & Arima, S., 2015. Development of Multi-Operation Robot for Productivity Enhancement of Intelligent Greenhouses: For Construction of Integrated Pest Management Technology for Intelligent Greenhouses. Environmental Control in Biology, pp. 63-70.

[2] Ebrahimi, M. A. K. M. H. M. S. &. J. B., 2017. Vision-based pest detection based on SVM classification method. Computers and Electronics in Agriculture, pp. 52-58.

[3] Xia, C. C. T. S. R. Z. &. L. J. M., 2015. Automatic identification and counting of small size pests in greenhouse conditions with low computational cost. Ecological informatics, pp. 139-146.

[4] Pattinson, Tiwari, Zheng, Fryganiotis, Campo-Cossio, Arnau, Obregon, Ansuategi, Tubio, Lluvia, Rey, Verschoore, Lenza, Reyes, 2018. GNSS Precise Point Positioning for Autonomous Robot Navigation in Greenhouse Environment for Integrated Pest Monitoring. 12th Annual Baska GNSS Conference, May 2018.

[5] Berns K., v. P. E., 2009. Simultaneous localization and mapping (SLAM). In: Autonomous Land Vehicles. s.l.:Vieweg+Teubner.

[6] Gutierrez, A., Ansuategi, A., Susperregi, L., Tubío, C., Rankić, I., & Lenža, L., 2019. A Benchmarking of Learning Strategies for Pest Detection and Identification on Tomato Plants for Autonomous Scouting Robots Using Internal Databases. Journal of Sensors, 2019.

[7] Weber G., Dettmering D., Gebhard H.: Networked Transport of RTCM via Internet Protocol (NTRIP). In: Sanso F. (Ed.): A Window on the Future, Proceedings of the IAG General Assembly, Sapporo, Japan, 2003, Springer Verlag, Symposia Series, Vol. 128, p. 60-64, 2005

[8] Sleewaegen J-M, De Wilde W, Hollreiser M (2004) Galileo AltBOC receiver. In: Proceedings of ENC GNSS 2004, Rotterdam, 16–19 May 2004

[9] Shivaramaiah, N.C., “Code Phase Multipath Mitigation by Exploiting the Frequency Diversity in Galileo E5 AltBOC,” Proceedings of the 22nd International Technical Meeting of the Satellite Division of The Institute of Navigation (ION GNSS 2009), Savannah, GA, September 2009, pp. 3219-3233.

[10] D. Obregon, R. Arnau et al., Precise positioning and heading for autonomous scouting robots in a harsh environment, International Work-Conference on the Interplay Between Natural and Artificial Computation. Springer, Cham, 2019. pp. 82-96.
