
Automatic detection of dead tree from UAV imagery

In this study, we propose combining random forest (RF) classification with a vegetation index to detect dead trees automatically when only an RGB sensor is available


In recent years, a growing number of conifers have been found dying due to climate change in high-altitude areas of Korean national parks. Existing tree surveys have relied on human visual interpretation. With an unmanned aerial vehicle (UAV), however, it is possible not only to survey large areas quickly but also to build and analyze the results as spatial information. In this study, we propose a method for detecting the locations of wilted or dead trees in a UAV orthophoto. The natural monument known as the Yew Trees at Sobaeksan, located in the highlands of Sobaeksan National Park, was selected as the experimental area, and an orthophoto with a resolution of 2 cm was produced by UAV digital photogrammetry. Image segmentation and image classification were performed sequentially through object-based image analysis (OBIA): the orthophoto was split into segments by the large-scale mean-shift (LSMS) segmentation method, and a random forest algorithm was applied for classification. Each segment was classified as a dead tree, a living tree, shadow, or bare area. Because the UAV used in this study carried only an RGB sensor, a vegetation index was used as an additional feature to improve the accuracy of dead tree detection. The normalized green-red difference index (NGRDI), which can be computed from RGB data, was chosen. All data processing was done using Free and Open Source Software for Geospatial (FOSS4G), including OpenDroneMap, Orfeo ToolBox, and QGIS. Experimental results showed that dead trees could be detected automatically from UAV imagery with a confidence level of more than 80%. We also confirmed that the limitations of RGB sensor data can be offset by combining random forest classification with a vegetation index.

Introduction

Dying trees caused by climate change

In recent years, conifers have been found dying at high altitudes in national parks. As temperatures rise due to climate change, conifer species that favor high altitudes and colder climates are adversely affected by temperature stress (Chung et al., 2015). Recently, these symptoms have begun to appear in Sobaeksan National Park, located in the central part of the country, and are also gradually spreading in the southern part.

Survey of vegetation using UAV

Unmanned aerial vehicles (UAVs) collect successive images at low altitude at any desired time. These raw images can be reconstructed into ultra-high-resolution spatial information (orthophoto, point cloud, DSM, textured DSM, etc.) through digital photogrammetry. UAVs have an advantage over conventional satellites and aircraft because the time and place of acquisition are easy to control. For ultra-high-resolution images such as those from UAVs, previous studies have shown that object-based classification can provide higher accuracy than pixel-based classification (Moon et al., 2017).

UAVs have recently been applied to vegetation surveys. Kim et al. (2017) produced a 12 cm-resolution UAV orthophoto to detect pine wood nematode damage and identified pest-infested trees. Park and Park (2015) created a crop classification map through object-based image analysis (OBIA). However, the application of UAVs to dying highland conifers, whose decline is presumed to be caused by climate change, has so far been limited to simple aerial photography.

Purpose of this study

The purpose of this research is to propose a method for automatically detecting dead or dying trees in a survey area using a UAV. The study also demonstrates that a vegetation survey can be carried out effectively even with a low-cost drone by adding a vegetation index at the OBIA stage.

Method

Experimental area

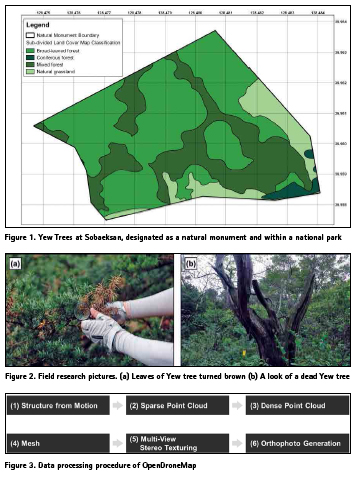

The yew tree community located in Sobaeksan National Park was selected as the study area. This area is designated as a Natural Monument and is the largest Korean yew habitat. It is located at a high altitude of 1,400 m or more. Figure 1 shows a sub-divided land cover map of the study area produced by the Ministry of Environment.

In 2013, a full survey of the 2,046 trees within the Natural Monument boundary was conducted over approximately six months. According to the survey report, the average tree height is about 7 m, and the trees are estimated to be around 200 to 500 years old. Old trees have proven more vulnerable to the factors associated with climate change.

In recent years, a growing number of trees have died or begun dying due to heat waves, droughts, and high-altitude winds, and their symptoms have been observed visually in the field (Figure 2). To better understand these changes, the Sobaeksan National Park Northern Office conducted its first drone survey on June 29, 2018.

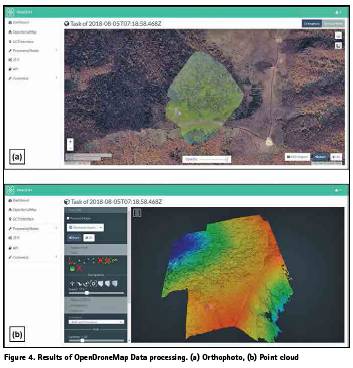

UAV digital photogrammetry

The photographs collected at the site were processed with OpenDroneMap (Benjamin et al., 2018), an open source toolkit for drone image processing that belongs to the family of free and open source software for geospatial (FOSS4G). It supports the creation of spatial information such as point clouds, meshes, textured meshes, and orthophotos from UAV photographs. Through this process, a UAV orthophoto of the study area with a resolution of 2 cm was generated.

Object-based image analysis (OBIA)

Image segmentation and image classification were performed sequentially through OBIA (Kelly et al., 2011). The UAV orthophoto was segmented into individual objects using the large-scale mean-shift (LSMS) segmentation technique (Michel et al., 2015). Segmentation can run into memory limitations, especially when dealing with large spatial datasets. In this study, LSMS achieved stable segmentation by dividing the whole image into tiles and processing it tile by tile. As attribute values, each resulting object carries the mean and standard deviation of the pixel values of the red, green, and blue bands.
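The tile-wise division mentioned above can be illustrated with a minimal sketch. It only enumerates the tile windows that cover an image; it does not reproduce the LSMS algorithm or its stability guarantee across tile borders. The function name `iter_tiles`, the image size, and the tile size are hypothetical values chosen for illustration.

```python
from typing import Iterator, Tuple

Tile = Tuple[int, int, int, int]  # (x_offset, y_offset, width, height)


def iter_tiles(width: int, height: int, tile_size: int) -> Iterator[Tile]:
    """Yield non-overlapping tiles that together cover a width x height image."""
    if width <= 0 or height <= 0 or tile_size <= 0:
        raise ValueError("width, height and tile_size must be positive")
    for y in range(0, height, tile_size):
        for x in range(0, width, tile_size):
            # Edge tiles are clipped so they never extend past the image.
            yield (x, y, min(tile_size, width - x), min(tile_size, height - y))


# Hypothetical image of 2500 x 1200 pixels split into tiles of at most 1000 pixels.
tiles = list(iter_tiles(width=2500, height=1200, tile_size=1000))
for tile in tiles:
    print(tile)
```

Each tile can then be loaded and processed separately, so that peak memory use depends on the tile size rather than on the size of the whole orthophoto.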

The random forest (RF) algorithm was applied to image classification. This method has recently been used for vegetation mapping based on UAV remote sensing (Feng et al., 2015). RF is an ensemble technique composed of a number of independent classifiers whose votes are combined. For example, if 5 of 100 classifiers disagree with the majority class, the prediction can be assigned a confidence of 95%. In this experiment, each object (segment) was classified as a dead tree, a living tree, shadow, or bare area, and the vegetation index was also calculated to provide additional features in the training phase of the classifier.
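The voting example above can be written out as a short sketch. It assumes that confidence is simply the fraction of trees voting for the majority class; actual RF libraries may define confidence differently. The function name `vote_confidence` and the vote counts are hypothetical.

```python
from collections import Counter
from typing import Hashable, Sequence, Tuple


def vote_confidence(votes: Sequence[Hashable]) -> Tuple[Hashable, float]:
    """Return the majority class and the fraction of trees that voted for it."""
    if not votes:
        raise ValueError("at least one vote is required")
    label, count = Counter(votes).most_common(1)[0]
    return label, count / len(votes)


# Hypothetical votes from 100 trees for a single segment:
# 95 agree on "dead tree", 5 vote for other classes.
votes = ["dead tree"] * 95 + ["bare area"] * 3 + ["shadow"] * 2
label, confidence = vote_confidence(votes)
print(label, confidence)
```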

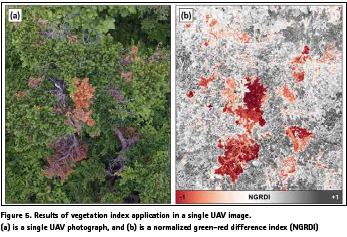

Normalized green-red difference index (NGRDI)

Vegetation indices can quantitatively measure vegetation condition and can serve as a useful feature for improving the accuracy of dead tree detection. Such indices are defined using the wavelength-specific reflectance of the chlorophyll contained in vegetation. The reflectance of healthy vegetation is usually high at near-infrared wavelengths and significantly lower at red wavelengths. Most vegetation indices therefore use a near-infrared band, but the UAV used in this study, a DJI Phantom 4, is equipped only with an RGB camera. We therefore calculated the normalized green-red difference index (NGRDI), which is applicable to RGB sensors. The equation is as follows:

$$\mathrm{NGRDI} = \frac{G - R}{G + R}$$

Here, G and R are the radiometrically normalized pixel values of the green and red bands of the UAV orthophoto, respectively. In a recent study (Zheng et al., 2018), the NGRDI performed well in estimating the vegetation fraction in a UAV orthophoto. Vegetation indices based on visible wavelength bands have mainly been used to diagnose crop condition in agriculture, but they are also applicable to assessing tree health (Meyer and Neto, 2008). The NGRDI result was added to each object of the image segmentation polygon as attribute values (mean and standard deviation) and used as an additional feature for image classification.
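As a minimal sketch of this step, the code below computes the NGRDI, defined as (G - R) / (G + R), for each pixel and then aggregates the mean and standard deviation per segment. The pixel values, the segment ids, the choice of the population standard deviation, and the convention of returning 0 when G + R is 0 are all assumptions made for illustration; they are not taken from the study.

```python
from statistics import fmean, pstdev
from typing import Dict, List, Sequence, Tuple


def ngrdi(green: float, red: float) -> float:
    """NGRDI = (G - R) / (G + R); returns 0.0 when both bands are zero (assumed convention)."""
    total = green + red
    return (green - red) / total if total else 0.0


def segment_ngrdi_stats(
    pixels: Sequence[Tuple[int, float, float]],
) -> Dict[int, Tuple[float, float]]:
    """Map each segment id to the (mean, standard deviation) of its NGRDI values.

    Each pixel is a (segment_id, green, red) tuple with normalized band values.
    """
    values: Dict[int, List[float]] = {}
    for segment_id, green, red in pixels:
        values.setdefault(segment_id, []).append(ngrdi(green, red))
    return {sid: (fmean(v), pstdev(v)) for sid, v in values.items()}


# Hypothetical pixels: segment 1 is greenish, segment 2 is reddish-brown.
pixels = [
    (1, 0.6, 0.2), (1, 0.5, 0.3),
    (2, 0.3, 0.5), (2, 0.2, 0.6),
]
stats = segment_ngrdi_stats(pixels)
for segment_id, (mean, std) in stats.items():
    print(segment_id, round(mean, 3), round(std, 3))
```

In this toy input, the greenish segment has a positive mean NGRDI and the reddish-brown segment a negative one, which is the kind of contrast the classifier can exploit when separating living from dead canopy.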

Results and discussion

The total area of the study site is 330,000 square meters, and the UAV photography was conducted in the parts of the site where dead or dying trees were mainly observed. Figure 4 shows an orthophoto and a point cloud created by OpenDroneMap. In this experiment, we used WebODM, software that processes UAV imagery based on OpenDroneMap.

The results of applying the NGRDI to the UAV imagery are shown in Figure 5. The tree shown was broken by strong winds, and its browning is ongoing. We confirmed that the NGRDI quantitatively distinguishes dead trees and discolored trees.

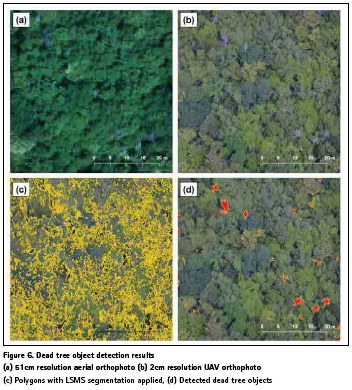

In the ultra-high-resolution UAV orthophoto, dead and living trees could easily be distinguished by visual inspection, whereas individual trees cannot be identified in a conventional aerial photo (Figure 6 (a), (b)). OBIA was implemented with the open source remote sensing library Orfeo ToolBox (Grizonnet et al., 2017) and QGIS (QGIS Development Team, 2018). By applying LSMS segmentation and RF classification, we automatically detected thirteen damaged trees within 2,500 square meters (Figure 6 (c), (d)). In this experiment, the NGRDI vegetation index was used as input data for the RF classification, and it was confirmed to contribute to the reliability of dead tree detection. Dead trees could be detected automatically from UAV imagery with a confidence level of more than 80%. A formal accuracy assessment will be carried out after an additional UAV survey of the study site.
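One way to read the 80% figure is as a threshold applied to the classified segments. The sketch below keeps only segments labeled as dead trees whose confidence reaches a chosen minimum. The `ClassifiedSegment` type, the sample records, and the use of 0.8 as an inclusive cut-off are hypothetical and are not taken from the study's data.

```python
from typing import List, NamedTuple, Sequence


class ClassifiedSegment(NamedTuple):
    segment_id: int
    label: str
    confidence: float  # fraction of agreement in [0, 1]


def select_dead_trees(
    segments: Sequence[ClassifiedSegment], min_confidence: float = 0.8
) -> List[ClassifiedSegment]:
    """Keep segments labeled 'dead tree' with confidence >= min_confidence."""
    return [
        s for s in segments
        if s.label == "dead tree" and s.confidence >= min_confidence
    ]


# Hypothetical classification output for four segments.
segments = [
    ClassifiedSegment(1, "dead tree", 0.91),
    ClassifiedSegment(2, "living tree", 0.97),
    ClassifiedSegment(3, "dead tree", 0.62),
    ClassifiedSegment(4, "dead tree", 0.80),
]
selected = select_dead_trees(segments)
print([s.segment_id for s in selected])
```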

Conclusion

In this study, we proposed combining RF classification with a vegetation index to detect dead trees automatically when only an RGB sensor is available. Compared with a visual field survey, a UAV tree survey has the advantage of observing a large area at one time. The proposed method can locate dead trees accurately, and it is expected to be applicable in related studies.

References

Benjamin, D., Fitzsimmons, S., Toffanin, P., 2018. OpenDroneMap [Computer software], Retrieved Sep. 1, 2018, from https://github.com/OpenDroneMap.

Chung, J., Kim, H., Lee, S., Lee, K., Kim, K., Chun, Y., 2015. Correlation analysis and growth prediction between climatic elements and radial growth for Pinus koraiensis. Korean Journal of Agricultural and Forest Meteorology, 17(2), pp. 85-92.

Feng, Q., Liu, J., Gong, J., 2015. UAV remote sensing for urban vegetation mapping using random forest and texture analysis. Remote Sens., 7, pp. 1074-1094.

Grizonnet, M., Michel, J., Poughon, V., Inglada, J., Savinaud, M., Cresson, R., 2017. Orfeo ToolBox: open source processing of remote sensing images. Open Geospatial Data, Software and Standards, 2:15, pp. 1-8.

Kelly, M., Blanchard, S.D., Kersten, E., Koy, K., 2011. Terrestrial remotely sensed imagery in support of public health: New avenues of research using object-based image analysis. Remote Sens., 3, pp. 2321-2345.

Kim, M.-J., Bang, H.-S., Lee, J.-W., 2017. Use of unmanned aerial vehicle for forecasting pine wood nematode in boundary area: A case study of Sejong metropolitan autonomous city. J. Korean For. Soc., 106(1), pp. 100-109.

Meyer, G.E., Neto, J.C., 2008. Verification of color vegetation indices for automated crop imaging applications. Computers and Electronics in Agriculture, 63, pp. 282-293.

Michel, J., Youssefi, D., Grizonnet, M., 2015. Stable mean-shift algorithm and its application to the segmentation of arbitrarily large remote sensing images. IEEE Transactions on Geoscience and Remote Sensing, 53(2), pp. 952-964.

Moon, H.-G., Lee, S.-M., Cha, J.-G., 2017. Land cover classification using UAV imagery and object-based image analysis – Focusing on the Maseomyeon, Seocheon-gun, Chungcheongnam-do. Journal of the Korean Association of Geographic Information Studies, 20(1), pp. 1-14.

Park, J.K., Park, J.H., 2015. Crops classification using imagery of unmanned aerial vehicle (UAV). Journal of the Korean Society of Agricultural Engineers, 57(6), pp. 91-97.

QGIS Development Team, 2018. QGIS Geographic Information System: Open Source Geospatial Foundation Project [Computer software], Retrieved Sep. 1, 2018, from http://qgis.osgeo.org.

Zheng, H., Cheng, T., Li, D., Zhou, X., Yao, X., Tian, Y., Cao, W., Zhu, Y., 2018. Evaluation of RGB, color-infrared and multispectral images acquired from unmanned aerial systems for the estimation of nitrogen accumulation in rice. Remote Sens., 10(824), pp. 1-17.

The paper was presented at the 39th Asian Conference on Remote Sensing, 15 – 19 October 2018, Kuala Lumpur, Malaysia.
