Technology Trends and Innovation Challenges

We are in an amazing time of technology evolution! In this feature, industry stalwarts from diverse technology domains capture the trends and share their perspectives on the challenges ahead…

The next wave of geospatial innovation

The geospatial profession is evolving. As in other industries, the next wave of geospatial technology innovation will be shaped by big data, cloud computing, the Internet of Things (IoT), and the ubiquity of GIS. Although innovation will be widespread, we focus here on the themes we consider most disruptive in 2018: artificial intelligence, autonomous vehicles, the as-a-service business model, sensor fusion and mixed reality.

Artificial intelligence running deeper

Some of the brightest minds in education, technology and business predict that artificial intelligence (AI) will change everything. The World Economic Forum describes AI as one of the key societal advancements that will define the Fourth Industrial Revolution, a new era of technological disruption building on digitization, mobility and the global connectivity of an expected 4 billion Internet users worldwide by 2020.

By applying the algorithms of artificial intelligence to geospatial data, machines can tell us more about our environment more quickly than humans, an advancement that will transform how we learn, work and live. For example, it is now possible to precisely document and monitor roadside infrastructure like street lamps, road signs and other assets in real time, which will become even more important as autonomous vehicles become more mainstream. When that data is interpreted using artificial intelligence, it can help close the gap between detection and correction of roadside damage to improve public safety.

Deep learning, a branch of AI based on artificial neural networks with many layers, is improving image recognition in geospatial data. The newest version of Trimble's image analysis software, eCognition 9.3, for example, leverages deep learning technology from the Google TensorFlow library, giving customers sophisticated pattern recognition and correlation tools that automate the classification of objects of interest for faster and more accurate results. The Trimble eCognition Palm Oil app, for instance, uses leaf structure to detect palm trees in a block or on a plantation, helping farmers assess the spacing and health of their trees.
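The internals of the Palm Oil app are not described here. As a hypothetical illustration of the downstream spacing check only, suppose each detected tree is reduced to a crown-centre coordinate in metres in a projected coordinate system; the nearest-neighbour distance of each tree then shows where planting is irregular. The function names, input format, target spacing and tolerance are all assumptions, not part of any Trimble product.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # (easting, northing) in metres; hypothetical input format


def nearest_neighbour_spacing(trees: List[Point]) -> List[float]:
    """Distance from each detected tree to its closest neighbour (brute force, O(n^2))."""
    if len(trees) < 2:
        raise ValueError("need at least two trees")
    spacing = []
    for i, (xi, yi) in enumerate(trees):
        best = math.inf
        for j, (xj, yj) in enumerate(trees):
            if i != j:
                best = min(best, math.hypot(xi - xj, yi - yj))
        spacing.append(best)
    return spacing


def flag_irregular(trees: List[Point], target: float, tol: float) -> List[int]:
    """Indices of trees whose nearest-neighbour distance differs from target by more than tol."""
    return [i for i, d in enumerate(nearest_neighbour_spacing(trees))
            if abs(d - target) > tol]


if __name__ == "__main__":
    # Hypothetical detections on a 9 m grid, with one tree planted too close to its neighbour.
    detections = [(0.0, 0.0), (9.0, 0.0), (18.0, 0.0), (0.0, 9.0), (9.0, 9.0), (12.0, 9.0)]
    print(flag_irregular(detections, target=9.0, tol=1.5))  # prints [4, 5]
```

A spatial index (for example a k-d tree) would replace the brute-force loop for plantation-sized inputs; the quadratic version keeps the sketch dependency-free.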

Continued deployment of AI will broaden the range of geospatial data analysis and information that can be automatically extracted to improve decision making.

Autonomous vehicle ripples

Artificial intelligence is also integral to autonomous vehicles, which will rely on real-time positioning and accurate, detailed maps to negotiate urban and rural areas. The autonomous consumer vehicles in rapid development at Tesla, Google and Uber are producing technology advances that will also benefit geospatial solutions providers. These include compact, affordable infrared LiDAR systems that use a rotating laser aided by microelectromechanical systems (MEMS), as well as solid-state LiDAR systems. Applied to the geospatial industry, these components will make it cheaper and easier to maintain and calibrate equipment such as optical total stations and scanners.

Autonomous vehicle systems need high-precision data about the roadway and the assets around it, and geospatial professionals will need to capture that data accurately for them.

In addition, the positioning and visualization technologies that are critical components of autonomous vehicles will expand into other transportation applications, such as freight hauling, where Internet-connected sensors report position, temperature and other data to track the location and status of perishable cargo.

As-a-service adds flexibility

Geospatial customers are increasingly seeing geospatial solutions as a service, rather than a piece of equipment, with the as-a-service model enabling technologies, such as precise positioning, to reach more people so they can improve their workflows and projects.

The Trimble Catalyst GNSS (Global Navigation Satellite System) software receiver, introduced to the market last year, has transformed core GNSS technology into a subscription-based software service for mobile devices, with a range of accuracy levels down to centimeter precision.

This means more people can use their smartphones to receive satellite data for a precise position, a technology advancement that will multiply the capability of field organizations to increase the reach and speed of data collection.

Utilities are one example of the kinds of organizations that want to expand field crews in certain seasons or under various circumstances, but don’t want to invest in more hardware.

The response to positioning-as-a-service will vary by world region, depending on education and comfort levels with on-demand, subscription-based services, but early adopters and software integrators will produce more innovative, less traditional uses, such as event management, explosives disposal, golf course landscaping, trail management, irrigation consulting, farm infrastructure and address allocation.

Solutions from sensor fusion

In the past, we had a cell phone for communication, an iPod for listening to music, and a laptop for internet browsing. Now with smart phones, we have all of those in one device. A similar trend is unfolding in geospatial technology with the fusion of different kinds of sensors, whether GNSS, accelerometers, gyros, magnetometers, barometers or optical sensors.

Sensor fusion combines multiple different sensors in a way that maximizes their combined strengths and minimizes their combined weaknesses. What is exciting now is the growing accessibility of the technology through advances in control technology, computation and sensor miniaturization, which will have an impact across all industries, particularly on the guidance systems used in autonomous vehicles and mobile mapping systems. Combining different sensor technologies will also help solve geospatial problems including position availability, system usability and update rates, especially as advances on all sensor fronts help bring down costs.
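A minimal illustration of "combined strengths": two independent, unbiased measurements of the same quantity (say, altitude from GNSS and from a barometer) can be fused by inverse-variance weighting, which is the static special case of a Kalman filter update. The sensor values, variances and the assumption that both sensors are unbiased are illustrative only; real barometric altitude drifts with weather and usually needs its own bias estimate.

```python
from typing import Sequence, Tuple


def fuse(measurements: Sequence[float], variances: Sequence[float]) -> Tuple[float, float]:
    """Inverse-variance weighted mean of independent, unbiased measurements.

    Returns (fused_value, fused_variance). The fused variance is never larger
    than the smallest input variance.
    """
    if not measurements or len(measurements) != len(variances):
        raise ValueError("need matching, non-empty inputs")
    if any(v <= 0 for v in variances):
        raise ValueError("variances must be positive")
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    value = sum(w * m for w, m in zip(weights, measurements)) / total
    return value, 1.0 / total


if __name__ == "__main__":
    # Illustrative altitudes in metres: GNSS (sigma 3 m) and barometer (sigma 1 m).
    altitude, var = fuse([102.0, 100.0], [9.0, 1.0])
    print(f"{altitude:.2f} m, sigma {var ** 0.5:.2f} m")  # prints 100.20 m, sigma 0.95 m
```

The fused sigma (0.95 m) is better than either sensor alone, and the result leans toward the more precise sensor.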

Modeling new realities

Mixed reality, which integrates digital content with the physical environment so that both can be viewed together, is providing a powerful tool for surveyors, architects, contractors, engineers and clients by removing the constraints of 2D screens.

Mixed reality technologies accelerate design work, allow stakeholders to experience designs in an environmental context, and enable decisions at inception. The technology requires both hardware, such as Microsoft HoloLens or DAQRI Smart Helmet, and software, such as Trimble SketchUp Viewer for HoloLens and Trimble Connect.

Emerging mixed reality technology will transform GIS by displaying assets at the locations recorded for them in a surveyor-mapped database, improving decisions such as where it is safe to dig around underground utilities. Trimble SiteVision, which combines Trimble Catalyst with Google Tango technology and is still in the pilot phase, is one example of a solution that makes mixed reality efficient and accessible for everyday use.
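Placing a surveyed asset in a mixed-reality view requires expressing its database coordinates relative to the viewing device. One common approach, sketched here under the assumption that both positions are WGS 84 latitude, longitude and ellipsoidal height, converts both to Earth-centred, Earth-fixed (ECEF) coordinates and rotates the difference into a local East-North-Up (ENU) frame. This is generic geodesy, not a description of how SiteVision works; the coordinates in the example are made up.

```python
import math
from typing import Tuple

# WGS 84 ellipsoid constants
A = 6378137.0                 # semi-major axis, metres
F = 1 / 298.257223563         # flattening
E2 = F * (2 - F)              # first eccentricity squared


def geodetic_to_ecef(lat_deg: float, lon_deg: float, h: float) -> Tuple[float, float, float]:
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    n = A / math.sqrt(1 - E2 * math.sin(lat) ** 2)  # prime vertical radius of curvature
    x = (n + h) * math.cos(lat) * math.cos(lon)
    y = (n + h) * math.cos(lat) * math.sin(lon)
    z = (n * (1 - E2) + h) * math.sin(lat)
    return x, y, z


def enu_offset(device: Tuple[float, float, float],
               asset: Tuple[float, float, float]) -> Tuple[float, float, float]:
    """East, north, up offset (metres) of asset relative to device; both given as (lat, lon, h)."""
    lat, lon = math.radians(device[0]), math.radians(device[1])
    x0, y0, z0 = geodetic_to_ecef(*device)
    x1, y1, z1 = geodetic_to_ecef(*asset)
    dx, dy, dz = x1 - x0, y1 - y0, z1 - z0
    east = -math.sin(lon) * dx + math.cos(lon) * dy
    north = (-math.sin(lat) * math.cos(lon) * dx
             - math.sin(lat) * math.sin(lon) * dy
             + math.cos(lat) * dz)
    up = (math.cos(lat) * math.cos(lon) * dx
          + math.cos(lat) * math.sin(lon) * dy
          + math.sin(lat) * dz)
    return east, north, up


if __name__ == "__main__":
    # Hypothetical buried-pipe vertex 1.5 m below and a few metres north-east of the device.
    device = (51.500000, -0.120000, 50.0)
    pipe = (51.500030, -0.119950, 48.5)
    print(["%.2f" % v for v in enu_offset(device, pipe)])
```

A rendering engine would then combine this ENU offset with the device's own orientation to draw the asset in place.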

Geospatial technology will also play an increasingly important role in Building Information Modeling (BIM), which offers strong capabilities for modeling and visualization. The building construction industry is filled with opportunities for improved efficiency and productivity through BIM, which is also expanding into civil infrastructure, utilities, power stations and industrial facilities. With close collaboration among project stakeholders as one of the key benefits of BIM, cloud-based services and new software tools for visualization will increase BIM's efficiency and effectiveness over time.

GPS and vehicle signal simulation in automotive testing

Complex vehicle safety and autonomous systems naturally require testing and validation of the multiple data streams that feed them. As advanced driver-assistance systems (ADAS) edge towards their development apogee, and with truly autonomous vehicles becoming a reality, testing these systems will require some lateral thinking to keep the development cycle under control. Any work that can be undertaken on the bench rather than on the test track can reduce time and fiscal overheads.

Because manufacturers control them, they should be able to recreate on the test bench all of the control signals required by autonomous systems and infotainment. Except, that is, for GPS. This is a problem if the system under test requires a satellite fix: how do you go about bench-testing when the only way to get a fix is to go outside?

GPS simulation may not be a process that many vehicle test and development engineers have come across, or researched. It is, however, a method that has been used in the development of GPS-enabled products for as long as there has been a usable civilian signal.

In 2008, Racelogic – who for more than a decade have been supplying GPS-based test systems to vehicle and tyre manufacturers – needed a device capable of generating a realistic satellite signal to aid in the development of their high-accuracy VBOX data loggers. Consequently they created LabSat, which records raw, ‘live-sky’ RF satellite signals for later replay, giving their engineers and production staff an easy and accessible method of putting all of their data logging equipment through a repeatable and consistent bench-test regime.

Traditional simulators had been regarded as a very expensive luxury, which was largely the case; but by employing a record and replay technique and being far more reasonably priced, LabSat quickly established an enviable reputation for using the 'real' GPS signals that engineers valued. As a result, a number of chip and mobile phone manufacturers are increasingly placing a LabSat on each of their developers' desks, favouring the one-button start/stop functionality that lets them concentrate on the test results rather than on managing the simulator.

The reproduction of GPS signals allows for such parameters as receiver sensitivity, signal acquisition, and firmware consistency to be developed and then tested in the laboratory. The immediately obvious benefit is one of absolute convenience – but it isn’t just this that makes the case for GPS simulation so compelling: consistency and repeatability are vital. A simulator can replay, as many times as required, such parameters as signal strength, satellite almanac, obscuration, and multipath signal reflection. This means that in comparison to outdoor testing where conditions will vary, a new system can be lab-developed and edge cases tested time and time again in the knowledge that the signal input is always the same.

As the number of satellite constellations continues to grow, there is a requirement to ensure that each set of signals can be utilised: as well as the American GPS network there is also Russian GLONASS with worldwide coverage; Chinese BeiDou, with Asian coverage currently but global intentions; Japan-only QZSS; and local SBAS services (Satellite-Based Augmentation Systems). Using a LabSat it is possible to test with a combination of some or all of these signals simultaneously, ensuring that the device is capable of global operation.

Recording raw RF GPS data means that when it is replayed it is entirely realistic: in other words, it will replicate the issues commonly encountered when making a journey on public highways, such as multipath or plain loss of satellite reception due to obscuration from buildings or trees. The vehicle systems can therefore be tested against these environments and adjusted accordingly. However, if a 'pure' simulation is required, it can be created in the SatGen software, which produces custom scenarios for any kind of dynamic, length of time, or location. One advantage of a generated scenario like this is the ability to replay data that would be dangerous to attempt on a live track: customisable velocity and acceleration allow systems to be tested outside sensible driving parameters.

The LabSat is now employed in a wide variety of industries, and given Racelogic's existing client base within the automotive sector it was no surprise that it found favour with a number of vehicle manufacturers that need to integrate various signal streams when developing their navigation systems. Most inbuilt satnav units rely on inertial input as well as GPS so that they can continue to operate in areas where the sky is not visible (tunnels) or is partly obscured (the 'urban canyon' phenomenon).

A simulator or record/replay system needs to be able to replicate the multiple signals that enable the development of dead-reckoning units if it is to be considered a companion to traditional outdoor, on-track development. A dead-reckoning (DR) system and connected vehicle sensors can be bench-tested by employing a LabSat turntable to replicate vehicle movement, and the LabSat can also reproduce previously recorded vehicle CAN bus data, synchronised to within 60 ns of the GPS data, as well as serial and digital inputs. There is plenty of scope for those wishing to evaluate their ADAS and autonomous signal streams in conjunction with GPS.
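The core of a dead-reckoning update can be sketched in a few lines. This sketch assumes (hypothetically) that wheel speed arrives from the vehicle CAN bus and yaw rate from a gyro at a fixed rate, starting from the last good GNSS fix; heading is measured clockwise from north, so a positive yaw rate turns right. A production DR unit would also estimate gyro bias and odometer scale factor, which this omits.

```python
import math
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass
class State:
    east: float      # metres from the last GNSS fix
    north: float     # metres from the last GNSS fix
    heading: float   # radians, clockwise from north


def dead_reckon(start: State, samples: Iterable[Tuple[float, float]], dt: float) -> State:
    """Propagate position from (speed m/s, yaw_rate rad/s) samples taken every dt seconds.

    Uses the mid-point heading of each interval, a simple improvement on plain
    Euler integration when the vehicle is turning.
    """
    e, n, psi = start.east, start.north, start.heading
    for speed, yaw_rate in samples:
        mid = psi + 0.5 * yaw_rate * dt
        e += speed * dt * math.sin(mid)
        n += speed * dt * math.cos(mid)
        psi += yaw_rate * dt
    return State(e, n, psi)


if __name__ == "__main__":
    # Hypothetical tunnel: 10 s at 20 m/s heading due east, no turning, sampled at 10 Hz.
    samples = [(20.0, 0.0)] * 100
    end = dead_reckon(State(0.0, 0.0, math.pi / 2), samples, dt=0.1)
    print(f"east {end.east:.1f} m, north {end.north:.1f} m")  # prints east 200.0 m, north 0.0 m
```

Errors in speed and yaw rate accumulate without bound, which is why DR is used to bridge GNSS outages rather than replace GNSS.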

Most GPS-enabled products can be developed and tested using signals that have been recorded or generated with a timestamp from the past. But some applications require a current satellite time signal, such as testing low-dynamic hardware-in-the-loop (HIL) systems, and to this end the forthcoming LabSat RT can produce real-time output. With a latency of only one second, GPS data can be fed into a device under test with the current satellite timestamp, a necessity for anything connected to the internet or reporting back to a central server, for instance, with implications for the development of connected cars in the future.

An amazing period for real time GNSS positioning

Robust positioning in challenging environments has been at the heart of SBG Systems' DNA for more than 10 years. We are at the forefront of Inertial Navigation Systems, fusing motion sensors and GNSS signals to enable continuous mobile positioning for innovative markets such as robotics, surveying, driverless car navigation and defense. Our customers come from very different industries all over the globe, with both real-time and post-processing needs. At the core of the exceptional changes in our industry, we see two huge trends: phenomenal expectations of real-time positioning accuracy and reliability, and an increasing need for accurate 3D maps.

Professionals working on real-time GNSS positioning are living through an amazing period, with the modernization of current constellations and the arrival of a promising new one: Galileo. This new constellation improves availability and is highly compatible with the existing constellations, promising higher performance when Galileo signals are mixed with GPS, GLONASS or BeiDou. This great opportunity comes with the challenge of how to handle the new Galileo signals and best combine them with the existing ones to obtain the best performance in all conditions. Every algorithm design team faces an entirely new constellation with many parameters to handle, synchronise, correct and compute.
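One way to see why adding Galileo to GPS, GLONASS or BeiDou can improve performance is through geometric dilution of precision (GDOP): extra satellites in new directions make the geometry matrix better conditioned. The sketch computes GDOP from receiver-to-satellite directions using the standard single-clock model; it deliberately ignores the inter-system clock offsets that a real multi-constellation solver must also estimate, and the satellite azimuths and elevations are made up.

```python
import math
from typing import List, Sequence, Tuple


def _invert(m: List[List[float]]) -> List[List[float]]:
    """Gauss-Jordan inverse with partial pivoting (adequate for small matrices)."""
    n = len(m)
    aug = [row[:] + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(m)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("singular geometry (too few or badly placed satellites)")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def unit_vector(azimuth_deg: float, elevation_deg: float) -> Tuple[float, float, float]:
    """Receiver-to-satellite unit vector in (east, north, up)."""
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return (math.cos(el) * math.sin(az), math.cos(el) * math.cos(az), math.sin(el))


def gdop(sky: Sequence[Tuple[float, float]]) -> float:
    """GDOP from (azimuth, elevation) pairs in degrees, assuming one receiver clock term."""
    rows = [[-e, -n, -u, 1.0] for e, n, u in (unit_vector(az, el) for az, el in sky)]
    gtg = [[sum(r[i] * r[j] for r in rows) for j in range(4)] for i in range(4)]
    q = _invert(gtg)
    return math.sqrt(sum(q[i][i] for i in range(4)))


if __name__ == "__main__":
    gps_only = [(0, 30), (120, 30), (240, 30), (60, 80), (180, 45)]
    galileo = [(30, 20), (150, 60), (270, 25), (300, 50)]
    print(f"GPS only: {gdop(gps_only):.2f}  GPS+Galileo: {gdop(gps_only + galileo):.2f}")
```

Adding rows to the geometry matrix can only add information, so under this model the combined GDOP is never worse than the single-constellation value.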

Galileo is also part of the other big advance in real-time GNSS: the enhancement of Precise Point Positioning (PPP) technology, with the goal of centimeter-level positioning. Doing without local reference infrastructure and reaching centimeter-level accuracy with a shorter fixing time is a dream for many integrators and could reduce the time to market of several projects.

While the arrival of Galileo and the improvement of PPP technology are amazing opportunities, some markets relying on GNSS technology will continue to face technical challenges. When we speak about real-time performance in the GNSS industry, we mean precision and availability, but also reliability.

Reliability is key for markets based on real-time GNSS positioning. Many outdoor tasks once performed by people, such as agriculture, surveying or driving, are becoming unmanned. Positioning is the core of these systems, and GNSS is only one of the solutions. Even though satellite availability is increasing greatly, there will always be obstructions between the receiver and the satellites. GNSS signals can be cut by masking from urban canyons, forests, mountains or tunnels, or disrupted by intentional spoofing. GNSS integrators need to find the right additional technology to ensure robustness in their navigation solutions, at the right size and price. They may even need more than one sensor to ensure safety, relying on aiding equipment such as LiDARs, odometers, vision and inertial navigation systems. The challenge here is to take the best of each technology, which is another big task for algorithm design teams: how best to handle positioning where GNSS is disturbed, taking into account the aiding technologies, the platform, the conditions of use and so on. Intelligence is the most valuable element in the positioning chain. For example, tightly integrating GNSS with an Inertial Navigation System (INS) is a great way to improve robustness, because it combines all the parameters of the GNSS solution (ephemerides, number of satellites, etc.) with the raw inertial data. The filter can also integrate odometer aiding data when the application is car-based, LiDAR when obstacles are close to the equipment, and so on. This complex computation results in a more precise and robust real-time trajectory, improving the reliability of the overall solution.
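To make the idea concrete without the full machinery of tight coupling (which processes raw pseudoranges and ephemerides), the sketch below is a deliberately simplified, loosely coupled one-dimensional Kalman filter: accelerometer readings drive the prediction, and GNSS position fixes correct it whenever they are available. During an outage, marked `None`, the filter coasts on inertial data alone and its position variance grows until the next fix. The noise values and the 1D state are illustrative assumptions.

```python
from typing import List, Optional, Sequence, Tuple


def gnss_ins_1d(accels: Sequence[float], fixes: Sequence[Optional[float]], dt: float,
                accel_var: float = 0.05, gnss_var: float = 4.0) -> List[Tuple[float, float]]:
    """Return (position estimate, position variance) after each epoch.

    accels: measured acceleration per epoch (m/s^2), used as the control input
    fixes:  GNSS position per epoch (m), or None during an outage
    """
    p, v = 0.0, 0.0                       # state: position (m), velocity (m/s)
    P = [[gnss_var, 0.0], [0.0, 1.0]]     # state covariance
    out = []
    for a, z in zip(accels, fixes):
        # Predict: constant-acceleration kinematics over one epoch.
        p, v = p + v * dt + 0.5 * a * dt * dt, v + a * dt
        q11, q12, q22 = accel_var * dt**4 / 4, accel_var * dt**3 / 2, accel_var * dt**2
        # P <- F P F^T + Q with F = [[1, dt], [0, 1]]
        P = [[P[0][0] + dt * (P[1][0] + P[0][1]) + dt * dt * P[1][1] + q11,
              P[0][1] + dt * P[1][1] + q12],
             [P[1][0] + dt * P[1][1] + q12,
              P[1][1] + q22]]
        # Update with the GNSS position only when a fix is available (H = [1, 0]).
        if z is not None:
            s = P[0][0] + gnss_var
            k0, k1 = P[0][0] / s, P[1][0] / s
            innov = z - p
            p, v = p + k0 * innov, v + k1 * innov
            P = [[(1 - k0) * P[0][0], (1 - k0) * P[0][1]],
                 [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]]]
        out.append((p, P[0][0]))
    return out


if __name__ == "__main__":
    # Stationary vehicle at 5 m: 1 s of fixes, a 1 s outage, then one more fix (10 Hz).
    fixes = [5.0] * 10 + [None] * 10 + [5.0]
    for epoch, (pos, var) in enumerate(gnss_ins_1d([0.0] * len(fixes), fixes, dt=0.1)):
        print(epoch, round(pos, 3), round(var, 3))
```

Odometer or LiDAR aiding would enter the same way as the GNSS fix: as extra measurement updates with their own measurement models and noise.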

Additional challenges are appearing in the driverless car industry: the need for miniaturized sensors, high production volumes and a huge drop in price. The search for safety could lead manufacturers to rely on several sensors. But what is the budget for safety? Is the technology ready?

Although the driverless car industry is still largely in the research and test phase, this market is a good field for implementing the best positioning technology, and it is increasing the need for more detailed and precise maps.

From manually surveying one point at a time to draw 2D maps, to capturing the full environment as thousands of 3D points with vehicle-based surveying solutions, cartographic applications have been revolutionised. Data are now collected on site and sent directly to an office team, located anywhere on earth, ready to post-process them. The need for more precise geographical data coincides with new abilities to collect it in large volumes. This enhanced workflow encourages the use of post-processing to obtain higher accuracy and to solve some of the issues encountered in the field. A “forward-backward” algorithm, for example, uses past as well as future recorded positions (from GNSS, inertial and other sources) to increase the accuracy of the current position. This higher level of performance can save hours of data processing, and it also enables today's extremely portable UAV LiDAR systems.

Whether GNSS positioning is used in real time or post-processed, the technology is increasingly used in consumer applications such as the Internet of Things, Artificial Intelligence, Automation and Virtual Reality. Integrators specialize in their own industries, not in positioning, and they are looking for powerful, easy-to-integrate or even plug-and-play solutions. The challenge here is to democratize the most complex positioning solutions by bringing User Experience (UX) design into all positioning technology development, along with algorithms that help with, and even compensate for, some human mistakes. For instance, inertial sensors need to be installed properly, with a specific alignment. If the installation is imperfect, the embedded algorithm can correct the data so that the overall equipment still works.
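As one hypothetical example of such self-correction: if an inertial sensor is mounted slightly tilted, averaging accelerometer output while the vehicle is stationary on level ground reveals the mounting roll and pitch, because the only specific force sensed is the reaction to gravity. The axis convention (x forward, y right, z down, so a level sensor at rest reads about (0, 0, -g)) and the level-ground assumption are stated in the code. Heading misalignment cannot be recovered this way and needs vehicle motion.

```python
import math
from typing import Iterable, Tuple

Vec = Tuple[float, float, float]


def mounting_tilt(static_accels: Iterable[Vec]) -> Tuple[float, float]:
    """Roll and pitch (radians) of the sensor relative to level, from stationary samples.

    Assumes body axes x forward, y right, z down; ground level; vehicle stationary.
    """
    samples = list(static_accels)
    fx = sum(s[0] for s in samples) / len(samples)
    fy = sum(s[1] for s in samples) / len(samples)
    fz = sum(s[2] for s in samples) / len(samples)
    roll = math.atan2(-fy, -fz)
    pitch = math.atan2(fx, math.hypot(fy, fz))
    return roll, pitch


def level(v: Vec, roll: float, pitch: float) -> Vec:
    """Rotate a body-frame vector into the levelled frame: Ry(pitch) @ Rx(roll) @ v."""
    x, y, z = v
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    y1, z1 = cr * y - sr * z, sr * y + cr * z          # undo roll about x
    return (cp * x + sp * z1, y1, -sp * x + cp * z1)   # undo pitch about y


if __name__ == "__main__":
    g = 9.81
    r, p = math.radians(2.0), math.radians(-3.0)       # hypothetical mounting error
    reading = (g * math.sin(p), -g * math.sin(r) * math.cos(p), -g * math.cos(r) * math.cos(p))
    est_roll, est_pitch = mounting_tilt([reading] * 50)
    print(math.degrees(est_roll), math.degrees(est_pitch))
    print(level(reading, est_roll, est_pitch))          # approximately (0, 0, -9.81)
```

Once roll and pitch are known, every subsequent sample can be passed through `level` before navigation processing.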

GNSS technology promises many improvements in terms of accuracy and availability. Yet there is a lot of work to be done in algorithm design to benefit fully from it, and further effort will be needed to complement GNSS with other sensors to maintain the best performance in all conditions. But is the biggest challenge making it easy to use and affordable?

Photogrammetric drones are innovative tools

The vast world of geomatics is strongly impacted by new technologies for producing and processing digital data. These make it possible to describe the territory and to determine geographical positions using GNSS satellite positioning. Photogrammetric drones are innovative tools that have a great influence on this world. Indeed, these UAVs prove much more efficient than the survey methods traditionally used by surveyors. They save operators real time and money, and also make their work safer. Intelligent drones are increasingly meeting the needs of measurement professionals.

Thanks to their automation and the development of Real Time Kinematic (RTK) technology, they can map large-scale scenes very quickly and with very high accuracy in real time. These scenes can then be reconstructed in 3D as point clouds, orthophotographs or digital terrain models (DTMs). Many trends are spreading in the field of geomatics. One is bathymetry, the science of determining the topography of the seabed: drones are increasingly used to measure underwater depths. In addition, one of the major challenges currently facing geomatics companies is lasergrammetry, a technology that takes over where photogrammetry reaches its limits. Using a laser device called LiDAR, embedded on a drone, it is possible to calculate the distance between a point A and a point B with centimetric precision. Aerial, terrestrial and aquatic lasergrammetry bring very important gains of several kinds. The first is the ability to scan smooth and complex shapes, of which there are many in our urban environments. LiDAR precision also makes it possible to scan very thin objects such as high-voltage lines or railroad tracks, which photogrammetry traditionally cannot do. The second significant point is a tenfold reduction in processing time compared with photogrammetry: where photogrammetry requires several hours of processing, LiDAR data are available almost in real time. Finally, LiDAR information is smart, thanks to multiple echoes. For example, it is possible to obtain a first classification between vegetation and ground, which is very useful for forestry.
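Multi-echo (multiple-return) LiDAR records several returns for a single emitted pulse. As a simplified sketch for a downward-looking airborne scanner: when a pulse yields more than one return, the first often comes from the canopy top and the last from the ground beneath, so their elevation difference gives a rough vegetation height. The record layout, the 0.5 m threshold and the labels are hypothetical; production ground classification uses far more robust filters than this first/last-return rule.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Pulse:
    pulse_id: int
    return_elevations: List[float]   # metres, in order of arrival; at least one return


def classify(pulses: List[Pulse], min_height: float = 0.5) -> Dict[int, str]:
    """Label each pulse 'vegetation' or 'ground' from its first and last returns."""
    labels = {}
    for p in pulses:
        first, last = p.return_elevations[0], p.return_elevations[-1]
        # A single return, or returns at nearly the same elevation, suggests bare ground.
        labels[p.pulse_id] = "vegetation" if first - last >= min_height else "ground"
    return labels


def canopy_heights(pulses: List[Pulse]) -> Dict[int, float]:
    """First-minus-last return elevation for multi-return pulses."""
    return {p.pulse_id: p.return_elevations[0] - p.return_elevations[-1]
            for p in pulses if len(p.return_elevations) > 1}


if __name__ == "__main__":
    pulses = [Pulse(1, [120.0]),                   # open ground, one return
              Pulse(2, [135.2, 128.0, 120.1]),     # tree: crown, branch, ground
              Pulse(3, [120.3, 120.1])]            # low grass
    print(classify(pulses))
    print(canopy_heights(pulses))
```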

In conclusion, working faster, more precisely and more safely with drones is the real revolution for the world of geomatics. LiDAR, widely used by the autonomous cars of the future to see and move, is also already well established for scanning and measurement in the surveying world. We are decidedly living in a time rich in major innovations.

Navigating the fourth industrial revolution

Wider technological advances have seen the geospatial industry strengthen in recent decades, with disruptors such as real-time kinematic (RTK) positioning transforming data collection. Alongside other revolutionary developments in technology, such as artificial intelligence, robotics and automation, many believe that the world is now entering what is known as the fourth industrial revolution (4IR). With greater connectivity and improved processes expected thanks to these innovative technologies, professionals across a variety of verticals will be able to gather more in-depth insights at a faster rate and make real-time decisions.

Agile and automated

While the 4IR movement is still in its infancy, these technological innovations have already started to have a significant impact on the geospatial industry, with robotics and unmanned aerial vehicles (UAVs) working with other devices to improve automation and make processes more efficient than ever before. For example, operators and service providers can now be better connected to their customers by using more diverse data sources to update maps, while agile development methodologies are enabling shorter innovation and production cycles that bring products to market more quickly. Indeed, we've seen evidence of this both at senseFly and across the industry, with new technology projects released every year.

As a business active in a wide range of sectors, we’ve learned how important it is to remain at the forefront of technological developments and be on the pulse of market changes to meet the needs of our customers. At the same time, technological innovations—especially the software that forms a major part of our products—enable us to improve our products and service offering on an ongoing basis. We provide continuous updates and support, which allow customers to benefit from innovative features without having to invest in new hardware. Strategic partnerships, such as those senseFly has created with software providers like Pix4D, AirMap and Airware, help to facilitate this, offering an end-to-end, connected drone solution that makes processes more automated, integrated and efficient, which ultimately improves business operations.

Refining technologies

While automation is, to some extent, already integrated into a number of verticals and processes, high-accuracy satellite positioning techniques such as RTK will increase its possibilities. For instance, the automotive industry is already using geospatial data and taking a collaborative approach to developing autonomous vehicles, a trend mirrored in the drone industry. Integrated UAV solutions are using this data to enhance UAV surveying and mapping capabilities, from automated flight planning and execution to sensors able to visually recognise obstacles and objects during flight. Other emerging technologies, such as artificial intelligence, Big Data and the Internet of Things (IoT), are also continuously being refined. Even so, the convergence of these technologies may well revolutionise the future of rapidly evolving sectors like the automotive, drone and electronics industries, with Big Data set to make processes more precise and accurate and to inform decision-making.
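Automated flight planning typically starts from the ground sampling distance (GSD) the customer needs, then derives flying height and flight-line spacing. The relations below are the standard pinhole-camera approximations, assuming a nadir camera, flat terrain, square pixels and the image's long side oriented across track; the camera parameters in the example are hypothetical and not tied to any senseFly product.

```python
from dataclasses import dataclass


@dataclass
class Camera:
    sensor_width_mm: float
    focal_length_mm: float
    image_width_px: int    # across-track dimension (assumed)
    image_height_px: int   # along-track dimension (assumed)


def gsd_m(cam: Camera, altitude_m: float) -> float:
    """Ground sampling distance in metres per pixel (nadir view, flat terrain)."""
    return cam.sensor_width_mm * altitude_m / (cam.focal_length_mm * cam.image_width_px)


def altitude_for_gsd(cam: Camera, target_gsd_m: float) -> float:
    """Flying height above ground that yields the target GSD."""
    return target_gsd_m * cam.focal_length_mm * cam.image_width_px / cam.sensor_width_mm


def line_spacing_m(cam: Camera, altitude_m: float, sidelap: float) -> float:
    """Distance between adjacent flight lines for a side overlap fraction in [0, 1)."""
    return gsd_m(cam, altitude_m) * cam.image_width_px * (1.0 - sidelap)


def photo_spacing_m(cam: Camera, altitude_m: float, frontlap: float) -> float:
    """Distance between exposures along a line for a forward overlap fraction (square pixels)."""
    return gsd_m(cam, altitude_m) * cam.image_height_px * (1.0 - frontlap)


if __name__ == "__main__":
    cam = Camera(sensor_width_mm=13.2, focal_length_mm=8.8,
                 image_width_px=5472, image_height_px=3648)  # hypothetical camera
    alt = altitude_for_gsd(cam, 0.025)                        # 2.5 cm/px target
    print(f"altitude {alt:.1f} m, line spacing {line_spacing_m(cam, alt, 0.7):.1f} m, "
          f"photo spacing {photo_spacing_m(cam, alt, 0.8):.1f} m")
```

Higher overlaps improve reconstruction robustness at the cost of more flight lines and images, which is the main trade-off a planner exposes to the operator.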

The future of geospatial

Innovation and disruption are at the core of most major advances and, when successful, have the power to change the future of an industry. While these developments clearly offer significant promise for businesses in geospatial and their customers, it is essential that we as an industry evolve in line with the fast-changing nature of these technologies. Balancing the need to improve products' efficiency and performance with safety will be crucial. For instance, at senseFly, a robust, well-trained workforce has had significant, tangible benefits for our ability to adapt to, and work with, emerging technologies. This talent enables us to remain on the cutting edge of the latest technological advances and the ever-changing regulatory landscape, ensuring we are always ready to use these insights to better meet our customers' needs. As regulations become clearer and drone technology becomes simpler and more integrated with innovation, we anticipate that such pioneering advances will support more widespread adoption of drone technology and enable the UAV industry to continue its upward trajectory.

It is a doable task to make an earthquake prediction tool

Today, it is well established and statistically proven that there is a strong correlation between earthquakes and ionospheric disturbances, which can be observed in particular in the F2-layer peak electron density (NmF2) and in GNSS total electron content (TEC). Such correlations between earthquakes and the ionospheric anomalies that preceded them were first described by K. Davies and D. M. Baker in 1965, in relation to the great Alaska earthquake of 1964. However, even today these precursors are not used. The largest recent earthquake, Tohoku in Japan in 2011, had distinct ionospheric precursors three days in advance, which could be observed, for example, on the www.ips.gov.au website, specifically on the Low and Mid Latitude 30-day disturbance index plots, where the indexes went off the 10 TECU scale for three days in a row before the earthquake.
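The Tohoku criterion described above, an index beyond 10 TECU on three consecutive days, can be expressed as a simple run detector. This sketch assumes a plain daily series of disturbance-index values in TECU (the IPS data format is not described here) and, as a further assumption, counts excursions in either direction. It flags anomalies only and says nothing about location or magnitude.

```python
from typing import List, Optional, Sequence, Tuple


def sustained_exceedances(daily_index: Sequence[float], threshold: float = 10.0,
                          min_days: int = 3) -> List[Tuple[int, int]]:
    """Return inclusive (start_day, end_day) runs where |index| > threshold
    for at least min_days consecutive days."""
    runs: List[Tuple[int, int]] = []
    start: Optional[int] = None
    for day, value in enumerate(daily_index):
        if abs(value) > threshold:
            if start is None:
                start = day                     # a new run begins
        else:
            if start is not None and day - start >= min_days:
                runs.append((start, day - 1))   # close a long-enough run
            start = None
    # A run may still be open at the end of the series.
    if start is not None and len(daily_index) - start >= min_days:
        runs.append((start, len(daily_index) - 1))
    return runs


if __name__ == "__main__":
    # Made-up daily index values (TECU).
    series = [2, 3, 11, 12, 14, 4, -12, -13, 1, 15, 16]
    print(sustained_exceedances(series))  # prints [(2, 4)]
```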

The main issue with these precursors today is that the amount of data and the quality of analysis methods are not sufficient to use them with economic efficiency. To use them, we have to be able not only to predict that a major earthquake is going to happen in the next couple of days, but also to pinpoint it with a certain accuracy and estimate its magnitude, so that the relevant agencies can justify acting upon these predictions.

The financial benefit should be noticeable, i.e. acting in advance should cost less than mitigating the results of these events afterwards. If one can predict an earthquake only with relative probability and without knowing the precise location, then the financial losses incurred in acting upon this information will be greater than the losses incurred in neglecting the warning, and as a result of such neglect lives could be lost. We hope that in the not-so-distant future humankind will be able to predict earthquakes with certainty by monitoring the Earth's ionosphere. To predict an earthquake means to be able to estimate in advance, with a given probability, the time, place and magnitude of the earthquake.

Needless to say, the golden era of ionospheric research passed decades ago, leaving us with tremendous advances in radio, navigation and understanding of the Earth's atmosphere. With new technological trends we can return to more scrupulous research, as means of data collection such as GNSS receivers with TEC measurement and scintillation monitoring functions can be used not only by universities but also by individual scientists.
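Receivers with TEC measurement functions typically derive slant TEC from the geometry-free combination of dual-frequency observations: the first-order ionospheric group delay on a signal of frequency f is 40.3 × TEC / f² metres, so the difference P2 − P1 isolates TEC. A minimal sketch using GPS L1/L2 pseudoranges follows; it ignores satellite and receiver differential code biases, which must be calibrated in practice, and the input values are made up.

```python
F_L1 = 1575.42e6  # Hz, GPS L1 carrier frequency
F_L2 = 1227.60e6  # Hz, GPS L2 carrier frequency
K_IONO = 40.3     # m^3 s^-2, first-order ionospheric constant
TECU = 1e16       # electrons per m^2 in one TEC unit


def slant_tec_tecu(p1_m: float, p2_m: float, f1: float = F_L1, f2: float = F_L2) -> float:
    """Slant TEC (TECU) from the geometry-free pseudorange combination P2 - P1.

    Geometry, clocks and troposphere cancel in the difference; code biases and
    multipath do not, so this raw value is noisy and biased.
    """
    scale = (f1 ** 2 * f2 ** 2) / (K_IONO * (f1 ** 2 - f2 ** 2))
    return (p2_m - p1_m) * scale / TECU


if __name__ == "__main__":
    # Synthetic observation: 22,000 km geometric range plus 25 TECU of ionosphere.
    rho, tec = 22_000_000.0, 25.0
    p1 = rho + K_IONO * tec * TECU / F_L1 ** 2
    p2 = rho + K_IONO * tec * TECU / F_L2 ** 2
    print(f"{slant_tec_tecu(p1, p2):.3f} TECU")
```

With these frequencies, each TECU corresponds to roughly 0.105 m of extra L2-minus-L1 delay, which is why code multipath of a few decimetres translates into several TECU of noise.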

New technology trends would permit better data exchange and availability, and better means of data analysis. More efficient and diverse data-processing algorithms can be implemented in the cloud, using clusters working on digitized intermediate frequency (DIF) signals as well as on raw data, which would allow much deeper analysis. The development of artificial intelligence has brought us algorithms that can discern patterns and correlations in large amounts of data on a previously unimaginable level.

I think this is one of the really important challenges, and based on my knowledge, it is a doable task to make an earthquake prediction tool that monitors ionospheric conditions by constantly taking measurements from GNSS sensor networks. Today we can use GPS, GLONASS, QZSS, Galileo, BeiDou and IRNSS satellites in the L1, L2, L5 and S frequency bands, put these data on the Internet and process them with AI algorithms. We already have a significant amount of raw data from over a thousand stations in networks such as IGS and GSI, from occultation experiments such as CHAMP/GRACE, and from various data assimilation methods; however, only raw data, such as various GNSS observables, can currently be collected and processed.

There are no GNSS networks today that can provide DIF data, and few tools similar to the one we have developed to process such data with sufficient accuracy and efficiency. There is a need for GNSS networks that would allow scientists and researchers to access signals in DIF format from GNSS satellites on all frequencies, to advance atmospheric research and, ultimately, to predict short- and long-term weather anomalies and natural disasters, earthquakes in particular.

LiDAR has a crucial role in driverless automobiles

Technology, as a whole, changes and shifts rapidly, with new and exciting developments constantly being introduced. These major shifts, not only in our industry but in our day-to-day lives, have been coming from new technological developments such as the Internet of Things (IoT), Artificial Intelligence (AI), Automation, Robotics, Driverless Automobiles, Wearables, Nano-Tech, Big Data, 3D Printing, Crowd Monitoring, Cloud, and AR/VR/MR, amongst many others. The influence of these quickly growing technologies can be, and has been, felt in many aspects of the industry, including at our own company.

With our focus on providing the most cutting-edge pulsed time-of-flight LiDAR technology for airborne, mobile, terrestrial, industrial and unmanned laser scanning solutions, we have to stay aware of all the shifts and changes in technology. To provide groundbreaking solutions for our users, develop the technologies needed to push into new markets and stay at the forefront of revolutionary systems and solutions, we have to acknowledge new technologies as they arise, adopt and work with those that apply to our technologies, users, markets and applications, and adapt to those that affect our lives.

One of the best examples of this is how we work with the Internet of Things and the cloud in a symbiotic cycle. Thanks to their advanced connectivity capabilities, our laser scanners can contribute to the Internet of Things as part of the network of devices embedded with electronics, software, sensors and network connectivity that allow them to connect and exchange data. The data collected by our scanners can then easily be uploaded to the cloud, where users worldwide can access, use and view it remotely. This is just one example of how these technological developments currently affect us directly.

One of the founding products that our company developed, manufactured and distributed for the industrial sector, a line of distance meters, has been used for years to assist automation in factories. These distance meters can still be used in current automation processes and to develop better workflows that make those processes simpler, more efficient and safer.

As for robotics, our LiDAR sensors have been integrated into robotic units so that data can be acquired with the highest possible accuracy in hard-to-reach or generally unsafe areas while keeping the human user safe. The same principle applies to another burgeoning, rapidly expanding field, unmanned technology, a sector whose growth is also causing many technologies to grow, adapt and change to meet people's needs. LiDAR has also become a highly visible and prevalent component in the development of driverless automobiles. It plays a crucial role there: the detection and ranging it provides essentially gives these cars a “driver” able to detect the distance to objects, map the world around the car and provide a safe experience on the road.
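The ranging principle behind pulsed time-of-flight LiDAR reduces to one relation: range equals the speed of light times the round-trip time, divided by two. The sketch below illustrates that relation and the conversion of one range plus beam angles into a 3D point. It is not the company's processing pipeline; the frame convention (x forward at azimuth 0, z up) and the optional refractive-index parameter are assumptions for illustration.

```python
import math

C_M_PER_S = 299_792_458.0  # speed of light in vacuum


def tof_range_m(round_trip_s: float, group_index: float = 1.0) -> float:
    """Range from the round-trip time of a laser pulse: r = (c / n) * t / 2.

    group_index is an assumed refractive correction (1.0 = vacuum).
    """
    return (C_M_PER_S / group_index) * round_trip_s / 2.0


def to_cartesian(range_m: float, azimuth_deg: float, elevation_deg: float):
    """Convert one range plus beam angles into an (x, y, z) point in an
    assumed scanner frame: x forward at azimuth 0, y at azimuth 90, z up."""
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)
    return (horizontal * math.cos(az), horizontal * math.sin(az), range_m * math.sin(el))


if __name__ == "__main__":
    r = tof_range_m(1e-9)  # a 1 ns round trip is roughly 15 cm of range
    print(r, to_cartesian(r, 90.0, 0.0))
```

The factor of two is why timing resolution matters so much: every nanosecond of timing error corresponds to about 15 cm of range error.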

As for the technological trends and innovation challenges we anticipate, one that we are already seeing, and would like to see continue, is a growing need for LiDAR and LiDAR services in many markets and applications to help fuel these new technologies. LiDAR helps provide a basis on which these new, emerging technologies can flourish and grow. The data we collect with LiDAR furthers the development of these up-and-coming technologies and will also help future technological advancements emerge.

Technology is constantly changing. It is exciting to watch the world shift, and exciting for us to grow with these changes. They present fantastic opportunities for us to tackle new challenges, try new things and expand into new markets, applications and fields. As new technologies emerge, it is up to us to figure out what role each plays in our day-to-day lives, as well as in our business.

Vulnerability mitigation strategies for GNSS-based PNT applications

Hikers, skippers and taxi drivers – everyone uses and blindly trusts GNSS today, and this dependence will likely increase in the future.

But besides the personal users who benefit from satellite-based navigation applications, GNSS has also been woven deeply into the fabric of critical technology used across many industries today: GNSS-based time plays a key role in the operation of communication networks, financial systems and power grids.

As commercial GNSS applications become more complex and fast-paced, technical change is now a constant companion. Assessing the risks and developing a mitigation strategy for GNSS-based applications are no easy tasks, yet developers, owners and operators of many GNSS-based applications must build mitigation strategies into their products or systems to address potential vulnerabilities.

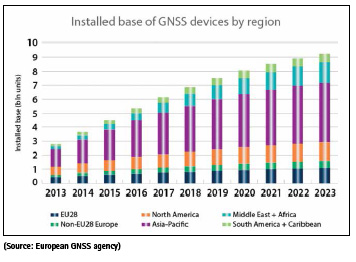

GNSS by the numbers

▪ 7 billion GNSS devices are predicted to be in use worldwide by 2022 (a 9% annual increase).

▪ 16 industries have been identified by the U.S. Department of Homeland Security as critical to the U.S. infrastructure:

▫ Chemical sector

▫ Commercial facilities

▫ Communications sector

▫ Critical manufacturing

▫ Dams sector

▫ Defense industrial base

▫ Emergency services

▫ Energy sector

▫ Financial services

▫ Food & agriculture

▫ Government facilities

▫ Healthcare & public health

▫ Information technology

▫ Nuclear reactors, materials & waste

▫ Transportation systems

▫ Water & wastewater

▪ 5 performance parameters are key to applications considered liability- or safety-critical, according to the European GNSS Agency: integrity, continuity, robustness, availability and accuracy.

▪ 1.5 microseconds is a frequent network synchronization accuracy requirement in the telecom industry (Source: ITU-T Recommendation G.8271/Y.1366 (07/16)).

▪ 100 microseconds is the time synchronization accuracy that MiFID II requires for high-frequency trading in European financial markets.

▪ Human dependency on GNSS will continue to increase as ever more technology trends rely on it: big data, fast data, autonomous transportation (ships, trucks), driverless cars, Smart Factory and Industry 4.0.

Understanding GNSS’ vulnerabilities

Given today’s heavy reliance on GNSS for positioning, navigation and timing (PNT), the vulnerabilities of GNSS signals are becoming increasingly relevant. To better understand the threats, GNSS vulnerabilities are typically divided into two categories: jamming and spoofing.

Jamming

Jamming generally refers to intentional interference by means of a radio-frequency signal. The term interference is sometimes also used for natural causes such as atmospheric phenomena. The effect is the same in both cases: the GNSS receiver’s ability to extract GNSS signal information from the background noise is impaired or lost entirely.

There is also unintentional jamming, often caused by RF transmitters bleeding into the GNSS frequency bands. Illegal consumer-grade GPS jammers (also referred to as Personal Privacy Devices, or PPDs) fall into the category of intentional jamming, even though the receivers they disrupt are typically not the ones they are aimed at: nearby stationary receivers, for example, are “merely” collateral victims of the jamming event. These devices may become more popular over time as public concern over personal privacy grows.

Spoofing

“Spoofing” is the act of broadcasting false signals with the intent of deceiving a GNSS receiver into accepting the false signals as genuine.

From a technical perspective, spoofing a GNSS receiver is more challenging than jamming it, but the consequences are more severe: the receiver uses the manipulated signals for its PNT calculations (rather than rejecting them), and neither the system nor the operator realizes that the indicated PNT data has been corrupted. Spoofing can therefore relocate the receiver’s apparent position, which is not possible with jamming.

Spoofing is not an easy undertaking, especially if the spoofing target is moving fast. But it has been done, and the threat is considered real – and growing – by both government departments and private critical infrastructure operators.
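Because a spoofer that “relocates” the receiver has to move the reported position somewhere, one simple consistency check is to flag consecutive fixes whose implied speed exceeds what the platform can physically do. This is a minimal illustrative sketch, not a method from the article or from any product; the maximum speed is a hypothetical, platform-specific parameter, and the spherical-Earth distance is an approximation.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (spherical approximation)


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two lat/lon points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def implausible_jumps(fixes, max_speed_mps):
    """fixes: list of (time_s, lat_deg, lon_deg) in time order.

    Returns the indices of fixes whose implied speed from the previous fix
    exceeds max_speed_mps (a hypothetical platform limit), or whose
    timestamp does not advance.
    """
    flagged = []
    for i in range(1, len(fixes)):
        t0, lat0, lon0 = fixes[i - 1]
        t1, lat1, lon1 = fixes[i]
        dt = t1 - t0
        if dt <= 0:
            flagged.append(i)
            continue
        if haversine_m(lat0, lon0, lat1, lon1) / dt > max_speed_mps:
            flagged.append(i)
    return flagged
```

A careful spoofer can walk the position off slowly enough to pass such a test, which is one reason the approaches below rely on dedicated detection and on independent reference sources.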

Interference Detection and Mitigation (IDM)

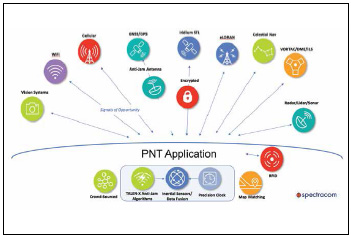

So what makes a PNT application resilient against spoofing and jamming? The answer is best illustrated by the accompanying image, which depicts the different technologies that can be used to augment a PNT solution to obtain a technically well-diversified selection of alternative PNT sources.

The overarching objective in the selection of augmentation sources should always be a mix of different technologies and different platforms; e.g., terrestrial vs. space-based, microwave vs. long wave, etc., thereby decreasing the likelihood that an interference impacts more than one PNT source.
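The benefit of diversity can be made concrete by grouping sources into “failure domains” (sources that one interference event would take out together) and asking how many sources survive if the worst single domain is lost. The sketch below is illustrative only: the domain labels are hypothetical assumptions, not a classification from the article or its figure.

```python
from collections import Counter
from typing import Dict


def worst_case_survivors(sources: Dict[str, str]) -> int:
    """Fewest PNT sources left if one interference event knocks out every
    source that shares a single failure domain (the worst domain to lose).

    sources maps a source name to an assumed failure-domain label.
    """
    if not sources:
        return 0
    per_domain = Counter(sources.values())
    return len(sources) - max(per_domain.values())


# Hypothetical failure-domain labels, for illustration only.
gnss_only = {"GPS": "GNSS L-band", "Galileo": "GNSS L-band", "BeiDou": "GNSS L-band"}
diversified = {
    "GPS": "GNSS L-band",
    "Galileo": "GNSS L-band",
    "STL": "LEO (Iridium)",
    "PTP": "terrestrial network",
    "IRIG": "local wired",
}

if __name__ == "__main__":
    print(worst_case_survivors(gnss_only), worst_case_survivors(diversified))
```

Adding a second GNSS constellation raises the source count but not the worst-case survivors, because both share a domain; adding a source on a different platform or band does.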

Protecting against Interference

The objective here is to prevent unwanted or corrupted signals and data from entering the system in the first place. This can be accomplished with electronically steerable directional antennas, also called Controlled Reception Pattern Antennas (CRPA) or “smart” antennas. Another way to protect a GNSS receiver against jamming is the horizon-blocking antenna. This type of antenna rejects signals arriving from near the horizon, because such signals are more likely to come from ground-based jammers.
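The geometry behind horizon blocking can be illustrated in software as an elevation cutoff: satellites sit high in the sky, while a ground-based jammer a few kilometres away arrives at almost zero elevation. This is a minimal sketch of the geometric idea only; a real horizon-blocking antenna does this physically in its gain pattern, and the 10° cutoff and the example vectors are assumptions.

```python
import math


def elevation_deg(east_m: float, north_m: float, up_m: float) -> float:
    """Elevation angle of a line-of-sight vector given in local east/north/up metres."""
    return math.degrees(math.atan2(up_m, math.hypot(east_m, north_m)))


def accepted_sources(signals, cutoff_deg=10.0):
    """signals: dict name -> (east, north, up) line-of-sight vector in metres.

    Keeps only sources at or above the (assumed) elevation cutoff.
    """
    return [name for name, (e, n, u) in signals.items()
            if elevation_deg(e, n, u) >= cutoff_deg]


if __name__ == "__main__":
    signals = {
        "satellite": (5e6, 5e6, 2e7),          # high in the sky (about 70 degrees)
        "ground_jammer": (3000.0, 4000.0, 10.0),  # 5 km away, 10 m higher
    }
    print(accepted_sources(signals))
```

The trade-off is that low-elevation satellites are rejected too, which weakens satellite geometry; that is acceptable for a static timing antenna but less so for navigation.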

Detecting Interference

In addition to protecting against interference, e.g., with “smart” antennas or horizon-blocking antennas, prompt and correct detection of an interference event is key to alerting the user(s) of a GNSS-based PNT system to the presence of a threat. GNSS receivers are increasingly equipped with jamming-detection functionality that informs the user of a jamming/denial situation, which at least allows quicker diagnostics in the event of an unexpected signal loss. Also, multi-GNSS chipsets in modern receivers often offer integrity monitoring between GNSS systems. However, the level of monitoring capability varies widely, and system integrators often have little control over these settings.
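One common indicator that receiver jamming detectors can use is the carrier-to-noise density ratio (C/N0): a jammer raises the noise floor for every satellite at once, so C/N0 tends to fall on all tracked satellites simultaneously, whereas an obstruction usually affects only some of them. The sketch below shows that idea under stated assumptions; the 6 dB drop and 80% fraction are hypothetical thresholds, not values from any receiver, and production detectors typically also use AGC levels and spectrum monitoring.

```python
from typing import Dict


def jamming_suspected(baseline_cn0: Dict[int, float],
                      current_cn0: Dict[int, float],
                      drop_db: float = 6.0,
                      min_fraction: float = 0.8) -> bool:
    """Flag a possible jamming event when most satellites lose C/N0 together.

    baseline_cn0 / current_cn0 map satellite PRN -> C/N0 in dB-Hz.
    A satellite that is no longer tracked counts as having dropped.
    drop_db and min_fraction are assumed, illustrative thresholds.
    """
    if not baseline_cn0:
        return False
    dropped = 0
    for prn, base in baseline_cn0.items():
        now = current_cn0.get(prn)
        if now is None or base - now >= drop_db:
            dropped += 1
    return dropped / len(baseline_cn0) >= min_fraction


if __name__ == "__main__":
    baseline = {1: 45.0, 7: 44.0, 13: 47.0, 20: 42.0}
    print(jamming_suspected(baseline, {1: 35.0, 7: 36.0, 13: 38.0}))
```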

A relatively new approach to detecting jamming or spoofing attacks is software that monitors the GPS frequency band and applies error-detection algorithms: Spectracom’s SecureSync platform now supports Talen-X’s BroadShield software, which can detect whether the GNSS receiver is being spoofed and, in the event of a signal loss, can provide valuable information about why the signal was lost.

The software’s algorithms can distinguish a jamming event from natural events that weaken the signal. If a monitored signal value exceeds its threshold, the SecureSync time server raises an alarm and invalidates the GPS reference before it can pollute the internal time base. When SecureSync then transitions into Holdover, it “flywheels” on the last clean reference signal.
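The alarm-then-holdover behavior described above can be sketched as a small state machine: while the monitor is below its threshold, the time base follows the GNSS-derived correction; once the threshold is exceeded, the reference is rejected and the last clean correction is reused. This is a generic, hypothetical sketch, not the SecureSync or BroadShield API; the class name, the threshold and the "three clean samples before relocking" rule are all assumptions.

```python
class HoldoverController:
    """Hypothetical sketch of a time base that rejects a suspect GNSS reference."""

    def __init__(self, alarm_threshold: float, clean_samples_required: int = 3):
        self.alarm_threshold = alarm_threshold
        self.clean_samples_required = clean_samples_required  # assumed relock rule
        self.mode = "LOCKED"
        self.alarm = False
        self.clean_count = 0
        self.last_good_correction = 0.0

    def update(self, monitor_value: float, gnss_correction: float) -> float:
        """Return the correction applied to the time base for this epoch."""
        if monitor_value > self.alarm_threshold:
            # Reject the reference before it can pollute the time base.
            self.alarm = True
            self.mode = "HOLDOVER"
            self.clean_count = 0
        elif self.mode == "HOLDOVER":
            # Require several clean epochs in a row before trusting GNSS again.
            self.clean_count += 1
            if self.clean_count >= self.clean_samples_required:
                self.mode = "LOCKED"
                self.alarm = False
        if self.mode == "LOCKED":
            self.last_good_correction = gnss_correction
        # In holdover this "flywheels" on the last clean correction.
        return self.last_good_correction
```

Requiring several clean epochs before relocking avoids flapping between modes when an interferer is intermittent.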

Another software-based approach is reference monitoring software. This type of software can run on time servers that, in addition to GNSS, have other timing reference inputs, such as an IRIG input and/or a PTP reference. The software compares these references continuously: if the GNSS reference exceeds a software-determined, phase-error-based validity threshold, which suggests a jamming or spoofing event, the software automatically issues an alarm.
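A minimal sketch of such a cross-check, assuming each reference's phase offset against the local time base is already measured in seconds: GNSS is compared with the median of the other references, so a single faulty IRIG or PTP input does not dominate the comparison. The median consensus and the 500 ns threshold in the example are assumptions for illustration, not the vendor's algorithm or its software-determined threshold.

```python
from statistics import median
from typing import Dict, Tuple


def gnss_phase_alarm(gnss_offset_s: float,
                     reference_offsets_s: Dict[str, float],
                     threshold_s: float) -> Tuple[bool, float]:
    """Compare the GNSS phase offset with the consensus of other references.

    All offsets are measured against the same local time base, in seconds.
    Returns (alarm, phase_error_s); alarm is True when the GNSS reference
    deviates from the median of the other references by more than threshold_s.
    """
    if not reference_offsets_s:
        raise ValueError("need at least one independent reference")
    consensus = median(reference_offsets_s.values())
    error = gnss_offset_s - consensus
    return abs(error) > threshold_s, error


if __name__ == "__main__":
    refs = {"IRIG": 20e-9, "PTP": 35e-9}
    print(gnss_phase_alarm(40e-9, refs, 500e-9))   # small error: no alarm
    print(gnss_phase_alarm(3e-6, refs, 500e-9))    # 3 us jump: alarm
```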

Mitigating Interference

Interference mitigation is the second aspect of the IDM approach: Isolate the unwanted signal, then quickly reject and replace it, causing minimal system degradation. In essence, this involves the use of augmentation technologies and diversification strategies to supplement GPS/GNSS, thus reducing the dependence on it.

Another well-established technical approach to dealing with a temporary loss of GNSS signals is holdover: oscillators for timing systems, and Inertial Navigation Systems (INS) for navigation systems.
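How long an oscillator can bridge a GNSS outage depends on how fast its time error grows. A common simple model is x(t) = x0 + y0·t + (D/2)·t², with initial time error x0, fractional frequency offset y0 and fractional frequency drift D. The sketch below solves that model for the time until a budget such as the telecom figure of 1.5 µs is reached. The model is a textbook simplification (no noise terms), and the oscillator numbers in the usage note are illustrative assumptions, not datasheet values.

```python
import math


def time_error_s(t_s: float, x0_s: float, y0: float, drift_per_s: float) -> float:
    """Accumulated time error after t_s seconds of holdover:
    x(t) = x0 + y0*t + (D/2)*t^2."""
    return x0_s + y0 * t_s + 0.5 * drift_per_s * t_s**2


def holdover_budget_s(budget_s: float, x0_s: float = 0.0,
                      y0: float = 0.0, drift_per_s: float = 0.0) -> float:
    """Seconds until the time error reaches budget_s.

    x0, y0 and D are treated as worst-case magnitudes (all >= 0).
    Returns math.inf if the error never grows.
    """
    remaining = budget_s - x0_s
    if remaining <= 0:
        return 0.0
    a = 0.5 * drift_per_s
    # Positive root of a*t^2 + y0*t - remaining = 0, in the numerically
    # stable form 2c / (b + sqrt(b^2 + 4ac)); it also covers a == 0.
    denom = y0 + math.sqrt(y0**2 + 4 * a * remaining)
    if denom == 0:
        return math.inf
    return 2 * remaining / denom
```

For example, an assumed frequency offset of 1e-11 with no drift gives `holdover_budget_s(1.5e-6, y0=1e-11)` of about 150,000 s (roughly 42 hours); adding an assumed drift of 1e-10 per day shortens that considerably, which is why drift dominates long holdover.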

Signals of opportunity, such as Wi-Fi and cellular signals, also often fall into the crowdsourced category, since these signals are “borrowed” from systems that were not designed for PNT purposes.

A new mitigation technology is a system called STL (Satellite Time and Location), which provides a space-based PNT source that is an alternative to the GPS constellation. It repurposes the Iridium system’s pager channels to provide a PNT signal accessible to terrestrial receivers.

This system is operational and available today. Because the Iridium satellites orbit much closer to Earth than GNSS satellites, the signal is more than 30 dB stronger than GNSS signals. The signal can, in fact, be received indoors: it is a much narrower-band signal than a standard Iridium communications channel, and it is more resistant to jamming and interference. The STL signal is encrypted; users are assigned a subscriber key to decrypt it.
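To put “more than 30 dB stronger” in perspective: decibels are logarithmic, so 30 dB is a factor of 1,000 in received power. Part of that advantage follows from distance alone, because free-space spreading loss grows with the square of the path length. The sketch below uses rough nominal altitudes (about 20,200 km for GPS, about 780 km for Iridium) at zenith and holds everything else equal; it deliberately does not model transmit power, antenna gain or bandwidth, so it is an illustration of the geometry, not a link budget.

```python
import math


def db_to_power_ratio(db: float) -> float:
    """Convert a power difference in dB to a linear ratio."""
    return 10 ** (db / 10)


def spreading_advantage_db(far_range_km: float, near_range_km: float) -> float:
    """Extra received power (dB) from a shorter path, all else equal
    (inverse-square spreading only)."""
    return 20 * math.log10(far_range_km / near_range_km)


if __name__ == "__main__":
    print(db_to_power_ratio(30.0))                 # 30 dB as a power ratio
    print(spreading_advantage_db(20_200.0, 780.0))  # GPS vs Iridium at zenith (rough)
```

Under these assumptions geometry accounts for roughly 28 dB; the rest of any stated margin would come from other link-budget terms not modeled here.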

Conclusion

Solutions to make PNT systems resilient against vulnerabilities are continually evolving, but detection and mitigation technologies exist today that can be used in combination with GNSS-based PNT equipment, allowing us to continue to rely on trusted GNSS as the main source for positioning, navigation and timing.
