Surveying

Review of the 3D Modelling Algorithms and Crowdsourcing

This study evaluates the current state of the art in algorithms and techniques for 3D modelling and investigates their potential use for a 3D cadastre. The paper is presented in two parts. This issue presents the progress made in using Volunteered Geographic Information (VGI) to visualize the 3D world, and discusses the 3D reconstruction algorithms and techniques that may be used to produce accurate and detailed 3D models. The next issue will examine the potential of using VGI data in 3D reconstruction procedures, including the advantages and disadvantages of this approach, as well as the potential of using VGI data for 3D cadastral surveys.


Image-based 3D reconstruction of buildings and architectural monuments is a well-studied problem in photogrammetry and computer vision. Traditionally, photogrammetric mapping has been the domain of industrial organizations that collect aerial imagery and derive maps, and sometimes 3D information on urban spaces, from it (Leberl, 2010). In recent years, Volunteered Geographic Information (VGI) has become popular; the term describes how an ever-expanding range of users collaboratively collects geographic data (Goodchild, 2007a). Each volunteer acts as a remote sensor (Goodchild, 2007b) by creating and sharing geographic data in a Web 2.0 community, and together the volunteers build a comprehensive data source. Current practices and requirements point towards a future of VGI geodata and Internet-based automated photogrammetric solutions for describing and modelling the 3D world in detail. The crowd, and indeed every Internet user, may thus be regarded as potential neo-photogrammetrists (Leberl, 2010).

As cities expand vertically, securing tenure requires a clear 3D picture of property rights, restrictions and responsibilities. Current research in this field includes integrating the third dimension into the traditional 2D cadastre, adopting automated, low-cost but reliable procedures for cadastral surveys and data processing, using modern IT tools and m-services for cadastral data acquisition, and integrating the “time” parameter into the cadastre. Although much experience has already been accumulated on how a 3D cadastre should best be developed, and 3D cadastre has attracted researchers throughout the world, 3D cadastral technology is still emerging (Dimopoulou et al., 2016). The implementation of 3D cadastre may be accelerated by drawing on the experience gained from 3D building reconstruction using crowdsourced data.

This paper presents a literature review of the progress in techniques, methods and algorithms for 3D model reconstruction and of the experience gained from using crowdsourced data in 3D building reconstruction, and investigates the potential of combining this experience to design low-cost 3D cadastral surveys in urban and suburban areas. Its main contribution is to identify appropriate 3D reconstruction methods and techniques and to introduce 3D-VGI data collection in order to develop a Fit-For-Purpose (FFP) 3D cadastre, in which the necessary cadastral surveys can be conducted in a fast, reliable and affordable way. A theoretical framework for achieving this is proposed.

VGI data in visualizing the 3D world

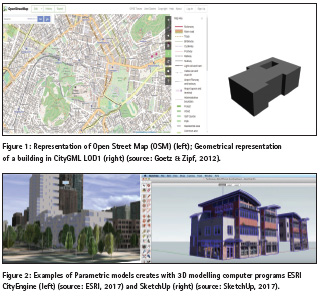

During the last decades, research in the field of VGI has attracted growing interest, resulting in a large number of 3D real-world applications. One of the most successful and popular VGI projects is OpenStreetMap (OSM), started in England in 2004 (Figure 1 left) (OSM, 2016). At the time of writing, OSM had more than two million registered members contributing to its rapid growth (Fan & Zipf, 2016). Over et al. (2010) investigated the possibility of creating a 3D virtual world from OSM data for various applications and concluded that OSM has huge potential for fulfilling the requirements of CityGML LOD1 (Gröger et al., 2008), that is, block models (Figure 1 right). Goetz & Zipf (2012) presented a framework for the automatic VGI-based creation of 3D building models encoded as standardized CityGML models. The basic idea is to establish a correspondence between the properties and classes of OSM and those of CityGML. The investigation concluded that LOD1 and LOD2 models can be created, but not LOD3 and LOD4. The success of the proposed framework depends on developing an algorithm that automates the process and handles incorrect input data using several assumptions.
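The LOD1 block models mentioned above are, in essence, building footprints extruded to a single height. The sketch below illustrates this for one OSM building, assuming the footprint is already projected to metres and that the height comes from the OSM `height` tag or, failing that, from `building:levels` multiplied by a hypothetical storey height of 3 m. It is an illustration of the idea only, not the procedure of Over et al. (2010) or Goetz & Zipf (2012); the class and function names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical default storey height in metres, used only when a building is
# tagged with building:levels but not with height (an assumption of this sketch).
DEFAULT_STOREY_HEIGHT = 3.0


@dataclass
class Lod1Block:
    footprint: list[tuple[float, float]]  # (x, y) vertices in metres, projected CRS
    height: float                          # extrusion height in metres

    def footprint_area(self) -> float:
        # Shoelace formula; abs() makes the result independent of vertex order
        s = 0.0
        n = len(self.footprint)
        for i in range(n):
            x1, y1 = self.footprint[i]
            x2, y2 = self.footprint[(i + 1) % n]
            s += x1 * y2 - x2 * y1
        return abs(s) / 2.0

    def volume(self) -> float:
        # A block model is a vertical prism: volume = base area x height
        return self.footprint_area() * self.height


def block_height(tags: dict[str, str]) -> float | None:
    """Derive an extrusion height from OSM building tags, or None if unknown."""
    if "height" in tags:
        # OSM heights default to metres; strip an optional trailing " m"
        return float(tags["height"].rstrip(" m"))
    if "building:levels" in tags:
        return float(tags["building:levels"]) * DEFAULT_STOREY_HEIGHT
    return None


def to_lod1(footprint: list[tuple[float, float]], tags: dict[str, str]) -> Lod1Block | None:
    height = block_height(tags)
    return None if height is None else Lod1Block(footprint, height)
```

For example, a 10 m × 10 m footprint tagged `building:levels=4` yields a 12 m block with a volume of 1200 m³; a building with neither tag cannot be extruded and returns `None`.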

The earliest and most prominent example of encouraging the crowd to generate spatial 3D information is Google 3D Warehouse, launched on April 24, 2006. This shared repository contains user-generated 3D models of both geo-referenced real-world objects, such as churches or stadiums, and non-geo-referenced prototypical objects, such as trees, light posts or interior objects like furniture. Contributors need a certain level of 3D modelling skill (Uden & Zipf, 2013). Recent 3D modelling software such as SketchUp and ESRI CityEngine (Figure 2), which makes 3D editing easier and more effective, has led to an increase in the production of 3D building models. Around 2007, Microsoft Virtual Earth and Google Earth integrated VGI and crowdsourcing techniques into their projects. 3DVIA (Virtual Earth) and Building Maker (Google Earth) provide model kits for creating buildings. Both exploit a set of oblique (and proprietary) bird's-eye images of the same object taken from different perspectives in order to derive its 3D geometry. These tools aim to create geo-referenced 3D building models and target people who lack technical skills in 3D modelling but still want to contribute (Fan & Zipf, 2016). Even though these projects are based on collaboratively collected data, they are far from being open source or open data. There are, however, several free-to-use 3D object repositories on the Internet, such as OpenSceneryX, Archive3D or Shapeways. Their contents usually lack a connection to the real world but can nonetheless be useful for enriching real 3D city model visualisations (Uden & Zipf, 2013).

A 3D reconstruction can also be performed using 2D vectors and images derived from crowdsourcing strategies (Spanò et al., 2016). Over the years, several projects have appeared that generate and visualize 3D buildings from OSM; OSM-3D, OSM Buildings, Glosm, OSM2World and KOSMOS Worldflier are among the most popular. Their major limitation is that most buildings are modelled only at a coarse level of detail. In OSM-3D, some buildings are modelled in LOD2 when their roof type is indicated (Figure 3).
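Whether a building can be lifted from LOD1 to LOD2 depends on whether roof information is tagged. A minimal sketch, assuming the OSM Simple 3D Buildings convention in which `height` is the total height including the roof and `roof:height` is the height of the roof part, could derive eave and ridge heights as follows. The fallback rules and the function name are assumptions of this sketch, not OSM-3D's actual logic.

```python
from __future__ import annotations


def roof_model(tags: dict[str, str]) -> dict[str, object] | None:
    """Pick LOD1 or LOD2 and derive eave/ridge heights (m) from OSM building tags."""
    if "height" not in tags:
        return None                            # nothing to extrude
    total = float(tags["height"])              # total height, roof included
    shape = tags.get("roof:shape")
    if shape is None:
        # No roof information: flat-topped LOD1 block
        return {"lod": "LOD1", "shape": None, "eave": total, "ridge": total}
    if shape == "flat":
        return {"lod": "LOD2", "shape": "flat", "eave": total, "ridge": total}
    if "roof:height" not in tags:
        # Roof type known but not its height: this sketch falls back to a block
        return {"lod": "LOD1", "shape": shape, "eave": total, "ridge": total}
    roof_height = float(tags["roof:height"])
    return {"lod": "LOD2", "shape": shape, "eave": total - roof_height, "ridge": total}
```

A building tagged `height=10`, `roof:shape=gabled`, `roof:height=3` would thus get 7 m walls and a 10 m ridge.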

Further detail (LOD3/LOD4) is usually integrated manually from other sources via OpenBuildingModels (OBM) (Uden & Zipf, 2013). OBM is a web-based platform for uploading and sharing entire 3D building models. More specifically, users can (i) upload a 3D building model associated with a footprint in OSM, and (ii) browse, view and download existing models in the repository. Users can also add attribute information to a building model (Fan & Zipf, 2016).

A wide range of tools and algorithms is required to support a motivated mapper in collecting 3D information at all levels of detail. Data collection for 3D modelling of simple building properties does not always require high-accuracy sensors, since most data can be estimated by eye. More complex models can only be created using sensors such as laser distance meters, terrestrial and/or aerial imagery, GPS or even terrestrial laser scanners. Many of these sensors are now included in modern smartphones, turning them into multi-sensor systems that are well suited to crowdsourced 3D data capture. In the future, further sensors such as barometers, depth cameras like Microsoft Kinect (Elgan, 2011), and perhaps laser meters and small laser scanners may be included in smartphones, making them even more versatile tools for 3D-VGI. The creation of 3D models also requires well-suited modelling software.

There are several 3D graphics modelling programs, but different tools require different skills. The Kendzi3D JOSM plugin (Kendzi, 2011) is a widely used editor specialised in advanced OSM 3D building modelling; it makes it easier for users to assemble parametric 3D models without dealing with the rather complicated and cumbersome tagging itself. Such an editor could also prevent incorrect modelling and ensure topological consistency in complex 3D objects (Uden & Zipf, 2013). Another example is the system presented by Eaglin et al. (2013), a mobile application that exploits the power of current mobile devices (smartphones, tablets) and their 3D graphics rendering capabilities (Figure 4).

This system is based on a client-server architecture in which users of the mobile application create, submit and vote on 3D models of building interior components; the server collects the votes on the accuracy and completeness of each model and uses them to decide whether an object can be approved. The mobile application allows users to create geometry (such as chairs and tables) through a simplified toolset and editor. The system is reported to give satisfactory results.
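The server-side approval step can be pictured as a simple vote-counting rule. The sketch below is hypothetical throughout: the `ModelSubmission` class, the minimum of five votes and the 70% approval ratio are illustrative assumptions, not the rule used by Eaglin et al. (2013).

```python
from dataclasses import dataclass, field


@dataclass
class ModelSubmission:
    model_id: str
    # Each vote records whether the voter judged the model accurate and complete
    votes: list = field(default_factory=list)

    def add_vote(self, accurate: bool, complete: bool) -> None:
        self.votes.append((accurate, complete))

    def is_approved(self, min_votes: int = 5, min_ratio: float = 0.7) -> bool:
        """Approve once enough voters have judged the model both accurate and complete."""
        if len(self.votes) < min_votes:
            return False
        positive = sum(1 for accurate, complete in self.votes if accurate and complete)
        return positive / len(self.votes) >= min_ratio
```

Requiring a minimum number of votes keeps a single enthusiastic (or malicious) voter from approving a model on their own.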

Traditionally, 3D buildings have been reconstructed from terrestrial photographs using complex and expensive photogrammetric software. Currently, free-to-use assisted 3D modelling software such as Autodesk 123D Catch or My3DScanner offers an alternative for this task.

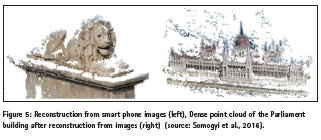

With the rapid development of technology, image-sharing sites and social networks such as Flickr, Instagram, Panoramio, Picasa and Pinterest make it possible to find and exploit crowdsourced amateur images for 3D reconstruction (Figure 5). Research has explored the potential of crowdsourced data in 3D reconstruction procedures (Hadjiprocopis et al., 2014; Somogyi et al., 2016; Hartmann et al., 2016).

Current algorithms and techniques in 3D reconstruction

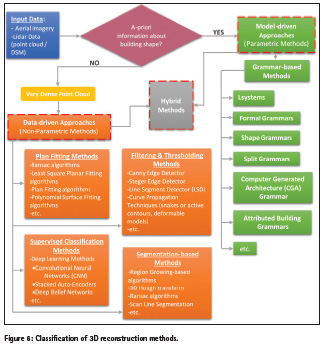

Over the last twenty years, the automatic extraction and reconstruction of 3D buildings has been a major research focus, aiming to replace the manual reconstruction of buildings from aerial imagery via stereoscopy or from lidar data, both time-consuming and laborious tasks. Research has exploited both aerial imagery and lidar data to reconstruct 3D models at varying levels of detail, but the approaches proposed so far have achieved only semi-automation (Brenner, 2005). Studies comparing reconstruction methods suggest that full automation requires aerial imagery and lidar data to be used in synergy, exploiting the superior positional resolution of the former and the superior height resolution of the latter (Brenner, 2005). In recent years, several methods have been developed for building detection, recognition and reconstruction. Based on the degree of contextual knowledge they use, these methods can be divided into three general categories:

▪ Model-driven methods (parametric modelling),

▪ Data-driven methods (non-parametric modelling), and

▪ Hybrid methods.

Each of these categories includes several algorithms and techniques aimed at achieving a successful 3D building reconstruction (Figure 6).

Model-driven methods

Parametric or top-down approaches rely on a priori knowledge of building shapes. A library of parametric shapes consists of a set of predefined patterns, each described by a number of parameters, and the best 3D model is obtained by finding the most appropriate shape and combination of parameter values. The main advantages of parametric methods are their robustness when the original data (extracted building points) are incomplete or weak, and their topologically correct output.
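Choosing the best shape and parameter values from a library can be sketched with a tiny, hypothetical roof library (flat, shed, gable) fitted to roof points along one cross section by linear least squares, keeping the model with the lowest RMS residual. The library, the function name and the assumption that the ridge position and half-span are known from the footprint are all illustrative; a real method would also penalize models with more parameters, since a model that contains another as a special case never fits worse.

```python
import numpy as np


def fit_roof_library(x: np.ndarray, z: np.ndarray, x_ridge: float, half_width: float):
    """Fit each model of a small parametric roof library; return (best name, parameters).

    x: horizontal position of each roof point across the building (m)
    z: measured roof height of each point (m)
    x_ridge, half_width: assumed known from the footprint (ridge axis, half span)
    """
    t = 1.0 - np.abs(x - x_ridge) / half_width   # 1 at the ridge, 0 at the eaves
    library = {
        "flat": np.column_stack([np.ones_like(x)]),      # z = h
        "shed": np.column_stack([np.ones_like(x), x]),   # z = a + b * x
        "gable": np.column_stack([np.ones_like(x), t]),  # z = eave + (ridge - eave) * t
    }
    results = {}
    for name, design in library.items():
        params, *_ = np.linalg.lstsq(design, z, rcond=None)
        rms = float(np.sqrt(np.mean((design @ params - z) ** 2)))
        results[name] = (rms, params)
    best = min(results, key=lambda name: results[name][0])
    return best, results[best][1]
```

For the gable model the two fitted parameters are directly interpretable: the eave height and the ridge-minus-eave height.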

However, a library may not cover every type of building geometry, so buildings with complex geometry may not be reconstructed completely. One of the most popular categories of parametric methods is grammar-based methods.

Grammar-based methods rely on a structure called a grammar. They use a language L(Gb) that includes all possible models built from the components of a building (the building grammar, Gb). Based on Gb, a segmentation process checks every component against a set of rules. Each building consists of individual parts that cannot be segmented further, such as doors, windows and walls.

Grammar-based methods have been used extensively in architectural modelling. The best-known examples are L-systems (Lindenmayer systems), shape grammars, split grammars, Computer Generated Architecture (CGA) grammar, formal grammars and attributed building grammar (Yu et al., 2014). L-systems were developed for modelling plants and are therefore not appropriate for modelling individual buildings. Shape grammars include shape rules and a generation engine that selects and processes the rules.

Shape rules define how an existing shape can be transformed. Shape grammars have been used successfully in architecture (McKay et al., 2012); however, their applicability to the automatic generation of buildings has been limited. Karantzalos & Paragios (2010) proposed a different approach that builds on the shape grammar methodology, using a grammar composed of 3D shape priors. In this approach, the most suitable model is chosen through the optimal selection of the parameters that form the shape of each building part. Wonka et al. (2003) employed a split grammar to generate architectural structures based on a large database of split grammar rules and attributes. In this approach, the split grammar divides the building into parts, and a separate control grammar handles the propagation and distribution of attributes. However, because complex models require an excessive number of splits, these split grammars have difficulty handling the complexity of architectural details. Building on this idea, Müller et al. (2006) presented the Computer Generated Architecture (CGA) grammar to generate detailed building architecture in a predefined style, demonstrated by a virtual reconstruction of ancient Pompeii. It relies solely on context-sensitive shape rules to implement splits along the main axes of the facades. More recently, formal grammars have been applied to reconstruct building facades from point cloud data (Becker & Haala, 2009). Depending on the facade structure, the facade model is defined by a formal grammar in which each rule subdivides a part of the facade into smaller parts according to its layout. However, facade modelling does not consider the entire building and is in some cases limited to certain types of structure, e.g., symmetric structures.
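The split-grammar idea of recursively subdividing a facade can be sketched as a small rule set applied until only terminal symbols remain. The rules, symbols and dimensions below (about 3 m floors, about 2.5 m tiles, one inset window per tile) are hypothetical, and the syntax is plain Python rather than that of Wonka et al. (2003) or CGA (Müller et al., 2006).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Shape:
    symbol: str   # grammar symbol, e.g. "Facade" or "Window"
    x: float      # lower-left corner on the facade plane (m)
    y: float
    w: float      # width (m)
    h: float      # height (m)


def repeat_split(shape: Shape, axis: str, size: float, child: str) -> list:
    """Split a shape into as many equal parts of roughly `size` as fit along one axis."""
    length = shape.w if axis == "x" else shape.h
    n = max(1, round(length / size))
    step = length / n
    if axis == "x":
        return [Shape(child, shape.x + i * step, shape.y, step, shape.h) for i in range(n)]
    return [Shape(child, shape.x, shape.y + i * step, shape.w, step) for i in range(n)]


# Hypothetical rule set: each non-terminal symbol maps to a function producing its children.
RULES = {
    "Facade": lambda s: repeat_split(s, "y", 3.0, "Floor"),
    "Floor": lambda s: repeat_split(s, "x", 2.5, "Tile"),
    # A tile becomes a centred window half its size (wall parts are not emitted here)
    "Tile": lambda s: [Shape("Window", s.x + 0.25 * s.w, s.y + 0.25 * s.h, 0.5 * s.w, 0.5 * s.h)],
}


def derive(axiom: Shape) -> list:
    """Apply rules until only terminal symbols (symbols without a rule) remain."""
    pending, terminals = [axiom], []
    while pending:
        shape = pending.pop()
        if shape.symbol in RULES:
            pending.extend(RULES[shape.symbol](shape))
        else:
            terminals.append(shape)
    return terminals
```

Deriving a 10 m × 9 m facade gives three floors of four tiles each, i.e. twelve windows of 1.25 m × 1.5 m.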
Yu et al. (2014) proposed an automated method, called Attributed Building Grammar, for the complete reconstruction of building structures. Later, Yu et al. (2016) proposed another automated building reconstruction method based on an attributed building grammar. It starts by segmenting the initial point cloud to extract planar, cylindrical and other types of surfaces using methods such as Principal Component Analysis (PCA). The segmented data are transformed into 3D shapes that represent the building structures. A grammar engine then drives the reconstruction process, enforcing the appropriate rules for further subdivision into elementary shape structures. The subdivision is described by a tree structure in which each node represents a shape and the leaves represent the elementary objects. Finally, the 3D model is composed of the individual elementary objects obtained through the combined use of the grammar and its rules. This methodology can produce 3D models in various forms (e.g., CAD, BIM).
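The PCA step used to recognise planar surfaces can be illustrated on a single point patch: the eigenvector belonging to the smallest eigenvalue of the covariance matrix approximates the plane normal, and the share of variance along it indicates how planar the patch is. This is a generic sketch, not the segmentation of Yu et al. (2016); the planarity score is an assumed, commonly used heuristic.

```python
import numpy as np


def pca_plane(points: np.ndarray):
    """Fit a plane to an (N, 3) point array with PCA.

    Returns the centroid, the unit normal (eigenvector of the smallest covariance
    eigenvalue) and a planarity score that is close to 1 for a flat patch.
    """
    centroid = points.mean(axis=0)
    cov = np.cov((points - centroid).T)        # 3 x 3 covariance matrix
    eigvals, eigvecs = np.linalg.eigh(cov)     # eigenvalues in ascending order
    normal = eigvecs[:, 0]
    # Share of the total variance that does NOT lie along the normal
    planarity = 1.0 - eigvals[0] / eigvals.sum()
    return centroid, normal, planarity
```

A segmentation would then accept a patch as planar when the score exceeds a chosen threshold, and use the centroid and normal as the plane parameters.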

Data-driven methods

In data-driven or bottom-up approaches, the points belonging to the roof are extracted from a set of building measurements, grouped into roof planes with 2D topology, and used for 3D modelling. The main advantage of the data-driven approach is that no prior knowledge of a specific building structure is needed; however, it requires a very dense set of building points to reconstruct 3D models correctly. Data-driven methods tend to be more flexible, as they build the roof model from the extracted data. Since the geometry of a roof can be described by the number and shape of its faces, most methods aim to classify roof planes in the input dataset. Current methodologies and algorithms for building detection and extraction can be divided into four groups: Plane Fitting based methods, Filtering and Thresholding based methods, Segmentation based methods (Shadow based Segmentation, Region Growing based algorithms, etc.) and Supervised Classification methods.

The literature offers a variety of approaches that apply Plane Fitting based methods to data from active sensors (e.g., a lidar Digital Surface Model, DSM) or to point clouds obtained through photogrammetric processing. Widely used algorithms include RANSAC, least squares plane fitting (Omidalizarandi & Saadatseresht, 2013) and further plane fitting based algorithms (McClune et al., 2016). Wang (2016) proposed an automated method that uses Semi-Global Matching (SGM) (Hirschmüller, 2008) to produce a dense point cloud from high-resolution aerial imagery and to extract building outlines. The ground surface was then extracted by polynomial surface fitting. Building volumes were identified from the resulting normalized DSM (nDSM), using a seed area approach with radiometric or other criteria to further categorize the elements located on each building roof. The roof outline was extracted in vector form with the split-and-merge method. The proposed methodology can be applied in areas with complex building types but not in densely urbanized areas.
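The nDSM step can be sketched as fitting a second-order polynomial ground surface to ground cells by least squares and subtracting it from the DSM. The sketch assumes the ground cells are already known (`ground_mask`), which in practice is itself a filtering problem, and uses a hypothetical 2.5 m threshold to flag above-ground objects; it does not reproduce the SGM matching or the seed-area classification described above.

```python
import numpy as np


def poly_terms(x: np.ndarray, y: np.ndarray) -> np.ndarray:
    # Second-order bivariate polynomial terms: 1, x, y, x^2, xy, y^2
    return np.column_stack([np.ones_like(x), x, y, x**2, x * y, y**2])


def normalized_dsm(dsm: np.ndarray, ground_mask: np.ndarray, min_height: float = 2.5):
    """Subtract a least-squares polynomial ground surface from a DSM grid.

    dsm: 2D array of surface heights (m); ground_mask: boolean array marking cells
    assumed to be bare ground. Returns (ndsm, above_ground_mask).
    """
    rows, cols = np.indices(dsm.shape)
    x = cols.ravel().astype(float)
    y = rows.ravel().astype(float)
    g = ground_mask.ravel()
    # Fit the ground polynomial using the ground cells only
    coeffs, *_ = np.linalg.lstsq(poly_terms(x[g], y[g]), dsm.ravel()[g], rcond=None)
    # Evaluate it everywhere and subtract to obtain heights above ground
    ground = (poly_terms(x, y) @ coeffs).reshape(dsm.shape)
    ndsm = dsm - ground
    return ndsm, ndsm > min_height
```

Because the ground is modelled as a smooth surface rather than a constant, buildings on sloping terrain still come out with correct heights above ground.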

In recent literature, Filtering and Thresholding based methods are widespread, especially for extracting building outlines from aerial images with edge detectors. Dal Poz & Fernandes (2016) proposed a method for extracting groups of straight lines that represent roof boundaries and ridgelines from high-resolution aerial images, using the corresponding roof polyhedrons derived from Airborne Laser Scanning (ALS) as initial approximations. The method consists of two stages: (i) the boundaries of the ALS polyhedrons are used to limit the search area for candidate straight lines, and (ii) the Steger line detector and the Canny edge detector are applied to the images to identify lines within the interior of the polyhedrons.
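Stage (i), restricting the edge search to the interior of a projected roof polygon, can be sketched as follows. For brevity the edge operator is a plain gradient-magnitude threshold (`numpy.gradient`), a deliberately simplified stand-in for the Canny and Steger detectors; the polygon is assumed to be the polyhedron outline already projected into image (column, row) coordinates, and the function names are hypothetical.

```python
import numpy as np


def polygon_mask(shape, polygon) -> np.ndarray:
    """Boolean mask of pixel centres inside a polygon [(col, row), ...] (even-odd rule)."""
    rows, cols = np.indices(shape)
    px, py = cols + 0.5, rows + 0.5
    inside = np.zeros(shape, dtype=bool)
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does this edge cross the horizontal line through each pixel centre?
        crosses = (y1 > py) != (y2 > py)
        with np.errstate(divide="ignore", invalid="ignore"):
            x_cross = x1 + (py - y1) * (x2 - x1) / (y2 - y1)
        # Toggle pixels whose rightward ray passes through the crossing
        inside ^= crosses & (px < x_cross)
    return inside


def edges_in_polygon(image: np.ndarray, polygon, threshold: float) -> np.ndarray:
    """Flag strong-gradient pixels, keeping only those inside the search polygon."""
    gy, gx = np.gradient(image.astype(float))
    magnitude = np.hypot(gx, gy)
    return (magnitude > threshold) & polygon_mask(image.shape, polygon)
```

Limiting the search area this way both saves computation and suppresses edges from neighbouring objects that fall outside the approximate roof.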

Köhn et al. (2016) proposed a method for detecting and reconstructing building roofs from aerial images. A high-resolution DSM was derived with the SGM algorithm, and the exterior and interior orientations of all images were estimated by a bundle adjustment using GNSS/IMU measurements. First, the DSM was normalized using morphological grayscale reconstruction; the derived nDSM contains the volumes above the ground and thus identifies potential building positions. The Line Segment Detector (LSD) operator is then applied, which identifies straight line segments by region growing over pixels with similar gradient orientation. Buildings were identified under the assumption that they are rectangular. Finally, a RANSAC-based plane fitting procedure is applied to the pixels in each segment to reconstruct the 3D building roofs. Apart from these, curve propagation techniques (snakes, geometric snakes or active contours, deformable models) have shown encouraging results in identifying both buildings and roads (Karantzalos & Argialas, 2009) and hence in reconstruction procedures.
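A generic RANSAC plane fit, of the kind applied to each segment above, repeatedly fits a plane through three random points and keeps the plane with the most inliers. The distance threshold, iteration count and seed below are illustrative defaults, not values from Köhn et al. (2016); a production version would usually refine the winning plane by least squares on its inliers.

```python
import numpy as np


def ransac_plane(points: np.ndarray, threshold: float = 0.1, iterations: int = 200, seed: int = 0):
    """Estimate the dominant plane in an (N, 3) point array with RANSAC.

    Returns (normal, d, inlier_mask) for the plane normal . p + d = 0.
    """
    rng = np.random.default_rng(seed)
    best_inliers = np.zeros(len(points), dtype=bool)
    best_model = None
    for _ in range(iterations):
        # Minimal sample: three distinct points define a candidate plane
        p1, p2, p3 = points[rng.choice(len(points), 3, replace=False)]
        normal = np.cross(p2 - p1, p3 - p1)
        norm = np.linalg.norm(normal)
        if norm < 1e-12:          # collinear sample, no unique plane
            continue
        normal /= norm
        d = -normal @ p1
        # Points closer to the candidate plane than the threshold are inliers
        inliers = np.abs(points @ normal + d) < threshold
        if inliers.sum() > best_inliers.sum():
            best_inliers, best_model = inliers, (normal, d)
    if best_model is None:
        raise ValueError("no non-degenerate sample found")
    return best_model[0], best_model[1], best_inliers
```

Because the score counts only inliers, outliers such as chimneys or vegetation over the roof do not bias the estimated plane, which is the main reason RANSAC is preferred over a plain least squares fit on raw segments.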

As Rottensteiner et al. (2014) concluded when summarizing the outcomes of the ISPRS benchmark on 3D building reconstruction, area-based reconstruction tends to favour lidar data in the form of point clouds or raster DSMs. Segmentation based methods are widely used. Points can be clustered into planes based on similar attributes, such as normal vectors, distance to a locally fitted plane or height similarity, using methods such as region growing algorithms, the 3D Hough transform or the RANSAC algorithm. While many approaches fit planes to extract surfaces, an alternative and under-explored approach is to segment planar features using cross sections, known as scan line segmentation. However, scan line approaches tend to be more computationally expensive than planar detection because of the number of points tested for clustering. Planar segmentation results depend on the correct choice of threshold parameters, such as the neighbourhood used to calculate the attributes, and incorrect results may arise in areas with low point density and complex structures (Rottensteiner et al., 2014). While surface extraction and plane fitting approaches may detect planes accurately and perform well in the presence of noise, they tend to lead to over- and under-segmentation. Research has shown that planes can be extracted by segmenting along cross sections of a surface and then performing region growing. McClune et al. (2014) proposed a methodology to derive the geometry of building boundaries from aerial images. First, the roof level is identified using the DSM. Height differences along 2D cross sections of the DSM are then examined; sections with sharp height differences usually correspond to roof boundaries. The Canny edge detector is used to find additional roof features.
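The cross-section test can be sketched on a gridded DSM: along every row and column, neighbouring cells whose heights differ by more than a threshold are flagged, and the higher cell of each jump is kept as a roof-boundary candidate. The 2 m threshold and the function name are hypothetical, and the Canny refinement step is not included.

```python
import numpy as np


def boundary_candidates(dsm: np.ndarray, min_jump: float = 2.0) -> np.ndarray:
    """Mark the higher cell of every abrupt height change along DSM rows and columns."""
    dsm = dsm.astype(float)
    mask = np.zeros(dsm.shape, dtype=bool)
    # Cross sections along rows: compare each cell with its right-hand neighbour
    row_jump = np.abs(np.diff(dsm, axis=1)) > min_jump
    right_higher = dsm[:, 1:] > dsm[:, :-1]
    mask[:, 1:] |= row_jump & right_higher
    mask[:, :-1] |= row_jump & ~right_higher
    # Cross sections along columns: compare each cell with the cell below it
    col_jump = np.abs(np.diff(dsm, axis=0)) > min_jump
    lower_higher = dsm[1:, :] > dsm[:-1, :]
    mask[1:, :] |= col_jump & lower_higher
    mask[:-1, :] |= col_jump & ~lower_higher
    return mask
```

Keeping the higher cell places the candidate boundary on the roof edge rather than on the ground next to it.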
Omidalizarandi & Saadatseresht (2013) performed region growing on image-based point clouds to form planar segments. However, they found that planar segmentation errors may arise at plane boundaries. These boundary errors can be overcome by combining feature-based and area-based methods, with edges extracted from imagery typically used in a post-processing step to refine the boundaries of planes derived from lidar data. Besides aerial and lidar data, mobile-phone images have recently been used for photogrammetric reconstruction.
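Normal-based region growing of the kind used to form planar segments can be sketched as a breadth-first search: starting from an unlabelled seed, neighbouring points within a radius join the region if their normals differ by less than an angle tolerance. Normals are assumed to be precomputed (for example by PCA over each point's neighbourhood), the neighbourhood search is brute force for brevity, and the radius and angle values are illustrative, not those of the study cited above.

```python
from collections import deque

import numpy as np


def region_growing(points: np.ndarray, normals: np.ndarray,
                   radius: float = 1.5, max_angle_deg: float = 10.0) -> np.ndarray:
    """Label (N, 3) points into smooth segments; normals are (N, 3) unit vectors."""
    n = len(points)
    labels = np.full(n, -1)
    cos_tol = np.cos(np.radians(max_angle_deg))
    # Brute-force pairwise distances: fine for a sketch, use a k-d tree for real data
    dist = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=2)
    current = 0
    for seed in range(n):
        if labels[seed] != -1:
            continue
        labels[seed] = current
        queue = deque([seed])
        while queue:
            i = queue.popleft()
            # Unlabelled neighbours within the radius are candidates for this region
            for j in np.flatnonzero((dist[i] <= radius) & (labels == -1)):
                # abs() tolerates normals that point in opposite directions
                if abs(normals[i] @ normals[j]) >= cos_tol:
                    labels[j] = current
                    queue.append(j)
        current += 1
    return labels
```

Comparing each point with its direct neighbour rather than with the seed lets a region follow gently curving surfaces, which is also why such segmentations can leak across poorly defined plane boundaries.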

Supervised classification methods include deep learning methods (Makantasis et al., 2015), a class of machine learning models that learn a hierarchy of features by building high-level features from low-level ones, thereby automating feature construction for the problem at hand. Examples of deep learning models include Convolutional Neural Networks (CNNs), Stacked Auto-Encoders and Deep Belief Networks. CNNs are deep models that apply trainable filters and pooling operations to the raw input, producing a hierarchy of increasingly complex features. There are two common approaches for training a CNN model: training from scratch with randomly initialized weights, and fine-tuning a pre-trained model. A CNN is a feed-forward neural network based on the multilayer perceptron concept, consisting of a number of convolutional and subsampling layers in an adaptable structure, and it is widely used in pattern recognition and object detection applications. Only a limited number of studies in the literature address the detection and identification of 3D structures with CNNs using remote sensing data. Alexandre (2016) developed a 3D object recognition method based on CNNs using RGB-Depth (RGB-D) data. In this method, a separate CNN is trained for each image channel (red, green, blue and depth), with the weights of each CNN initialized from those of a CNN already trained on another channel; that is, knowledge is transferred between the CNNs for the RGB-D channels.
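The trainable filters and pooling operations described above can be sketched in NumPy as a single forward pass through one convolution, ReLU and max-pooling stage. The ReLU nonlinearity is an assumed, common choice, and the 2×2 kernel is hand-set to respond to vertical edges purely for illustration; in a trained CNN the kernel and bias values are learned, and real networks stack many such layers with many filters each.

```python
import numpy as np


def conv2d(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """'Valid' 2D cross-correlation, the operation a CNN convolutional layer applies."""
    kh, kw = kernel.shape
    oh, ow = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.empty((oh, ow))
    for r in range(oh):
        for c in range(ow):
            out[r, c] = np.sum(image[r:r + kh, c:c + kw] * kernel)
    return out


def max_pool(fmap: np.ndarray, size: int = 2) -> np.ndarray:
    """Non-overlapping max pooling; trailing rows/columns that do not fit are dropped."""
    h, w = fmap.shape[0] // size, fmap.shape[1] // size
    return fmap[:h * size, :w * size].reshape(h, size, w, size).max(axis=(1, 3))


def conv_block(image: np.ndarray, kernel: np.ndarray, bias: float = 0.0) -> np.ndarray:
    """One convolution -> ReLU -> max-pool stage; `kernel` and `bias` would be learned."""
    return max_pool(np.maximum(conv2d(image, kernel) + bias, 0.0))
```

Applied to a 6×6 image that is dark on the left and bright on the right, the vertical-edge kernel `[[-1, 1], [-1, 1]]` produces a 2×2 map that is non-zero only in the column containing the edge; stacking such stages is what builds the feature hierarchy.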

Many researchers have used multi-class Support Vector Machine (SVM) classification to detect land use in urban areas from aerial or high-resolution satellite images. SVM is a supervised, non-parametric statistical learning technique (Vapnik, 1995; Burges, 1998). SVMs require training data that optimize the separation of the classes rather than describe the classes themselves. Training an SVM with a Gaussian Radial Basis Function (RBF) kernel requires setting two parameters: the regularization parameter (usually denoted C), which controls the trade-off between maximizing the margin and minimizing the training error, and the kernel width (usually set through the parameter γ). Four types of kernels are commonly used with SVM classifiers: linear, polynomial, RBF and sigmoid (Sarp et al., 2014), of which the RBF kernel works well in most cases. Tuia et al. (2010) performed SVM classification with composite kernels on high-resolution urban images and concluded that using spatial information significantly increased classification accuracy. Sarp et al. (2014) proposed a method for automatically detecting buildings, and changes in buildings after an earthquake, from orthophoto images and point clouds derived from stereo matching. In the first step, the high-resolution pre- and post-event Red-Green-Blue (RGB) orthophoto images (orthoRGB) were classified with an SVM to extract the building areas. In the second step, a normalized Digital Surface Model (nDSM) band, derived from the point clouds and a Digital Terrain Model (DTM), was integrated into the SVM classification (nDSM + orthoRGB). Finally, building damage was assessed by comparing two independent classification results from the pre- and post-event data. The main conclusion was that using the elevation information from the point cloud as an additional band, alongside the spectral information, leads to a significant increase in accuracy.

Vakalopoulou et al. (2015) developed a method for detecting buildings in very high resolution satellite images. In this process, feature vectors were used to train a binary SVM classifier that separates building from non-building objects, and a Markov Random Field (MRF) model was then used to obtain the final classification. Zhang et al. (2015) proposed a search algorithm based on Bayesian optimization to localize objects with high accuracy, together with a deep learning framework that combines a structured SVM objective function with a CNN classifier. Their results on the PASCAL VOC 2007 and 2012 benchmarks showed a significant improvement in detection performance. Alidoost & Arefi (2016) proposed a new approach for the automatic recognition of building roof models (e.g. flat, gable and hipped roofs) based on Deep Learning methods, using LIDAR data and aerial orthophotos.

The last group of modelling approaches, hybrid methods, combines model-driven and data-driven algorithms to reach an optimal solution in which each method compensates for the weaknesses of the other. Several investigations of hybrid methods have been conducted with satisfactory results (Nguatem et al., 2016).
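The four SVM kernel types, the role of C and γ, and the nDSM band used as an extra feature in the SVM discussion above can be sketched in plain Python as follows. This is an illustration under stated assumptions, not the implementation of Sarp et al. (2014) or of any other cited study: the kernel formulas follow the common LIBSVM-style parameterization (γ, coef0, degree), the support vectors, coefficients and pixel values are hypothetical numbers standing in for a trained model, and training itself (where the regularization parameter C enters) is not shown.

```python
import math
from functools import partial
from typing import Callable, List, Sequence

Vector = Sequence[float]
Grid = List[List[float]]


# The four kernel types named in the text (LIBSVM-style parameters).
def linear_kernel(u: Vector, v: Vector) -> float:
    return sum(a * b for a, b in zip(u, v))


def polynomial_kernel(u: Vector, v: Vector, gamma: float = 1.0,
                      coef0: float = 0.0, degree: int = 3) -> float:
    return (gamma * linear_kernel(u, v) + coef0) ** degree


def rbf_kernel(u: Vector, v: Vector, gamma: float = 1.0) -> float:
    # gamma sets the kernel width: gamma = 1 / (2 * sigma**2),
    # so a larger gamma means a narrower Gaussian.
    sq_dist = sum((a - b) ** 2 for a, b in zip(u, v))
    return math.exp(-gamma * sq_dist)


def sigmoid_kernel(u: Vector, v: Vector, gamma: float = 1.0,
                   coef0: float = 0.0) -> float:
    return math.tanh(gamma * linear_kernel(u, v) + coef0)


def ndsm(dsm: Grid, dtm: Grid) -> Grid:
    """Normalized DSM: per-pixel height above the terrain (DSM - DTM)."""
    return [[s - t for s, t in zip(srow, trow)] for srow, trow in zip(dsm, dtm)]


def pixel_features(r: Grid, g: Grid, b: Grid, height: Grid) -> List[List[float]]:
    """One (R, G, B, nDSM) feature vector per pixel, in row-major order."""
    return [[r[i][j], g[i][j], b[i][j], height[i][j]]
            for i in range(len(r)) for j in range(len(r[0]))]


def svm_decision(x: Vector, support_vectors: Sequence[Vector],
                 dual_coefs: Sequence[float], bias: float,
                 kernel: Callable[[Vector, Vector], float]) -> float:
    """f(x) = sum_i alpha_i * y_i * K(x_i, x) + b; the sign gives the class.

    dual_coefs holds alpha_i * y_i. During training, each alpha_i is bounded
    by the regularization parameter C (0 <= alpha_i <= C); training is not shown.
    """
    return sum(c * kernel(sv, x)
               for sv, c in zip(support_vectors, dual_coefs)) + bias


# Hypothetical 2x2 tile: DSM/DTM in metres, RGB scaled to [0, 1].
height = ndsm([[105.0, 112.0], [104.5, 111.5]],
              [[104.0, 104.0], [104.0, 104.0]])  # [[1.0, 8.0], [0.5, 7.5]]
r = [[0.3, 0.5], [0.3, 0.5]]
g = [[0.6, 0.5], [0.6, 0.5]]
b = [[0.2, 0.5], [0.2, 0.5]]

# Hypothetical "trained" model: one building and one ground support vector.
svs = [[0.5, 0.5, 0.5, 8.0], [0.3, 0.6, 0.2, 0.0]]
coefs = [1.0, -1.0]  # alpha_i * y_i (building = +1, non-building = -1)
rbf = partial(rbf_kernel, gamma=0.1)

labels = [1 if svm_decision(x, svs, coefs, 0.0, rbf) > 0 else 0
          for x in pixel_features(r, g, b, height)]
print(labels)  # [0, 1, 0, 1]  (1 = building)
```

Because the nDSM is in metres while the RGB values here are scaled to [0, 1], the height band dominates the RBF distance in this toy example; in practice the bands would normally be rescaled before training, and γ and C tuned, for example by cross-validation.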

References

Alexandre, L.A., 2016. 3D object recognition using convolutional neural networks with transfer learning between input channels. In: Intelligent Autonomous Systems 13, Springer International Publishing, pp. 889-898.

Alidoost, F. & Arefi, H., 2016. Knowledge Based 3d Building Model Recognition Using Convolutional Neural Networks from LIDAR and Aerial Imageries. In: ISPRS International Archives of Photogrammetry, Remote Sensing and Spatial Information Sciences, vol. XLI-B3, pp. 833-840.

Becker, S. & Haala, N., 2009. Grammar supported facade reconstruction from mobile lidar mapping. In: ISPRS Workshop, CMRT09-City Models, Roads and Traffic, vol. 38, 13 p.

Brenner, C., 2005. Building reconstruction from images and laser scanning. In: Int. J. Appl. Earth Obs. Geoinf., vol. 6(3-4), pp. 187–198.

Burges, C.J.C., 1998. A tutorial on support vector machines for pattern recognition. Data Mining and Knowledge Discovery, vol. 2, pp. 121-167. doi: http://dx.doi.org/10.1023/A:1009715923555.

Dimopoulou, E., Karki, S., Miodrag, R., Almeida, J.P.D., Griffith-Charles, C., Thompson, R., Ying, S. & Oosterom, P., 2016. Initial Registration of 3D Parcels. In: 5th International FIG 3D Cadastre Workshop, Athens, Greece, pp. 105-132.

Elgan, M., 2011. Kinect: Microsoft’s accidental success story. http://www.computerworld.com/s/article/9217737/Kinect_Microsoft_s_accidental_success_story (accessed 11/01/2017).

Fan, H. & Zipf, A., 2016. Modelling the world in 3D from VGI/Crowdsourced data. In: Capineri, C., Haklay, M., Huang, H., Antoniou, V., Kettunen, J., Ostermann, F. & Purves, R. (eds.) European Handbook of Crowdsourced Geographic Information, pp. 435–446, London: Ubiquity Press.

Goodchild, M.F., 2007a. Citizens as sensors: the world of volunteered geography. GeoJournal, vol. 69(4), pp. 211–221.

Goodchild, M.F., 2007b. Citizens as Voluntary Sensors: Spatial Data Infrastructure in the World of Web 2.0. International Journal of Spatial Data Infrastructures Research, vol. 2, pp. 24–32.

Gröger, G., Kolbe, T. H., Czerwinski, A. & Nagel, C., 2008. OpenGIS® City Geography Markup Language (CityGML) Implementation Specification. Available at: www.opengeospatial.org/legal/.

Hadjiprocopis, A., Ioannides, M., Wenzel, K., Rothermel, M., Johnsons, P. S., Fritsch, D. & Weinlinger, G., 2014. 4D reconstruction of the past: the image retrieval and 3D model construction pipeline. In: Second International Conference on Remote Sensing and Geoinformation of the Environment (RSCy2014), International Society for Optics and Photonics, vol. 9229, 16 p., doi:10.1117/12.2065950.

Hartmann, W., Havlena, M. & Schindler, K., 2016. Towards complete, geo-referenced 3d models from crowd-sourced amateur images. In: ISPRS Annals of Photogrammetry, Remote Sensing and Spatial Information Sciences, vol. III, pp. 51-58.

Karantzalos, K. & Argialas, D., 2009. A region-based level set segmentation for automatic detection of man-made objects from aerial and satellite images. Photogrammetric Engineering & Remote Sensing, vol. 75(6), pp. 667-677.

Karantzalos, K. & Paragios, N., 2010. Large-scale building reconstruction through information fusion and 3-d priors. IEEE Transactions on Geoscience and Remote Sensing, vol. 48(5), pp. 2283-2296.

Kendzi, 2011. 3d plug-in for josm. http://wiki.openstreetmap.org/wiki/Kendzi3d (accessed 10/01/2017).

Köhn, A., Tian, J. & Kurz, F., 2016. Automatic Building Extraction and Roof Reconstruction in 3k Imagery Based on Line Segments. In: ISPRS International Archives of Photogrammetry, Remote Sensing and Spatial Information Sciences, vol. XLI-B3, pp. 625-631.

Leberl, F., 2010. Time for neo-photogrammetry. GIS Development, vol. 14(2), pp. 22-24.

McKay, A., Chase, S.C., Shea, K. & Chau, H.H., 2012. Spatial grammar implementation: From theory to useable software. AI EDAM (Artificial Intelligence for Engineering Design, Analysis and Manufacturing), vol. 26, pp. 143–159.

Makantasis, K., Karantzalos, K., Doulamis, A. & Loupos, K., 2015. Deep Learning-Based Man-Made Object Detection from Hyperspectral Data. In: International Symposium on Visual Computing, Springer International Publishing, pp. 717-727.

McClune, A.P., Mills, J.P., Miller, P.E. & Holland, D.A., 2016. Automatic 3d Building Reconstruction from a Dense Image Matching Dataset. In: ISPRS International Archives of Photogrammetry, Remote Sensing and Spatial Information Sciences, vol. XLI-B3, pp. 641-648.

Müller, P., Wonka, P., Haegler, S., Ulmer, A. & van Gool, L., 2006. Procedural modeling of buildings. In: ACM Transactions on Graphics (TOG), vol. 25(3), pp. 614-623, doi:10.1145/1179352.1141931.

Nguatem, W., Drauschke, M. & Mayer H., 2016. Automatic Generation of Building Models with Levels of Detail 1-3. In: ISPRS International Archives of Photogrammetry, Remote Sensing and Spatial Information Sciences, vol. XLI-B3, pp. 649-654.

Omidalizarandi, M. & Saadatseresht, M., 2013. Segmentation and Classification of Point Clouds from Dense Aerial Image Matching. The International Journal of Multimedia & Its Applications, vol. 5(4), pp. 33-50.

OSM, 2016. OpenStreetMap Wiki. http://wiki.openstreetmap.org/wiki/Statistics (accessed 28/12/2016).

Over, M., Schilling, A., Neubauer, S. & Zipf, A., 2010. Generating web-based 3D City Models from OpenStreetMap: The current situation in Germany. Computers, Environment and Urban Systems (CEUS), vol. 34(6), pp. 496–507.

Rottensteiner, F., Sohn, G., Gerke, M., Wegner, J.D., Breitkopf, U. & Jung, J., 2014. Results of the ISPRS benchmark on urban object detection and 3D building reconstruction. ISPRS Journal of Photogrammetry and Remote Sensing, vol. 93, pp. 256-271.

Sarp, G., Erener, A., Duzgun, S. & Sahin, K., 2014. An approach for detection of buildings and changes in buildings using orthophotos and point clouds: A case study of Van Erciş earthquake. European Journal of Remote Sensing, vol. 47, pp. 627-642.

Somogyi, A., Barsi, A., Molnar, B. & Lovas, T., 2016. Crowdsourcing based 3d modeling. International Archives of Photogrammetry, Remote Sensing & Spatial Information Sciences, vol. XLI-B5, pp. 587-590.

Uden, M. & Zipf, A., 2013. Open building models: Towards a platform for crowdsourcing virtual 3D cities. In: Progress and New Trends in 3D Geoinformation Sciences, pp. 299-314, Springer Berlin Heidelberg.

Vakalopoulou, M., Karantzalos, K., Komodakis, N. & Paragios, N., 2015. Building detection in very high resolution multispectral data with deep learning features. In: IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Milan, Italy, pp. 1873-1876.

Vapnik, V.N., 1995. The Nature of Statistical Learning Theory. New York: Springer Verlag. doi: http://dx.doi.org/10.1007/978-1-4757-2440-0.

Wang, J., Wang, W., Li, X., Cao, Z., Zhu, H., Li, M., He, B. & Zhao, Z., 2016. Line Matching Algorithm for Aerial Image Combining image and object space similarity constraints. In: ISPRS International Archives of Photogrammetry, Remote Sensing and Spatial Information Sciences, vol. XLI-B3, pp. 783-788.

Yu, Q., Helmholz, P. & Belton, D., 2016. Evaluation of Model Recognition for Grammar-Based Automatic 3d Building Model Reconstruction. In: ISPRS International Archives of Photogrammetry, Remote Sensing and Spatial Information Sciences, vol. XLI-B4, pp. 63-69.

Yu, Q., Helmholz, P., Belton, D. & West, G., 2014. Grammar-based Automatic 3D Model Reconstruction from Terrestrial Laser Scanning Data. In: ISPRS International Archives of Photogrammetry, Remote Sensing and Spatial Information Sciences, vol. XL-4, pp. 335-340.

Zhang, Y., Sohn, K., Villegas, R., Pan, G. & Lee, H., 2015. Improving object detection with deep convolutional networks via Bayesian optimization and structured prediction. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 249-258.

To be continued in the next issue.

The paper was presented at FIG Working Week 2017, Helsinki, Finland, 29 May – 2 June 2017.
