
Segmentation of 3D photogrammetric point cloud

This study presents research in progress, which focuses on the segmentation and classification of 3D point clouds and orthoimages to generate 3D urban models


3D modeling of cities has become very important, as these models are used in many applications, including energy management, visibility analysis, 3D cadastre, urban planning, change detection and disaster management (Biljecki et al., 2015). 3D building models can be considered one of the most important entities in 3D city models, and there are numerous ongoing studies from different disciplines, the vast majority by researchers from geomatics and computer science.

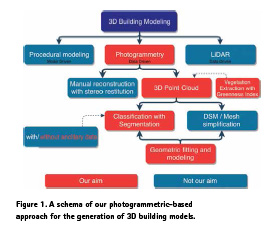

The two main concepts for reconstructing 3D building models are procedural modeling (Musialski et al., 2013; Parish and Müller, 2001) and reality-based modeling (Toschi et al., 2017a), the latter including photogrammetry and Airborne Laser Scanning (Fig. 1). Procedural modeling is based on creating rules (procedures) that reconstruct 3D models automatically (e.g. from the dimensions and the location of the starting point of a rectangular prism). Reality-based modeling approaches, on the other hand, rely on data gathered with 3D surveying techniques and derive 3D geometries from the surveyed data. While procedural modeling offers the main advantages of data compression and reduced hardware usage, it comes at two important costs: low metric accuracy and limited control over the model, especially for complex structures.
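To make the idea of a procedural rule concrete, the following is a minimal sketch (not taken from any of the cited systems) in which a single rule turns a starting point and three dimensions into a rectangular prism. The function name `prism_from_rule` and the face ordering are hypothetical choices made for illustration.

```python
from itertools import product


def prism_from_rule(origin, width, depth, height):
    """Return (vertices, faces) of an axis-aligned rectangular prism.

    Hypothetical rule: the whole building is described by one starting
    point and three dimensions, which is why procedural models are compact.
    """
    ox, oy, oz = origin
    # product() enumerates the 8 corners; corner index = 4*i + 2*j + k
    vertices = [
        (ox + i * width, oy + j * depth, oz + k * height)
        for i, j, k in product((0, 1), repeat=3)
    ]
    # Each face is a closed loop of four corner indices.
    faces = [
        (0, 1, 3, 2),  # x = ox
        (4, 5, 7, 6),  # x = ox + width
        (0, 1, 5, 4),  # y = oy
        (2, 3, 7, 6),  # y = oy + depth
        (0, 2, 6, 4),  # z = oz (floor)
        (1, 3, 7, 5),  # z = oz + height (flat roof)
    ]
    return vertices, faces


vertices, faces = prism_from_rule((10.0, 20.0, 0.0), 8.0, 6.0, 12.0)
```

Four numbers and a point are enough to regenerate the geometry, which illustrates the compression advantage; the same compactness is also why such a rule cannot capture surveyed deviations from the ideal shape.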

Many approaches for 3D building modeling presented in the literature rely on point clouds (Haala and Kada, 2010; He et al., 2012; Lafarge and Mallet, 2012; Sampath and Shan, 2010), often coupled with ancillary data such as building footprints. However, reliable footprints are not always available. Moreover, these existing methodologies have been found not to fully exploit the accuracy potential of the sensor data (Rottensteiner et al., 2014). For these reasons, we are motivated to develop a methodology that reconstructs 3D building models without relying on such ancillary data. The method focuses on the segmentation of photogrammetric point clouds and RGB orthophotos for the subsequent reconstruction of 3D building models. Using a semi-automated approach, we detect vegetation (and/or other) classes in the image and mask (separate) these regions in the point cloud.

With these regions separated, it becomes easier to segment and classify the rest of the point cloud in order to extract the buildings. The paper proposes a methodology to extract and model buildings from photogrammetric point clouds segmented with the support of an orthophoto. After a review of related work, the developed methodology is presented. Results are discussed at the end of the paper.

Related work

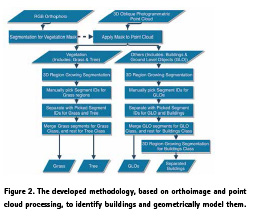

In recent years, thanks to the availability of dense point clouds from LiDAR sensors or automated image matching (Remondino et al., 2014), there have been many studies on 3D building reconstruction from dense point clouds. Most of them are based on the extraction of roofs, generally using ancillary data such as building footprints, followed by the fitting of geometric primitives (Dorninger and Pfeifer, 2008; Malihi et al., 2016; Vosselman and Dijkman, 2001; Xiong et al., 2014). As our approach (Fig. 2) is based on orthophoto and point cloud segmentation, the next sections briefly review the state of the art of such methods.

Image segmentation

The automatic analysis and segmentation of terrestrial, aerial and satellite 2D images into semantically defined classes (often referred to as “image classification” or “semantic labeling”) has been an active area of research for photogrammetry, remote sensing and computer vision scientists for more than 30 years (Bajcsy and Tavakoli, 1976; Chaudhuri et al., 2018; Duarte et al., 2017; Kluckner et al., 2009; Teboul et al., 2010; Tokarczyk et al., 2015). In the literature, image classification methods are normally divided into per-pixel approaches and object-based analyses (the latter often called GEOBIA – Geographic Object Based Image Analysis) (Blaschke et al., 2014; Morel and Solimini, 2012). Semantically interpreted images, i.e. thematic raster maps of building envelopes, forests or entire urban areas, are important for many tasks, such as mapping and navigation, trip planning, urban planning, environmental monitoring, traffic analysis, road network extraction, change detection and restoration. In spite of a large number of publications, the task is far from solved. Indeed, Earth areas exhibit a large variety of reflectance patterns, with large intra-class variations and often also low inter-class variation (Montoya-Zegarra et al., 2015). The situation gets even more challenging when dealing with high-resolution aerial (less than 20 cm) and terrestrial images, where intra-class variability increases as many more small objects (street elements, façade structures, road signs and markings, car details, etc.) become visible.

Segmentation and classification of 2D (geospatial) data is normally performed either with data-driven / supervised approaches based on classifiers such as random forests, Markov Random Fields (MRF), Conditional Random Fields (CRF), Support Vector Machines (SVM), Convolutional Neural Networks (CNN), AdaBoost or the maximum likelihood classifier (Schindler, 2012), or with unsupervised approaches based on K-means, Fuzzy c-means, AUTOCLASS, DBSCAN or expectation maximization (Estivill-Castro, 2002).

Point cloud segmentation

Point cloud segmentation is another challenging task, as in most cases it involves a vast amount of complex data. Different methodologies have been developed to address it (Nguyen and Le, 2013; Woo et al., 2002). While some rely on machine learning (Hackel et al., 2017; Kanezaki et al., 2016; Qi et al., 2017; Wu et al., 2015), others rely on geometric computations, such as sample consensus (Fischler and Bolles, 1981), the combination of images and 3D data (Adam et al., 2018), or region growing (Ushakov, 2018). There are also various studies on the classification of aerial photogrammetric 3D point clouds (Becker et al., 2017), the segmentation of unstructured point clouds (Dorninger and Nothegger, 2007), and the segmentation of LiDAR point clouds (Douillard et al., 2011; Macher et al., 2017; Ramiya et al., 2017).

3D building models

Our aim is to reconstruct Level of Detail 2 (LoD2; Biljecki et al., 2016) 3D building models with an optimum number of vertices. For this reason, we do not use a method that creates a mesh from all the available points in the point cloud. Instead, we prefer a method that generates lightweight polygonal surfaces. The literature contains different kinds of 3D building reconstruction methodologies, which can be classified according to the data or approach used: footprints (Müller et al., 2006), sparse point clouds, procedural modeling (Müller et al., 2007; Parish and Müller, 2001; Vanegas et al., 2010), combined methodologies (Müller Arisona et al., 2013), hybrid representation (Hu et al., 2018) and deep learning approaches (Wichmann et al., 2018).

Data and methodology

We propose an automated methodology that aims to extract buildings from photogrammetric point clouds for 3D reconstruction purposes. The method combines a series of processes including: (i) vegetation masking through orthoimage segmentation, (ii) point cloud segmentation (vegetation, buildings, streets, ground level objects – GLO) with the aid of the image masking results and (iii) 3D reconstruction of the building class.

Employed data

We used two different datasets for developing and testing our method.

The first is derived from the ISPRS benchmark dataset of Dortmund City Center (Nex et al., 2015). The original dataset contains data from different sensors, including terrestrial and aerial laser scanners; we used only the point cloud generated from the oblique images acquired with the IGI PentaCam, with a GSD of 10 cm in the nadir images and 8-12 cm in the oblique views. The average density of the cloud is ca. 50 pts/sqm.

The second dataset was flown over the city of Bergamo (Italy) with a Vexcel UltraCam Osprey Prime by AVT Terramessflug, with an average GSD of 12 cm for both nadir and oblique images (Gerke et al., 2016; Toschi et al., 2017b). The resulting dense point cloud has an average density of 30 pts/sqm.

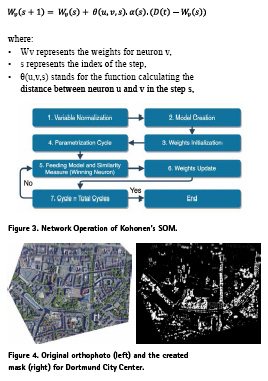

Segmentation of the orthophoto

We used Kohonen’s Self-Organizing Map (SOM, Fig. 3), an Artificial Neural Network (ANN) designed for clustering the input data into a number of clusters defined by the number of layers (González-Cuéllar and Obregón-Neira, 2013). By design, the SOM is trained in an unsupervised way, and through this training the network generates a map of the given data (Kohonen et al., 2001). The neuron’s weights are calculated as:
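In its standard textbook form, the Kohonen weight update can be written as below. The notation is an assumption made here for illustration and may differ from the one used by the authors: $x(t)$ is the input vector at step $t$, $w_j$ the weight vector of neuron $j$, $c$ the winning neuron, $\alpha(t)$ a decreasing learning rate, and $h_{cj}(t)$ a neighbourhood function of width $\sigma(t)$ over the neuron positions $r_j$.

```latex
\begin{aligned}
c &= \arg\min_{j} \lVert x(t) - w_j(t) \rVert, \\
h_{cj}(t) &= \exp\!\left( -\frac{\lVert r_c - r_j \rVert^{2}}{2\,\sigma(t)^{2}} \right), \\
w_j(t+1) &= w_j(t) + \alpha(t)\, h_{cj}(t)\, \bigl[ x(t) - w_j(t) \bigr].
\end{aligned}
```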

The datasets used in our tests have very high resolution, and pixel-based segmentation and classification are, in most cases, not ideal for such imagery. Yet, as our only goal in image segmentation is to separate vegetation from everything else, a pixel-based segmentation met our needs. To segment the orthophotos, a SOM network with 9 layers is generated and the image data is arranged as a data matrix in which each row vector represents one band of the image. The original orthophoto and the generated vegetation masks for the Dortmund dataset are shown in Figure 4.
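The pixel-based clustering described above can be sketched with a small one-dimensional SOM written in NumPy. This is an illustration under stated assumptions, not the implementation used in the study: the 9 clusters are modelled as 9 neurons on a line, the decay schedules are arbitrary, the image is synthetic, and the rule that flags vegetation clusters (a green-dominance score `2G - R - B` above a fixed threshold) is a hypothetical stand-in for the semi-automated class selection.

```python
import numpy as np


def train_som(pixels, n_neurons=9, epochs=5, lr0=0.5, sigma0=2.0, seed=0):
    """Train a 1-D SOM on an (N, bands) array; returns (n_neurons, bands) weights."""
    rng = np.random.default_rng(seed)
    # Initialise the neurons from randomly chosen pixels.
    weights = pixels[rng.choice(len(pixels), n_neurons, replace=False)].astype(float)
    grid = np.arange(n_neurons)  # neuron positions along the 1-D map
    n_steps, step = epochs * len(pixels), 0
    for _ in range(epochs):
        for x in pixels[rng.permutation(len(pixels))]:
            frac = step / n_steps
            lr = lr0 * (1.0 - frac)                # decaying learning rate
            sigma = sigma0 * (1.0 - frac) + 0.3    # shrinking neighbourhood
            winner = np.argmin(np.linalg.norm(weights - x, axis=1))
            h = np.exp(-((grid - winner) ** 2) / (2.0 * sigma ** 2))
            weights += lr * h[:, None] * (x - weights)
            step += 1
    return weights


def assign_clusters(pixels, weights):
    """Index of the closest neuron for every pixel."""
    d = np.linalg.norm(pixels[:, None, :] - weights[None, :, :], axis=2)
    return np.argmin(d, axis=1)


# Synthetic 40 x 40 RGB "orthophoto": left half greenish, right half greyish.
rng = np.random.default_rng(1)
height, width = 40, 40
image = np.empty((height, width, 3))
image[:, :20] = (40, 160, 50)
image[:, 20:] = (120, 120, 125)
image += rng.normal(0.0, 5.0, image.shape)

band_matrix = image.reshape(-1, 3).T   # one row per band, as in the text
pixels = band_matrix.T                 # one row per pixel for training
weights = train_som(pixels)
labels = assign_clusters(pixels, weights)

# Hypothetical selection rule: clusters with strong green dominance are vegetation.
veg_neurons = (2 * weights[:, 1] - weights[:, 0] - weights[:, 2]) > 100
mask = veg_neurons[labels].reshape(height, width)
```

On real orthophotos the choice of which clusters form the vegetation class would need inspection by an operator, which is where the semi-automated character of the approach comes in.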

Segmentation of the 3D point cloud

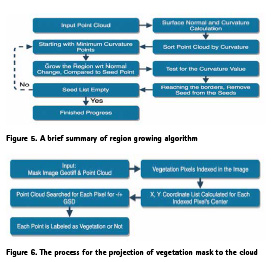

The region growing segmentation algorithm built into the Point Cloud Library (PCL) (Rusu and Cousins, 2011) is used to segment the 3D point cloud, with the aim of classifying it into buildings and GLOs. The algorithm (Fig. 5) detects points that together form a smooth surface, which is decided by comparing the surface normals of neighbouring points.

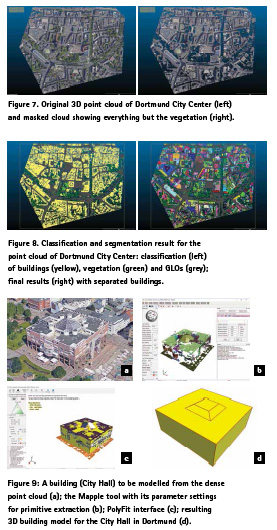

To make this comparison, the algorithm first computes a curvature value for each point, which is based on the normal. As the points with minimum curvature lie in planar regions, all points are sorted by curvature in order to select the seed points with the lowest values. Regions are grown from these seeds, and points are labelled until no unlabelled points are left. Before applying the region growing segmentation to the point cloud, we project the previously generated vegetation mask onto the point cloud (Fig. 6). This allows us to label points as vegetation or non-vegetation and to automatically generate a masked 3D point cloud (Fig. 7).
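The projection of a raster mask onto the points can be sketched as a lookup of each point's planimetric coordinates in the mask grid. The sketch assumes a north-up orthophoto whose upper-left corner (`x_min`, `y_max`) and ground sampling distance (`gsd`) are known; these parameter names and the function `label_points_with_mask` are hypothetical.

```python
import numpy as np


def label_points_with_mask(points, mask, x_min, y_max, gsd):
    """Return a boolean vegetation flag per point from a north-up raster mask.

    points : (N, 3) array of X, Y, Z in the orthophoto's coordinate system
    mask   : (rows, cols) boolean array, True where the pixel is vegetation
    """
    cols = np.floor((points[:, 0] - x_min) / gsd).astype(int)
    rows = np.floor((y_max - points[:, 1]) / gsd).astype(int)  # image rows grow southwards
    inside = (rows >= 0) & (rows < mask.shape[0]) & (cols >= 0) & (cols < mask.shape[1])
    is_vegetation = np.zeros(len(points), dtype=bool)  # points off the image stay unflagged
    is_vegetation[inside] = mask[rows[inside], cols[inside]]
    return is_vegetation


# Toy example: 4 x 4 mask with vegetation in the upper-left 2 x 2 block.
mask = np.zeros((4, 4), dtype=bool)
mask[:2, :2] = True
points = np.array([
    [100.2, 201.9, 5.0],   # falls in row 0, col 0
    [101.8, 200.1, 3.0],   # falls in row 3, col 3
    [99.0, 201.0, 0.0],    # west of the image
    [100.6, 201.3, 1.0],   # falls in row 1, col 1
])
flags = label_points_with_mask(points, mask, x_min=100.0, y_max=202.0, gsd=0.5)
masked_cloud = points[~flags]  # non-vegetation points passed on to region growing
```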

The region growing algorithm is then applied to the masked point cloud, adjusting the minimum and maximum number of points per cluster, the normal change threshold and the curvature threshold. This allows us to distinguish buildings, streets and GLOs by assigning a different ID to each point. Merging all segments, a classified and segmented point cloud is obtained (Fig. 8).
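The logic of normal-based region growing can be illustrated with a compact NumPy sketch. It follows the general scheme described above (seeds taken in order of increasing curvature, growth controlled by a normal-angle threshold and a curvature threshold) but it is not PCL's code: neighbours are found by brute force instead of a k-d tree, only a minimum cluster size is enforced, and all parameter names and default values are assumptions.

```python
import numpy as np


def estimate_normals(points, k=8):
    """Brute-force k-nearest neighbours, PCA normals and a curvature proxy."""
    d = np.linalg.norm(points[:, None] - points[None], axis=2)
    nbrs = np.argsort(d, axis=1)[:, :k]          # each row includes the point itself
    normals = np.empty_like(points, dtype=float)
    curv = np.empty(len(points))
    for i, idx in enumerate(nbrs):
        evals, evecs = np.linalg.eigh(np.cov(points[idx].T))
        normals[i] = evecs[:, 0]                 # direction of least variance
        curv[i] = evals[0] / evals.sum()         # surface-variation measure
    return nbrs, normals, curv


def region_growing(points, k=8, angle_thresh_deg=5.0, curv_thresh=0.05, min_size=10):
    """Return one region ID per point (-1 for points in rejected small regions)."""
    nbrs, normals, curv = estimate_normals(points, k)
    cos_thresh = np.cos(np.radians(angle_thresh_deg))
    UNLABELLED, REJECTED = -1, -2
    labels = np.full(len(points), UNLABELLED)
    next_label = 0
    for seed in np.argsort(curv):                # flattest points are tried first
        if labels[seed] != UNLABELLED:
            continue
        labels[seed] = next_label
        region, seeds = [seed], [seed]
        while seeds:
            s = seeds.pop()
            for n in nbrs[s]:
                if labels[n] != UNLABELLED:
                    continue
                if abs(normals[s] @ normals[n]) < cos_thresh:
                    continue                     # normals differ too much
                labels[n] = next_label
                region.append(n)
                if curv[n] < curv_thresh:
                    seeds.append(n)              # smooth enough to keep growing from
        if len(region) < min_size:
            labels[region] = REJECTED
        else:
            next_label += 1
    labels[labels == REJECTED] = UNLABELLED
    return labels


# Toy cloud: a horizontal "roof" patch and a distant vertical "wall" patch.
g = np.arange(10) * 0.1
xx, yy = np.meshgrid(g, g)
roof = np.column_stack([xx.ravel(), yy.ravel(), np.zeros(100)])
wall = np.column_stack([np.full(100, 5.0), xx.ravel(), yy.ravel()])
cloud = np.vstack([roof, wall])
region_ids = region_growing(cloud)
```

The brute-force distance matrix limits this sketch to small clouds; the thresholds play the same role as the parameters that currently have to be tuned by hand in the workflow.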

3D building modeling

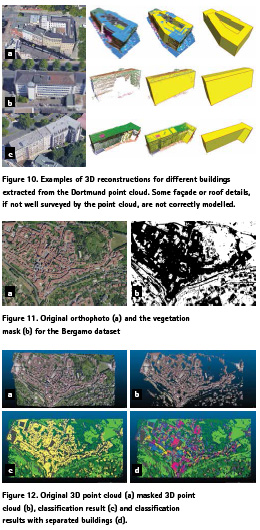

Once building structures are identified in the point cloud, the geometric modeling is performed using the Mapple (Nan, 2018) and PolyFit (Nan and Wonka, 2017) tools. Mapple is a generic point cloud tool that can handle normal estimation, downsampling, interactive editing and other functions. Here it is used to extract planar segments from the point cloud with the RANSAC algorithm (Fig. 9a). Then, taking these preliminary planes as candidate faces, PolyFit creates an optimized subset based on the angle between adjacent planes (θ < 10°) and on the minimum number of points that can support both segments (the smaller of the point counts of segments 1 and 2, divided by 5). Using this optimum subset of faces, a face selection is performed (Fig. 9c) based on the following parameters:

– Fitting, i.e. a measure for the fitting quality between the point cloud and the faces, calculated with respect to the percentage of points that are not used for the final model;

– Point coverage, i.e. a fraction related to bare areas in the model, calculated with respect to the surface areas, candidate faces and 2D α-shapes, which is basically a projection of points to the plane;

– Model complexity, i.e. a term to consider the holes and outgrowths, calculated as a ratio of sharp edges and total amount of intersections of the pairs.
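As a reading aid, the three terms can be written in the form below, following our recollection of the formulation in Nan and Wonka (2017); the exact notation should be checked against that paper. Here $x_i \in \{0, 1\}$ indicates whether candidate face $f_i$ is selected, $|P|$ is the number of points, $\mathrm{support}(f_i)$ the confidence-weighted number of points supporting $f_i$, $M_i^{\alpha}$ the α-shape of the points projected onto $f_i$, $\mathrm{area}(M)$ the surface area of the model, $|E|$ the number of pairwise intersections, and $\mathrm{corner}(e_i)$ an indicator that is 1 when edge $e_i$ is a sharp edge.

```latex
\begin{aligned}
E_f &= 1 - \frac{1}{|P|} \sum_{i=1}^{N} x_i \,\mathrm{support}(f_i)
      && \text{(fitting)} \\
E_c &= \frac{1}{\mathrm{area}(M)} \sum_{i=1}^{N} x_i
       \bigl( \mathrm{area}(f_i) - \mathrm{area}(M_i^{\alpha}) \bigr)
      && \text{(point coverage)} \\
E_m &= \frac{1}{|E|} \sum_{i=1}^{|E|} \mathrm{corner}(e_i)
      && \text{(model complexity)} \\
\min_{X} \;& \lambda_f E_f + \lambda_c E_c + \lambda_m E_m
\end{aligned}
```

The weights $\lambda_f$, $\lambda_c$ and $\lambda_m$ correspond to the adjustable parameters of the face selection.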

These parameters can be adjusted in an iterative way during the 3D reconstruction process, which includes refinement, hypothesizing, confidence calculations and optimization procedures. Figure 9d and Figure 10 show examples of derived 3D building models from the Dortmund point cloud.
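The RANSAC-based extraction of a planar segment, which provides the candidate faces for the steps above, can be sketched as follows. This is a generic single-plane RANSAC in NumPy, not Mapple's implementation; the function name, the distance threshold and the iteration count are assumptions.

```python
import numpy as np


def ransac_plane(points, dist_thresh=0.05, n_iter=200, seed=0):
    """Fit one plane n.p + d = 0 by RANSAC; returns ((normal, d), inlier_mask)."""
    rng = np.random.default_rng(seed)
    best_inliers = np.zeros(len(points), dtype=bool)
    best_model = None
    for _ in range(n_iter):
        # Minimal sample: three distinct points define a plane.
        p0, p1, p2 = points[rng.choice(len(points), 3, replace=False)]
        normal = np.cross(p1 - p0, p2 - p0)
        norm = np.linalg.norm(normal)
        if norm < 1e-12:
            continue  # (nearly) collinear sample, no unique plane
        normal = normal / norm
        d = -normal @ p0
        # Consensus set: points closer to the plane than the threshold.
        inliers = np.abs(points @ normal + d) < dist_thresh
        if inliers.sum() > best_inliers.sum():
            best_inliers, best_model = inliers, (normal, d)
    return best_model, best_inliers


# Toy data: 200 noisy points on the plane z = 0.5 plus 50 scattered outliers.
rng = np.random.default_rng(42)
plane_pts = np.column_stack([
    rng.uniform(0, 1, 200),
    rng.uniform(0, 1, 200),
    0.5 + rng.normal(0, 0.005, 200),
])
outliers = rng.uniform(0, 1, (50, 3))
cloud = np.vstack([plane_pts, outliers])
(normal, d), inliers = ransac_plane(cloud)
```

To extract several planar segments, the same routine would typically be run repeatedly, removing the inliers of each detected plane before the next run.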

Further results

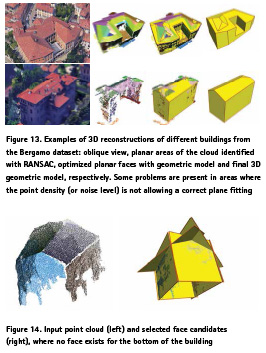

The proposed methodology, which includes automated image segmentation for vegetation mask generation, separation of the point cloud using this mask, segmentation and classification of the separated point clouds, and 3D reconstruction, was also tested on the Bergamo dataset. The given orthophoto and the generated vegetation mask are shown in Figure 12b, whereas the application of the image mask to the dense point cloud produced the segmented point cloud of Figure 12b. Separating the vegetation makes the subsequent steps of point cloud segmentation and classification easier. The classification results shown in Figure 12c-d and the 3D building reconstruction results shown in Figure 13 demonstrate that our methodology also provides significant results for dense urban areas. Yet, in some cases we could not reconstruct the building because of a lack of points representing the ground level. An example can be seen in Figure 14.

Conclusions

The paper reported on ongoing work on the identification and modelling of buildings in photogrammetric point clouds, without the aid of ancillary information such as footprints. The achieved results show that pixel-based orthophoto segmentation can successfully generate a vegetation mask even for high-resolution images. Such a mask aids the classification of point clouds to identify man-made structures. The point cloud segmentation approach, based on a region growing algorithm, proved to be a suitable way to distinguish objects within the point cloud (i.e. building roofs, facades, roads, pavements, trees, grass areas) and is thus useful for classification and modelling purposes. The geometric reconstruction of buildings, based on RANSAC and plane fitting, produced successful results, although the modelling is not completely correct where few points are available on facades or roofs.

Among all processes, two main tasks are currently handled manually: the setting of the region growing parameters, and the selection of segment numbers from the segmented point cloud for merging. As this is ongoing research, these two steps are going to be automated in the future. As further future work, we would like to bring all functionalities into one environment and upscale the methodology to an entire city.

Acknowledgments

The authors are thankful to Liangliang Nan (3D Geoinformatics group, TU Delft, The Netherlands) for his kind support.

References

Adam, A., Chatzilari, E., Nikolopoulos, S., Kompatsiaris, I., 2018. H-Ransac: A Hybrid Point Cloud Segmentation Combining 2D and 3D Data. ISPRS Annals of Photogrammetry, Remote Sensing & Spatial Information Sciences 4.

Bajcsy, R., Tavakoli, M., 1976. Computer Recognition of Roads from Satellite Pictures. IEEE Transactions on Systems, Man, and Cybernetics, 623-637.

Becker, C., Häni, N., Rosinskaya, E., d’Angelo, E., Strecha, C., 2017. Classification of Aerial Photogrammetric 3D Point Clouds. arXiv preprint arXiv:1705.08374.

Biljecki, F., Ledoux, H., Stoter, J., 2016. An Improved LOD Specification for 3D Building Models. Computers Environment and Urban Systems 59, 25-37.

Biljecki, F., Stoter, J., Ledoux, H., Zlatanova, S., Coltekin, A., 2015. Applications of 3D City Models: State of the Art Review. Isprs International Journal of Geo-Information 4, 2842-2889.

Blaschke, T., Hay, G.J., Kelly, M., Lang, S., Hofmann, P., Addink, E., Feitosa, R.Q., Van der Meer, F., Van der Werff, H., Van Coillie, F., 2014. Geographic Object-Based Image Analysis - Towards a New Paradigm. ISPRS Journal of Photogrammetry and Remote Sensing 87, 180-191.

Chaudhuri, B., Demir, B., Chaudhuri, S., Bruzzone, L., 2018. Multilabel Remote Sensing Image Retrieval Using a Semisupervised Graph-Theoretic Method. IEEE Transactions on Geoscience and Remote Sensing 56, 1144-1158.

Dorninger, P., Nothegger, C., 2007. 3D Segmentation of Unstructured Point Clouds for Building Modelling. International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences 35, 191-196.

Dorninger, P., Pfeifer, N., 2008. A Comprehensive Automated 3D Approach for Building Extraction, Reconstruction, and Regularization from Airborne Laser Scanning Point Clouds. Sensors 8, 7323-7343.

Douillard, B., Underwood, J., Kuntz, N., Vlaskine, V., Quadros, A., Morton, P., Frenkel, A., 2011. On the Segmentation of 3D LIDAR Point Clouds, Robotics and Automation (ICRA), 2011 IEEE International Conference on. IEEE, pp. 2798-2805.

Duarte, D., Nex, F., Kerle, N., Vosselman, G., 2017. Towards a More Efficient Detection of Earthquake Induced Façade Damages Using Oblique UAV Imagery. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci 42, W6.

Estivill-Castro, V., 2002. Why So Many Clustering Algorithms: A Position Paper. ACM SIGKDD explorations newsletter 4, 65-75.

Fischler, M.A., Bolles, R.C., 1981. Random Sample Consensus: A Paradigm for Model Fitting with Applications to Image Analysis and Automated Cartography. Commun. ACM 24, 381-395.

Gerke, M., Nex, F., Remondino, F., Jacobsen, K., Kremer, J., Karel, W., Huf, H., Ostrowski, W., 2016. Orientation of Oblique Airborne Image Sets - Experiences from the ISPRS/EuroSDR Benchmark on Multi-Platform Photogrammetry. International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences 41, 185-191.

González-Cuéllar, F., Obregón-Neira, N., 2013. Self-Organizing Maps of Kohonen As a River Clustering Tool within the Methodology for Determining Regional Ecological Flows ELOHA. Ingeniería y Universidad 17, 311-323.

Haala, N., Kada, M., 2010. An Update on Automatic 3D Building Reconstruction. Isprs Journal of Photogrammetry and Remote Sensing 65, 570-580.

Hackel, T., Savinov, N., Ladicky, L., Wegner, J.D., Schindler, K., Pollefeys, M., 2017. Semantic3D. Net: A New Large-Scale Point Cloud Classification Benchmark. arXiv preprint arXiv:1704.03847.

He, S., Moreau, G., Martin, J.-Y., 2012. Footprint-Based 3D Generalization of Building Groups for Virtual City Visualization. Proceedings of the GEO Processing.

Hu, P., Dong, Z., Yuan, P., Liang, F., Yang, B., 2018. Reconstruction of 3D Models From Point Clouds With Hybrid Representation. International Archives of the Photogrammetry, Remote Sensing & Spatial Information Sciences 42.

Kanezaki, A., Matsushita, Y., Nishida, Y., 2016. RotationNet: Joint Object Categorization and Pose Estimation Using Multiviews from Unsupervised Viewpoints. arXiv preprint arXiv:1603.06208.

Kluckner, S., Mauthner, T., Roth, P.M., Bischof, H., 2009. Semantic Classification in Aerial Imagery by Integrating Appearance and Height Information, Asian Conference on Computer Vision. Springer, pp. 477-488.

Kohonen, T., Schroeder, M., Huang, T., Maps, S.-O., 2001. Self-Organizing Maps. Inc., Secaucus, NJ 43.

Lafarge, F., Mallet, C., 2012. Creating Large-Scale City Models From 3D-Point Clouds: A Robust Approach with Hybrid Representation. International journal of computer vision 99, 69-85.

Macher, H., Landes, T., Grussenmeyer, P., 2017. From Point Clouds to Building Information Models: 3D Semi-Automatic Reconstruction of Indoors of Existing Buildings. Applied Sciences 7, 1030.

Malihi, S., Zoej, M.V., Hahn, M., Mokhtarzade, M., Arefi, H., 2016. 3D Building Reconstruction Using Dense Photogrammetric Point Cloud. International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Prague, Czech Republic 3, 71-74.

Montoya-Zegarra, J.A., Wegner, J.D., Ladický, L., Schindler, K., 2015. Semantic Segmentation of Aerial Images in Urban Areas with Class-Specific Higher-Order Cliques. ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences 2, 127.

Morel, J.-M., Solimini, S., 2012. Variational Methods in Image Segmentation: with Seven Image Processing Experiments. Springer Science & Business Media.

Müller Arisona, S., Zhong, C., Huang, X., Qin, R., 2013. Increasing Detail of 3D Models Through Combined Photogrammetric and Procedural Modelling. Geo-spatial Information Science 16, 45-53.

Müller, P., Wonka, P., Haegler, S., Ulmer, A., Van Gool, L., 2006. Procedural Modeling of Buildings, Acm Transactions On Graphics (Tog). ACM, pp. 614-623.

Müller, P., Zeng, G., Wonka, P., Van Gool, L., 2007. Image-based Procedural Modeling of Facades, ACM Transactions on Graphics (TOG). ACM, p. 85.

Musialski, P., Wonka, P., Aliaga, D.G., Wimmer, M., Van Gool, L., Purgathofer, W., 2013. A Survey of Urban Reconstruction, Computer graphics forum. Wiley Online Library, pp. 146-177.

Nan, L., 2018. Software & code – Liangliang Nan, https://3d.bk.tudelft.nl/liangliang/software.html.

Nan, L., Wonka, P., 2017. PolyFit: Polygonal Surface Reconstruction from Point Clouds, Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Venice, Italy, pp. 2353-2361.

Nex, F., Remondino, F., Gerke, M., Przybilla, H.-J., Bäumker, M., Zurhorst, A., 2015. ISPRS Benchmark for Multi-Platform Photogrammetry. ISPRS Annals of Photogrammetry, Remote Sensing & Spatial Information Sciences 2.

Nguyen, A., Le, B., 2013. 3D Point Cloud Segmentation: A Survey, RAM, pp. 225-230.

Parish, Y.I., Müller, P., 2001. Procedural Modeling of Cities, Proceedings of the 28th annual conference on Computer graphics and interactive techniques. ACM, pp. 301-308.

Qi, C.R., Yi, L., Su, H., Guibas, L.J., 2017. Pointnet++: Deep Hierarchical Feature Learning On Point Sets In A Metric Space, Advances in Neural Information Processing Systems, pp. 5099-5108.

Ramiya, A.M., Nidamanuri, R.R., Krishnan, R., 2017. Segmentation Based Building Detection Approach from Lidar Point Cloud. The Egyptian Journal of Remote Sensing and Space Science 20, 71-77.

Remondino, F., Spera, M.G., Nocerino, E., Menna, F., Nex, F., 2014. State of the Art in High Density Image Matching. The Photogrammetric Record 29(146), 144-166.

Rottensteiner, F., Sohn, G., Gerke, M., Wegner, J.D., Breitkopf, U., Jung, J., 2014. Results of the ISPRS benchmark on urban object detection and 3D building reconstruction. Isprs Journal of Photogrammetry and Remote Sensing 93, 256-271.

Rusu, R.B., Cousins, S., 2011. 3D is here: Point cloud library (PCL), Robotics and automation (ICRA), 2011 IEEE International Conference on. IEEE, pp. 1-4.

Sampath, A., Shan, J., 2010. Segmentation and Reconstruction Of Polyhedral Building Roofs from Aerial Lidar Point Clouds. IEEE Transactions on geoscience and remote sensing 48, 1554-1567.

Schindler, K., 2012. An Overview and Comparison of Smooth Labeling Methods for Land-Cover Classification. IEEE transactions on geoscience and remote sensing 50, 4534-4545.

Teboul, O., Simon, L., Koutsourakis, P., Paragios, N., 2010. Segmentation of Building Facades Using Procedural Shape Priors.

Tokarczyk, P., Wegner, J.D., Walk, S., Schindler, K., 2015. Features, Color Spaces, and Boosting: New Insights on Semantic Classification of Remote Sensing Images. IEEE Transactions on Geoscience and Remote Sensing 53, 280-295.

Toschi, I., Nocerino, E., Remondino, F., Revolti, A., Soria, G., Piffer, S., 2017a. Geospatial Data Processing for 3D City Model Generation, Management and Visualization. International Archives of the Photogrammetry, Remote Sensing & Spatial Information Sciences 42.

Toschi, I., Ramos, M., Nocerino, E., Menna, F., Remondino, F., Moe, K., Poli, D., Legat, K., Fassi, F., 2017b. Oblique Photogrammetry Supporting 3D Urban Reconstruction of Complex Scenarios. International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, 519-526.

Ushakov, S., 2018. Region Growing Segmentation, http://pointclouds.org.

Vanegas, C.A., Aliaga, D.G., Benes, B., 2010. Building Reconstruction using Manhattan-World Grammars. 2010 Ieee Conference on Computer Vision and Pattern Recognition (Cvpr), 358-365.

Vosselman, G., Dijkman, S., 2001. 3D Building Model Reconstruction from Point Clouds and Ground Plans. International archives of photogrammetry remote sensing and spatial information sciences 34, 37-44.

Wichmann, A., Agoub, A., Kada, M., 2018. Roofn3D: Deep Learning Training Data For 3D Building Reconstruction. International Archives of the Photogrammetry, Remote Sensing & Spatial Information Sciences 42.

Woo, H., Kang, E., Wang, S., Lee, K.H., 2002. A New Segmentation Method for Point Cloud Data. International Journal of Machine Tools and Manufacture 42, 167-178.

Wu, Z., Song, S., Khosla, A., Yu, F., Zhang, L., Tang, X., Xiao, J., 2015. 3D Shapenets: A Deep Representation for Volumetric Shapes, Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 1912-1920.

Xiong, B., Elberink, S.O., Vosselman, G., 2014. Building Modeling from Noisy Photogrammetric Point Clouds. ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences 2, 197.

The paper was presented at 13th 3D GeoInfo Conference, 1–2 October 2018, Delft, The Netherlands.
