
Tracking cities from the sky with Artificial Intelligence


Cameras that fly blind

The UN estimates that more than 55% of the world’s population, over 4.07 billion people, currently lives in urban areas. This proportion is growing rapidly, especially in developing nations, and is projected to reach 68% by 2050. Urban living offers several distribution and economic efficiencies. It often means better access to resources, manufactured goods, services, and infrastructure, which attracts migrants seeking a higher quality of life for themselves and their families. With such a large, concentrated human population, it is critical to track the growth of urban centers and to assess their access to resources, their vulnerability, and the connectivity needs of the people living there. Additionally, when unforeseen events like natural disasters strike, it becomes critical to map affected areas for disaster relief and mitigation efforts.

The distribution efficiency of cities is based on a simple principle: a piece of infrastructure has limited geographical reach. There is only a certain distance people will be willing to travel to benefit from the services offered by that infrastructure. Thus it is advantageous to encourage high-density settlement so that fewer pieces of infrastructure need to be built. The downside of high-density living is that distributing essential resources like water, electricity, and sewage services becomes more challenging. It can also be more difficult to control the spread of disease, prevent crime, and provide timely emergency response. This necessitates advanced and thorough planning and preparedness by the governing bodies of these areas. Unfortunately, urban growth in several parts of the world happens unplanned, often among economically disadvantaged sections of society that have sometimes been forced by circumstances to make this migration. These populations are more vulnerable to economic and natural hardships. Thus it is critical that growth in urban areas be continually monitored by means of surveys and updated maps. Not only can this help drive policy and governance decisions, but current mapping data is also crucial for any kind of disaster response.

Manual mapping and surveying can be prohibitively expensive. With the advent of space-based imagery, remote sensing has become the backbone of map generation and a crucial input for the decision support systems used by local governing bodies. The most common type of space-based imagery is produced by optical imaging sensors.

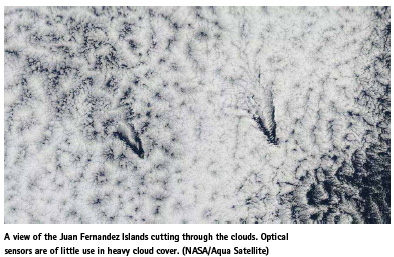

These sensors are not unlike the digital cameras most of us are familiar with. They essentially take a photograph of the land surface as they fly past in their orbits several hundred kilometers above ground. Optical imaging satellites have become ubiquitous for mapping and monitoring the Earth’s surface; however, their operations are easily impeded by inclement weather. This becomes a matter of concern for tropical countries, like India, where the monsoon season lasts up to 5 months, during which the probability of obtaining a cloud-free image is quite low. Thus it can be very difficult to use these sensors for operational urban monitoring. Given that the fastest growing populations reside in cities in these tropical regions, this is a serious impediment.

This issue is amplified in the case of a natural calamity like a hurricane, where the ongoing weather makes optical imaging futile. In a situation like a flood induced by heavy rain, it is crucial to obtain up-to-the-minute information about the affected populations. But because these sensors cannot image through clouds, imagery can only be obtained after the rains have subsided and the clouds have cleared. This is often too late and leaves the disaster response infrastructure stressed. If flooding could be monitored as it happens, warnings and instructions could be issued at a much more granular level. For example, evacuation orders could be issued only to low-lying areas, or areas immediately at risk, while avoiding spreading panic in areas currently deemed safe. This can prevent critical transport routes from becoming unnecessarily crowded. Additionally, disaster mitigation teams can better allocate their resources to the most critical areas.

Let there be electromagnetic waves

Over the years, several radar imaging satellites have been launched by government agencies and private entities for disaster response and monitoring, especially during monsoon seasons. Radar satellites are active sensors, which carry their own source of illumination. They operate by pulsing a target area of the ground with electromagnetic energy and then coherently receiving the pulse reflected from the target. As they pass in their orbits, they look at the ground target from several different observation angles, and the pulses from the target are later summed coherently. This combination of many observations is called synthetic aperture processing, and it improves the resolution of the image. By increasing the dwell time, that is, how long the target is observed as the sensor passes, a more detailed image can be created independent of the size of the antenna or the sensing wavelength. This makes radar sensors incredibly flexible. Radio waves pass easily through clouds and other atmospheric impediments like aerosols, so radar satellites can image through clouds. Additionally, since they carry their own source of illumination, they can operate at night, making them highly versatile and apt for disaster response and geo-intelligence surveillance.

While radar images can be ideal for imaging and monitoring urban areas in high resolution, uninhibited by the monsoon seasons, they do have a downside: radar images can be very difficult to interpret. Unlike optical sensors, which passively image the area directly under them, radar sensors pulse energy at a target, and this must be done at a slant angle to avoid confusing echoes from equidistant points under the sensor. This configuration leads to several challenging geometric artifacts in the images. Tall buildings and mountains appear to “fold over” because echoes from elevated surfaces and from the base return at the same time. In addition, the echoes from the many scatterers within each resolution cell interfere with each other constructively and destructively, leading to a speckled effect in the final imagery. This speckle is characteristic of all coherent imaging, including ultrasound, and can make algorithmic interpretation of these images challenging.
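The speckle effect can be reproduced with a toy simulation. The sketch below is illustrative only and is not taken from the researchers’ work: it assumes each pixel’s echo is the coherent sum of many independent scatterers with random phases (a common textbook model of speckle), and the function names `simulate_speckle` and `block_average` are hypothetical.

```python
import numpy as np

def simulate_speckle(shape, n_scatterers=50, seed=0):
    """Intensity image of a uniform surface under a simple speckle model."""
    rng = np.random.default_rng(seed)
    # Assumption: every scatterer returns a unit-amplitude echo with a random phase.
    phases = rng.uniform(0.0, 2.0 * np.pi, size=(n_scatterers, *shape))
    # Coherent sum: echoes add as complex numbers, so they can reinforce or cancel.
    field = np.exp(1j * phases).sum(axis=0) / np.sqrt(n_scatterers)
    return np.abs(field) ** 2

def block_average(intensity, block=4):
    """Average non-overlapping block x block windows to suppress speckle."""
    rows = intensity.shape[0] // block * block
    cols = intensity.shape[1] // block * block
    tiles = intensity[:rows, :cols].reshape(rows // block, block, cols // block, block)
    return tiles.mean(axis=(1, 3))

image = simulate_speckle((128, 128))
smoothed = block_average(image, block=4)

# Ratio of standard deviation to mean: a simple measure of how grainy the image is.
cv_raw = image.std() / image.mean()
cv_smoothed = smoothed.std() / smoothed.mean()
```

Even though the simulated surface is perfectly uniform, the raw image is grainy, and averaging neighbouring pixels trades spatial resolution for a smoother result. That trade-off is one reason simple filters struggle on radar imagery.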

The difficulty of interpretation is compounded for advanced imaging modalities like polarimetric radar. In this mode, the radar transmits alternate pulses with closely controlled polarization configurations and receives each coherently. While this allows much more detailed characterization of the target, it also greatly increases the difficulty of interpretation. For instance, an urban building can be expected to return a strong echo in vertical polarizations. But where buildings are closely spaced, the vertical and horizontal returns may interfere to form a helical return. It is worth noting that several polarimetric radar missions, such as the Sentinel-1 constellation currently in orbit and the soon-to-be-launched NISAR sensor, represent significant public investment and are funded by government agencies. The imagery is freely distributed and plentiful, and being able to fully exploit the information captured by these systems is in the taxpayer’s best interest.

Clever but slow humans

Exploiting the information available from these sensors often requires a highly skilled professional who is intimately familiar with radar scattering physics. This is especially true for urban areas, which are challenging to identify because their complex shapes lead to multiple scattering and sometimes reflect the radar pulse away from the sensor. Manual interpretation is limited by the capacity of the individual expert to parse data, and hiring more experts can be prohibitively expensive. Besides, when production relies on manual processes, it is difficult to guarantee mapping quality and delivery timelines without additional steps and effort. For the sake of both quality and cost, it is desirable to automate urban mapping.

However, due to the unique challenges of radar images, it is difficult to apply traditional image processing and computer vision algorithms to radar data. Even simple edge detection and filtering algorithms are unable to produce satisfactory output because of the challenging image artifacts. Artificial intelligence presented itself as an interesting direction that could potentially help overcome the challenges of automating a complex problem. Several recent advances and successes in this fledgling field prompted researchers Dr. Shaunak De and Dr. Avik Bhattacharya at the Indian Institute of Technology Bombay to explore a solution to radar analysis using the technology.

First was the problem of even finding urban areas. Due to the complex nature of radar scattering, even something relatively simple like finding all built-up structures can be a daunting task. The first breakthrough came when the researchers identified that, at the scale of the sensing wavelength, the architectural details of buildings cease to matter, and the radar is more or less sensitive only to the coarse shape of the building. While this idea is relatively simple at its core, it provides the key simplification that makes it possible to teach a computer algorithm the physics of radar scattering. As an interesting side note, the inspiration behind this idea came from SimCity, a city management simulation game. The researchers noticed that while scrolling through the rendered city areas in the game, they were able to identify different urban neighborhoods faster if they ignored the details in the renders and instead focused on overall building and neighborhood geometry. If it helps our own cognitive systems, it is only logical that it would help an artificial one.

Teaching a computer physics

To implement this idea, they created several million 3-D simulations of radar scattering in common urban area structures like a cluster of houses, or a pair of skyscrapers. These models were also rotated about the radar line of sight to simulate the effect of a change in orbits or sensing geometry. Real scattering targets from different urban radar acquisitions were also selected as training data. They then trained a deep learning algorithm to learn the associations between the building geometry and the radar scattering.
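The rotation step can be illustrated on a much simpler object than a full 3-D simulation. The sketch below is an assumption about representation, not the researchers’ pipeline: it treats a target as a 2×2 complex scattering matrix in the horizontal/vertical basis and applies the standard rotation about the radar line of sight, S(θ) = R(θ) S R(θ)ᵀ. The function names `rotate_about_line_of_sight` and `augment` are hypothetical.

```python
import numpy as np

def rotate_about_line_of_sight(S, theta_deg):
    """Rotate a 2x2 scattering matrix by theta degrees about the line of sight."""
    theta = np.deg2rad(theta_deg)
    c, s = np.cos(theta), np.sin(theta)
    R = np.array([[c, s], [-s, c]])
    # R is orthogonal, so its transpose is also its inverse.
    return R @ S @ R.T

def augment(S, angles_deg):
    """Return one rotated copy of S per angle, stacked along the first axis."""
    return np.stack([rotate_about_line_of_sight(S, a) for a in angles_deg])

# Idealized dihedral (double-bounce) target, e.g. a wall meeting the ground.
dihedral = np.array([[1, 0], [0, -1]], dtype=complex)

angles = np.arange(-45, 46, 15)      # -45, -30, ..., 45 degrees
samples = augment(dihedral, angles)  # shape (7, 2, 2)

# Total scattered power of each rotated copy.
span = (np.abs(samples) ** 2).sum(axis=(1, 2))
```

Rotation leaves the total scattered power unchanged but redistributes it between channels: at 45 degrees the dihedral’s energy moves entirely into the cross-polarized terms. Showing a learner many such rotated copies is one way to keep it from tying a target’s identity to a single viewing geometry.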

Deep learning is a technology that loosely simulates the function of clusters of biological neurons, with the aim of learning concepts in a way similar to how our own cognitive systems grow, train and learn over time. It learns much like a child does: through guided training, trial and error, rewards for “good” or correct results, and punishments for incorrect ones.

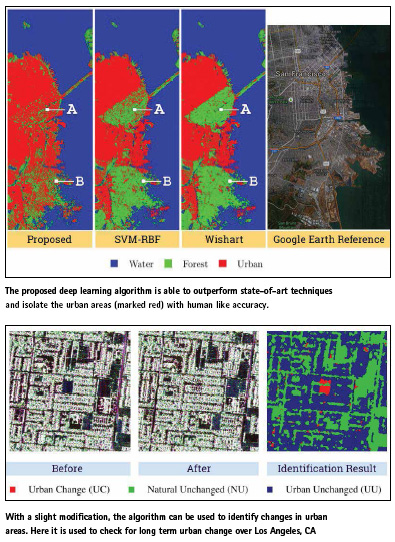

In essence, the algorithm was taught the basic principles of radar scattering, just as a human expert would be trained. This allowed the algorithm to generalize the nature of radar targets in urban areas much better and to respond to targets it had never seen in training by inferring from scattering physics. The algorithm has been demonstrated to outperform state-of-the-art techniques and has human-like target identification accuracy. Although the training is a time-consuming process, once the model is created it can be applied to a large number of radar images in seconds. Thus it is now possible to map urban targets efficiently, cheaply and accurately in large swaths of polarimetric radar data.

One advantage of neuron-inspired algorithms like deep learning is that although they are computationally intensive to train, they are incredibly efficient to run. Teaching the algorithm takes a long time and considerable computational resources, but once trained it performs the taught task quickly, much like a trained human worker. So while training can take a bit of tweaking and work by an expert data scientist, in operation the method is extremely fast and stable. In addition, much of the computation can be performed on the consumer graphics processing hardware commonly used to play video games, which avoids the need for expensive hardware to make this system operational.

Sentinels in the sky

Such an algorithm can be tasked with monitoring the terabytes of information received from orbiting radar satellites and can automatically generate maps of urban areas and monitor urban sprawl, temporary and unorganized settlements, and illegal encroachment. This can in turn help urban planners meet the needs of these populations and distribute resources fairly and efficiently. Perhaps the most compelling use case for this technology is natural calamities like hurricanes, where change maps of urban areas must be generated quickly so that the destruction of property can be monitored and disaster relief efforts directed correctly. In this case, optical imaging is completely impeded by the weather system, and radar data coupled with this automated algorithm for generating urban and urban change maps can be lifesaving. The technology also has applications in military surveillance. Because radar can image at night, the algorithm can automatically detect bunkers and encampments constructed by insurgents under cover of night, helping security forces plan a course of action.

Once routine maps of cities are created, several multi-temporal studies such as change detection and monitoring can be carried out. These can be important inputs for several economic monitoring projects and indices. In fact, with a slight modification to the current algorithm, automated maps of changed urban areas can be generated as well. Instead of teaching the algorithm only to distinguish between urban and non-urban areas, it can be presented with examples in pairs: some in which no significant change has occurred between the two images of an area, and some in which construction or destruction has occurred. By learning the differences in scattering mechanisms, the algorithm can then be used to quickly mark changed urban areas in pairs of images, making it valuable for long-term city monitoring as well as for disaster recovery efforts where significant damage has occurred in urban areas.
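The paired-example idea can be made concrete with a small data-preparation sketch. It is a hypothetical illustration rather than the researchers’ code: the patches are random placeholders instead of real radar data, and the helper name `make_pair` is invented for this example.

```python
import numpy as np

def make_pair(before, after, changed):
    """Stack two co-registered patches of the same area into one labelled example."""
    if before.shape != after.shape:
        raise ValueError("patches must cover the same pixels")
    # Channel 0 is the earlier acquisition, channel 1 the later one.
    return np.stack([before, after]), int(changed)

rng = np.random.default_rng(0)
patch = rng.random((16, 16))   # placeholder for a real radar patch
rebuilt = patch.copy()
rebuilt[4:12, 4:12] += 1.0     # pretend a new building brightened this block

examples = [
    make_pair(patch, patch, changed=False),   # nothing happened between dates
    make_pair(patch, rebuilt, changed=True),  # construction between dates
]
inputs = np.stack([x for x, _ in examples])   # shape (2, 2, 16, 16)
labels = np.array([y for _, y in examples])   # 0 = unchanged, 1 = changed
```

A classifier trained on inputs of this shape sees both dates at once, so it can learn what a difference between acquisitions looks like rather than what a single scene looks like.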

In the future, this approach can be extended to several other applications like crop identification and snow-cover monitoring. More applications will allow a better understanding of dynamic systems on Earth, independent of the weather systems in the atmosphere, and will fully exploit the potential of the electronic sentinels keeping watch over us from space.
